ABSTRACT

Beans are a legume widely grown and consumed worldwide and a staple food for people in developing countries. Nitrogen (N) is the most yield-limiting nutrient, and foliar analysis is crucial to ensure balanced nitrogen fertilization. However, conventional methods are time-consuming, which calls for new technologies to optimize the supply of N. In this work, the performance of two deep learning models in classifying leaf nitrogen in beans from RGB images was evaluated and compared. The cultivar BRS Estilo was grown in a greenhouse in a completely randomized design with 4 nitrogen doses (0, 25, 50 and 75 kg N ha-1) and 12 replicates. The image bank comprised 4 subfolders, each containing 500 images of 224 × 224 pixels obtained from plants grown under the different N doses. Matlab© R2022b was used to implement the models. ResNet-50 outperformed the CNN, with a test accuracy of 84% versus 82% for the CNN. The use of images combined with deep learning can be a promising alternative to slow laboratory analyses, optimizing the estimation of leaf N and enabling rapid intervention by the producer to achieve higher productivity.

KEYWORDS

CNN; ResNet-50; nutritional condition; optimization; artificial intelligence

INTRODUCTION

Beans (Phaseolus vulgaris) are a legume of great importance, used both for human food and animal feed (Yu et al., 2023). Grown in different regions around the world, this crop plays a key role in ensuring food security and livelihoods for millions of people. Global bean production reached approximately 28 million tons in 2021 (FAOSTAT, 2023). Brazil is considered the second largest producer in the world, with the states of Paraná, Minas Gerais, Mato Grosso and Goiás standing out as the main producers, responsible for 64% of national production in the 2020/2021 harvest (CONAB, 2023). In addition to being rich in protein, both in the seeds and in the straw, beans stand out for their association with rhizobia in the roots, which fix nitrogen efficiently and contribute to improved soil fertility and erosion control (De Ron & Rodiño, 2024). These factors make beans an essential component of agricultural systems around the world (Muoni et al., 2019).

Nitrogen is a crucial nutrient for high bean yields because it is a constituent of chlorophyll and proteins and is directly involved in photosynthesis. Constant monitoring of the content of this macronutrient in the plants is therefore needed for precise management of nitrogen fertilization. Over-fertilization can cause a number of problems, including increased costs, wasted resources, plant lodging, and environmental pollution (Sun et al., 2023). As standard practice for monitoring plant N status, experts often inspect leaves visually or collect samples for laboratory analysis (Ding et al., 2024). However, this process is time-consuming, expensive, and error-prone: it requires qualified professionals to visit the field and examine the plants in person, which compromises both the efficiency and the scalability of monitoring, especially on large agricultural properties. In addition, human visual observation is subjective, resulting in inconsistent diagnoses (Wang et al., 2022).

In view of this, more efficient and accurate technologies are needed, such as computer vision, which has been transforming the agricultural industry (Ghazal et al., 2024). With advanced deep learning algorithms, these tools offer objective, accurate, and scalable solutions for plant identification, disease diagnosis, and nutritional deficiency analysis, among other applications. In this scenario, convolutional neural networks (CNNs) have shown promise due to their ability to learn complex patterns in large visual datasets by extracting detailed features from the training images (Singh et al., 2020).

Over the past few decades, CNNs have played a key role in the evolution of visual recognition, with accuracy increasing steadily from the initial architectures to the sophisticated deep learning models used today (Shin et al., 2021). CNNs have overcome the limitations of traditional approaches, excelling in a wide range of tasks such as image classification, object detection, and semantic segmentation (Teng et al., 2019). This evolution has made CNNs essential tools for extracting detailed information from complex visual data, transforming several industries and changing the way visual information is processed (Ding et al., 2024).

Several studies have shown that convolutional neural networks offer a promising approach for recognizing visual patterns in plants and detecting nutritional deficiencies. In a comparative study, Tran et al. (2019) evaluated deep CNNs for predicting and classifying macronutrient deficiencies in tomato plants. Under real-world conditions, an ensemble-averaging model reached 91% accuracy, compared with 87.27% for the Inception-ResNet v2 model and 79.09% for the Autoencoder. Similarly, Ramos-Ospina et al. (2023) applied deep transfer learning to classify phosphorus nutritional states in corn leaves, achieving an accuracy of 96% with the DenseNet201 model.

Beyond the range of applications of deep networks, training time and the total cost of processing images call for more efficient algorithms. ResNet-50 is a viable alternative because residual learning allows very deep networks to be trained without the accuracy degradation observed in plain deep networks (He et al., 2016). It is a deep convolutional neural network designed for pattern recognition in images and objects (Arnob et al., 2025). Accordingly, ResNet-50 has been explored in various fields: in agriculture, for plant disease identification (Askr et al., 2024), mineral nutrition of strawberry plants (Regazzo et al., 2024), and classification and quality detection of rice cultivars (Razavi et al., 2024); in the environmental sector, for waste recognition in sustainable urban development (Zhou et al., 2024); in medicine, for early detection and classification of Alzheimer's disease (Nithya et al., 2024); and in the energy sector, for fault identification in distribution networks (Wang et al., 2025).

The residual network (ResNet) family includes versions such as ResNet-18, ResNet-50, and ResNet-101, with 18, 50, and 101 weighted layers, respectively. These networks feature skip (shortcut) connections between residual blocks, which allow important features to bypass some layers and proceed to the upper layers, thereby preserving information useful for feature extraction and network learning (Bohlol et al., 2025).

Therefore, using neural networks to classify leaf nitrogen levels in common bean is a promising approach that can provide rapid estimation of leaf N from images, optimizing fertilization management and increasing productivity and sustainability.

In this sense, the objective of this work was to evaluate and compare the performance of two deep learning architectures (CNN and ResNet-50) in classifying the nutritional condition of bean plants subjected to nitrogen doses (0, 25, 50 and 75 kg ha-1) using RGB digital images.

MATERIAL AND METHODS

Study site and experimental design

The experiment was carried out in a greenhouse at the Faculty of Animal Science and Food Engineering of the University of São Paulo (Faculdade de Zootecnia e Engenharia de Alimentos da Universidade de São Paulo - FZEA-USP), in Pirassununga, SP, Brazil (21°58'39.8'' S, 47°26'23'' W). According to the Köppen classification, the climate of the study area is humid subtropical (Cw), with an average annual temperature of 20.6 °C and average annual rainfall of 1238 mm (Alvares et al., 2013).

A completely randomized design was used with four levels of nitrogen fertilization (0, 25, 50 and 75 kg N ha-1) and 12 replicates. The seeds of the BRS Estilo cultivar were provided by the Brazilian Agricultural Research Corporation (EMBRAPA), located in Santo Antônio de Goiás, Goiás/Brazil, an institution that works with bean breeding. The substrate was a mixture of 43% soil (Quartzarenic Neosol), 28.5% crushed sugarcane straw and 28.5% tanned cattle manure. This combination was intended to keep the soil uncompacted, allowing proper development of the plants' root systems. Table 1 contains the physicochemical properties of each component of the substrate.

As starter fertilization, 40, 140 and 140 kg ha-1 of N, P2O5 and K2O, respectively, were applied. Nitrogen topdressing followed the technical recommendations of the fertilization manual of the Agronomic Institute of Campinas (Instituto Agronômico de Campinas - IAC) for an expected yield greater than five tons per hectare (Cantarella et al., 2022), using urea (46% N) as the nitrogen fertilizer. The N doses were applied at phenological stage V4, characterized by the full development of the first trifoliate leaf and the development of the first secondary branches.

Sowing was done in 5 L plastic pots filled with the substrate, with four seeds per pot. After 15 days, the two smallest plants were removed, leaving the two most vigorous plants in each pot. Within each pot, the two plants were spaced 10 cm apart, and rows of pots were spaced 0.4 m apart, equivalent to a field density of 250,000 plants per hectare. Throughout the experimental period, the plants were irrigated with a daily water depth of 5 mm. Temperature and relative humidity (daily mean, maximum and minimum) were recorded daily with a digital thermo-hygrometer, as shown in Figure 1.
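The field-equivalent density follows from the spacing: each plant occupies 0.10 m × 0.40 m = 0.04 m² of ground, i.e., 25 plants per m², and 1 ha = 10,000 m². A minimal check, assuming the 10 cm in-row and 0.4 m between-row spacings fully describe the area allotted to each plant:

```python
def plants_per_hectare(in_row_m: float, between_rows_m: float) -> float:
    """Plant density (plants/ha) from in-row and between-row spacing in metres."""
    area_per_plant_m2 = in_row_m * between_rows_m  # ground area allotted to one plant
    return 10_000 / area_per_plant_m2              # 1 ha = 10,000 m²

density = plants_per_hectare(0.10, 0.40)
print(f"{density:,.0f} plants per hectare")  # 250,000 plants per hectare
```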

Leaf collection, assembly of the image bank and quantification of leaf nitrogen

At 32 days after sowing, 36 leaflets from 12 plants were sampled per treatment. Immediately after collection, the material was packed in sealed plastic bags, kept in a 20 L thermal box with ice and transported to the Laboratory of Machinery and Precision Agriculture (Laboratório de Máquinas e Agricultura de Precisão - LAMAP) for image acquisition. There, the leaflets were wiped with paper towels to remove excess water and dust. The initial images were acquired in RGB with an HP Scanjet 3800 scanner at a resolution of 1200 DPI (dots per inch) and saved in JPG (Joint Photographic Experts Group) format. A script developed in Matlab© R2022b was then used to cut the scanned leaf images into blocks of 224 × 224 pixels, generating the image bank (Figure 2).
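The cutting step can be illustrated with a short NumPy sketch. It is not the authors' Matlab© script: it assumes non-overlapping blocks taken on a regular grid, with partial blocks at the right and bottom edges discarded, and uses a synthetic array in place of a scanned leaflet. The subsequent manual selection of blocks is not reproduced.

```python
import numpy as np

def tile_image(img: np.ndarray, block: int = 224) -> list[np.ndarray]:
    """Split an H x W x C image into non-overlapping block x block tiles.

    Partial tiles at the right and bottom edges are discarded (an assumption;
    the original script may handle borders differently).
    """
    h, w = img.shape[:2]
    tiles = []
    for top in range(0, h - block + 1, block):
        for left in range(0, w - block + 1, block):
            tiles.append(img[top:top + block, left:left + block])
    return tiles

# Synthetic stand-in for a scanned leaflet (RGB, 8-bit).
scan = np.zeros((1000, 700, 3), dtype=np.uint8)
blocks = tile_image(scan)
print(len(blocks), blocks[0].shape)  # 12 (224, 224, 3)
```

A 1000 × 700 image yields 4 × 3 = 12 full blocks; in practice, blocks containing mostly background would still need to be filtered out before building the image bank.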

Other studies resized images, reducing them from 2048 × 1536 and 2736 × 1824 pixels to 224 × 224 pixels (Kawasaki et al., 2015; Oppenheim & Shani, 2017). In this work, 500 images of 224 × 224 pixels were manually selected per treatment and stored in folders labeled with the treatment names (T1: 0 kg ha-1 N; T2: 25 kg ha-1 N; T3: 50 kg ha-1 N; T4: 75 kg ha-1 N). This selection aimed to retain the images that best displayed the patterns produced by the N doses in the leaves. Immediately after scanning, the leaflets of each treatment were pooled, placed in labeled paper bags and dried in a forced-air oven at a constant 65 °C until constant weight. The dried material was ground into a fine powder in a mill and sent to the laboratory, where leaf N content was determined by the Kjeldahl method (Lynch & Barbano, 1999).
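In the Kjeldahl method, the ammonia released from the digested sample is distilled and titrated, and the titrant volume is converted into N content. The sketch below shows the usual titration arithmetic, assuming titration with an acid of known normality and a blank correction; the function name and the volumes and mass in the example are hypothetical, not data or procedures reported for this study.

```python
N_MOLAR_MASS = 14.007  # g/mol

def kjeldahl_n_g_per_kg(v_sample_ml: float, v_blank_ml: float,
                        acid_normality: float, sample_mass_g: float) -> float:
    """Leaf N (g per kg of dry matter) from Kjeldahl titration data (hypothetical helper)."""
    mol_n = (v_sample_ml - v_blank_ml) / 1000 * acid_normality  # mol of N titrated
    grams_n = mol_n * N_MOLAR_MASS                              # mass of N in the sample
    return grams_n / sample_mass_g * 1000                       # g N per kg of sample

# Hypothetical titration: 14.2 mL sample, 0.2 mL blank, 0.02 N acid, 0.1 g of dried leaf.
n_leaf = kjeldahl_n_g_per_kg(14.2, 0.2, 0.02, 0.1)
print(round(n_leaf, 1))  # 39.2
```

Dividing the result by 10 gives the same value as a percentage of dry matter.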

Description of the models and performance analysis

The models were implemented using scripts in Matlab© R2022b. Processing was performed on a Dell G15 5530 notebook equipped with a 13th Gen Intel(R) Core(TM) i5-13450HX processor (approximately 2.4 GHz), 16 GB of RAM and an NVIDIA GeForce RTX 3050 GPU (6 GB VRAM). The basic configurations of the CNN and ResNet-50 are shown in Figure 3.

The CNN is a sequential model with four two-dimensional convolutional layers (2D convolutional), each with a distinct filter size and number of filters. Convolutional layers are the basic building blocks of a CNN: they extract features from the input images, learning visual patterns (such as colors, shapes and textures) from the simplest to the most complex in successive layers (Yamashita et al., 2018). Applying the Rectified Linear Unit (ReLU) activation function after the convolutional layers introduces nonlinearity at a low computational cost (Taye, 2023). The rectangular filters (3 × 7 and 7 × 3) in the second and third convolutional layers, respectively, were intended to capture possible vertical and horizontal patterns. Batch normalization speeds up CNN training and helps prevent exploding or vanishing gradients (Luo et al., 2023). A subsampling layer (2D max pooling) then reduces the dimensionality of the activation maps, saving computational resources while preserving the most relevant information (Taye, 2023). Finally, a fully connected output layer is followed by the classification layer; the softmax activation function converts the outputs of this layer into probabilities assigned to each class, effectively turning the model into a classifier.
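The three nonlinear operations described above (ReLU, max pooling and softmax) can be sketched in NumPy on a small feature map. This is an illustration only; it assumes a 2 × 2 pooling window with stride 2, which the text does not specify, and the class scores are arbitrary values rather than model outputs.

```python
import numpy as np

def relu(x: np.ndarray) -> np.ndarray:
    """Rectified Linear Unit: keeps positive activations and zeroes the rest."""
    return np.maximum(x, 0.0)

def max_pool_2x2(fmap: np.ndarray) -> np.ndarray:
    """2 x 2 max pooling with stride 2 on an H x W feature map (H and W even)."""
    h, w = fmap.shape
    return fmap.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

def softmax(logits: np.ndarray) -> np.ndarray:
    """Convert class scores into probabilities that sum to 1."""
    e = np.exp(logits - logits.max())  # subtract the max for numerical stability
    return e / e.sum()

fmap = np.array([[1., -2., 3., 0.],
                 [4., 5., -6., 7.],
                 [-1., 0., 2., 2.],
                 [3., -4., 1., 8.]])
pooled = max_pool_2x2(relu(fmap))
print(pooled)  # [[5. 7.] [3. 8.]] (each value is the max of one 2 x 2 window)

probs = softmax(np.array([2.0, 1.0, 0.5, 0.1]))  # illustrative scores for T1..T4
print(probs.argmax())  # 0, i.e. class T1 has the highest probability
```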

Another deep learning architecture adopted was ResNet-50. This model is a Deep Neural Network (DNN) commonly used for image classification tasks. It was designed to address the vanishing-gradient problem in deep networks by incorporating skip connections, which let information bypass layers in the forward pass and let gradients flow directly back to earlier layers during training. ResNet-50 consists of 50 layers and uses residual blocks to propagate information efficiently across the network (Hassan et al., 2024).
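The core idea of a residual block is that the layers learn a residual mapping F(x) and the block outputs F(x) + x. The NumPy sketch below is deliberately simplified: dense matrices stand in for the convolutions and batch normalization is omitted, whereas the actual ResNet-50 blocks are bottlenecks of 1 × 1, 3 × 3 and 1 × 1 convolutions (He et al., 2016).

```python
import numpy as np

def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)

def residual_block(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Simplified residual block: y = ReLU(F(x) + x), with F(x) = W2 @ ReLU(W1 @ x)."""
    f = w2 @ relu(w1 @ x)  # residual mapping learned by the stacked layers
    return relu(f + x)     # identity shortcut adds the input back

x = np.array([0.5, 1.0, 2.0])
zeros = np.zeros((3, 3))
y = residual_block(x, zeros, zeros)
print(y)  # [0.5 1.  2. ] (with F(x) = 0 the block simply passes x through)
```

Because the identity path is always available, a block whose weights contribute little still preserves its input, which is why adding depth does not degrade performance the way it can in plain networks.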

Both models were trained for 30 epochs with a mini-batch size of 64, an initial learning rate of 0.001, a validation frequency (ValidationFrequency) of 50 and the cross-entropy loss function. Transfer learning was applied to ResNet-50 using weights pre-trained on the ImageNet dataset, while the custom CNN was trained from scratch on the dataset of this study with the same hyperparameters. To mitigate overfitting, batch normalization was adopted as the main measure. For each model, 1,600 blocks of 224 × 224 pixels were used for training (80%), 200 for validation (10%) and 200 for testing (10%).
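The 80/10/10 split and the resulting training schedule can be checked with a short sketch. It assumes the split was stratified (500 blocks per treatment, divided in the same proportions), which the text does not state, and assumes that ValidationFrequency is counted in iterations, as in Matlab© `trainingOptions`.

```python
import numpy as np

def stratified_split(labels, fractions=(0.8, 0.1, 0.1), seed=0):
    """Return train/validation/test index arrays with the same class proportions."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    parts = ([], [], [])
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))  # shuffle this class
        n_train = int(round(fractions[0] * len(idx)))
        n_val = int(round(fractions[1] * len(idx)))
        parts[0].extend(idx[:n_train])
        parts[1].extend(idx[n_train:n_train + n_val])
        parts[2].extend(idx[n_train + n_val:])
    return tuple(np.array(p) for p in parts)

labels = np.repeat(["T1", "T2", "T3", "T4"], 500)  # 500 blocks per treatment
train, val, test = stratified_split(labels)
print(len(train), len(val), len(test))  # 1600 200 200

iters_per_epoch = len(train) // 64        # 1600 / 64 = 25 iterations per epoch
total_iters = 30 * iters_per_epoch        # 30 epochs -> 750 iterations
val_every_epochs = 50 / iters_per_epoch   # validation every 50 iterations = every 2 epochs
```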

The performance of each model was evaluated, and the two models were compared, using the following metrics: confusion matrix, area under the curve (AUC), accuracy, precision, recall (sensitivity), specificity, F1-score, and G-mean. The basic concepts of these metrics are presented as described by Shaheed et al. (2023). During training, accuracy and loss were the metrics monitored to optimize the models.

A confusion matrix is an n × n table that records the predictions made by a classifier with n classes, comparing the predicted classes with the actual classes. Treating each class in turn as the positive class, it yields the counts of True Positives (TP), True Negatives (TN), False Positives (FP), and False Negatives (FN).
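As a minimal sketch, the matrix and the one-vs-rest counts for a single class can be computed as follows. The convention of rows as actual classes and columns as predicted classes is an assumption (it is also the Matlab© `confusionchart` default), and the labels are hypothetical, with T1..T4 encoded as 0..3.

```python
import numpy as np

def confusion_matrix(actual, predicted, n_classes):
    """n x n matrix with rows = actual class and columns = predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for a, p in zip(actual, predicted):
        cm[a, p] += 1
    return cm

actual    = [0, 0, 1, 1, 2, 2, 3, 3]   # hypothetical labels (T1..T4 -> 0..3)
predicted = [0, 1, 1, 2, 2, 2, 3, 3]
cm = confusion_matrix(actual, predicted, 4)

k = 1                                  # one-vs-rest counts for class T2
tp = cm[k, k]
fn = cm[k, :].sum() - tp               # actual T2 predicted as another class
fp = cm[:, k].sum() - tp               # other classes predicted as T2
tn = cm.sum() - tp - fn - fp
print(tp, fn, fp, tn)  # 1 1 1 5
```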

The area under the receiver operating characteristic curve (AUC) quantifies a classifier's ability to distinguish positive from negative cases: an AUC of 0.5 corresponds to a random classifier, while values close to 1 indicate a highly sensitive and specific classifier (Iacovacci et al., 2023).
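The AUC has an equivalent probabilistic reading: it is the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one (ties counted as one half). A minimal NumPy sketch of that formulation follows. For a multiclass problem, it assumes a one-vs-rest setting in which the score is the softmax probability of the class of interest and the positives are the samples that actually belong to it; the scores below are hypothetical.

```python
import numpy as np

def auc_binary(scores, is_positive) -> float:
    """AUC as P(score of a random positive > score of a random negative), ties = 0.5."""
    scores = np.asarray(scores, dtype=float)
    is_positive = np.asarray(is_positive, dtype=bool)
    pos, neg = scores[is_positive], scores[~is_positive]
    greater = (pos[:, None] > neg[None, :]).sum()   # correctly ordered pairs
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))

# Hypothetical probabilities of class T4 and whether each sample is truly T4.
print(auc_binary([0.9, 0.8, 0.4, 0.3], [1, 0, 1, 0]))  # 0.75
print(auc_binary([0.5, 0.5, 0.5, 0.5], [1, 1, 0, 0]))  # 0.5, no discrimination
```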

Accuracy (Acc) measures the overall performance of the classifier as the proportion of correctly classified samples relative to the total number of samples.

Precision (P) is a metric that measures the proportion of samples predicted to be in the positive category that are actually in the positive category.

The fraction of samples that actually belong to the positive class and are correctly recognized as positive by the classifier is measured by recall (R), also known as sensitivity or true positive rate. It evaluates the classifier's ability to identify all positive samples.

Specificity (Sp), or true negative rate, indicates the classifier's ability to correctly identify negative samples, that is, the fraction of samples that do not belong to the positive class and are correctly recognized as negative. It is an important metric for tasks where the focus is on minimizing false positives.

F1-score is the harmonic mean of precision and recall, providing a single assessment of classifier effectiveness. It takes into account both precision (ability to correctly identify positive samples) and recall (ability to include all positive samples). It is especially useful when precision and recall conflict, as in unbalanced datasets, or when there is a trade-off between minimizing false positives and false negatives.

G-mean is a metric that reasonably assesses the overall classification performance on unbalanced datasets (Yan et al., 2024).
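The metrics defined above can all be derived from the one-vs-rest counts of a confusion matrix. The sketch below assumes rows hold the actual classes and columns the predicted ones, and takes G-mean per class as the square root of recall × specificity; this is an assumption, since the text does not give its formula, and multiclass variants based on the geometric mean of all class recalls also exist. The matrix is hypothetical.

```python
import numpy as np

def classification_metrics(cm) -> dict:
    """Per-class (one-vs-rest) metrics and overall accuracy from a confusion matrix."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp           # actual class k, predicted as another class
    fp = cm.sum(axis=0) - tp           # other classes predicted as class k
    tn = total - tp - fn - fp
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)            # sensitivity / true positive rate
    specificity = tn / (tn + fp)       # true negative rate
    return {
        "accuracy": (tp + tn) / total,  # per-class, one-vs-rest
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": 2 * precision * recall / (precision + recall),  # harmonic mean
        "g_mean": np.sqrt(recall * specificity),
        "overall_accuracy": np.trace(cm) / total,
    }

cm = [[1, 1, 0, 0],   # hypothetical matrix, rows = actual T1..T4
      [0, 1, 1, 0],
      [0, 0, 2, 0],
      [0, 0, 0, 2]]
m = classification_metrics(cm)
print(m["overall_accuracy"])  # 0.75
```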

Following Everitt & Dunn (2001), the Kappa coefficient (K) was used in this study to measure the agreement between predicted and actual classes beyond that expected by chance. Kappa values range up to 1.0, and the image classification was rated as follows: 0-0.20 = unreliable; 0.21-0.40 = low; 0.41-0.60 = moderate; 0.61-0.80 = reliable; and 0.81-1.0 = highly reliable. K was computed as:

$$K = \frac{n\sum_{i=1}^{q} N_{ii} - \sum_{i=1}^{q} N_{i+}\,N_{+i}}{n^{2} - \sum_{i=1}^{q} N_{i+}\,N_{+i}}$$

Where:

- q is the number of classes;
- n is the total number of classified samples (image blocks);
- N_ii are the diagonal elements of the confusion matrix;
- N_i+ is the marginal sum of row i of the confusion matrix; and
- N_+i is the marginal sum of column i of the confusion matrix.
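With the symbols defined above, the coefficient is K = (n·ΣN_ii - ΣN_i+·N_+i) / (n² - ΣN_i+·N_+i), summing over the q classes. A minimal NumPy sketch of that formula follows; the confusion matrices in the example are hypothetical, not the matrices obtained in this study.

```python
import numpy as np

def kappa(cm) -> float:
    """Kappa coefficient from a q x q confusion matrix (rows = actual, columns = predicted)."""
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()                                      # total classified samples
    agreement = np.trace(cm)                          # sum of N_ii
    chance = (cm.sum(axis=1) * cm.sum(axis=0)).sum()  # sum of N_i+ * N_+i
    return (n * agreement - chance) / (n ** 2 - chance)

cm = [[1, 1, 0, 0],
      [0, 1, 1, 0],
      [0, 0, 2, 0],
      [0, 0, 0, 2]]
print(round(kappa(cm), 3))  # 0.667: 75% observed agreement vs 25% expected by chance
```

The same value follows from the form (p_o - p_e) / (1 - p_e), with observed agreement p_o = 6/8 and chance agreement p_e = 16/64.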

RESULTS AND DISCUSSION

The leaf nitrogen contents of the BRS Estilo cultivar under the four nitrogen doses are shown in Figure 4. Mean values of 37.75, 46.68 and 51.48 g kg-1 were obtained with 0, 25 and 50 kg ha-1 of N, respectively. Applying 75 kg ha-1 of N resulted in 52.25 g kg-1, only slightly higher than the value obtained with the next lower dose of 50 kg ha-1.

Boxplot of the leaf nitrogen content of the cultivar BRS Estilo submitted to nitrogen application.

Nunes et al. (2021) reported values similar to those obtained in this study: without nitrogen fertilization (0 kg ha-1), a leaf N content of 34.3 g kg-1 was observed for the bean cultivar IAC Sintonia.

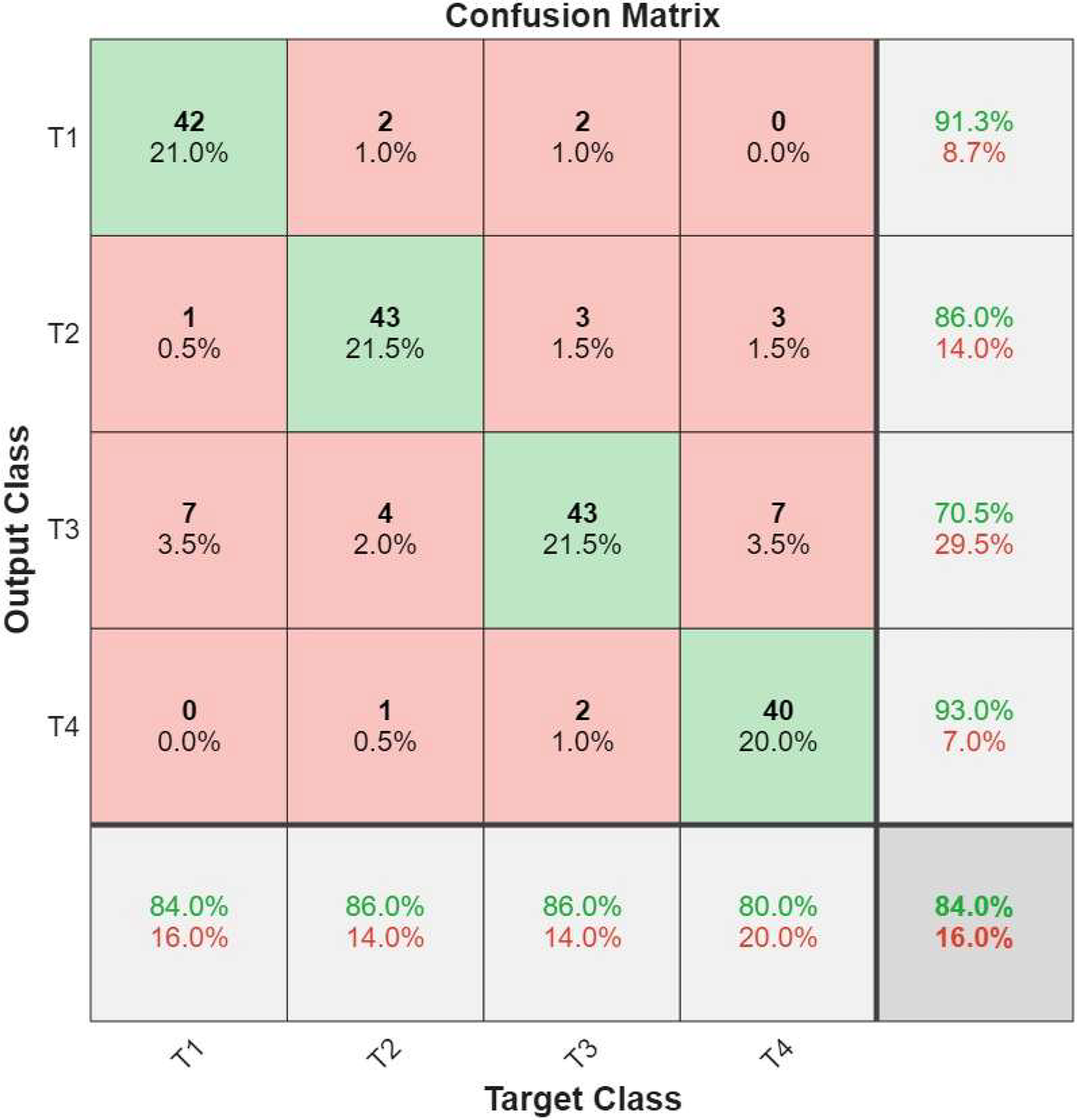

The classification rate using the CNN was 82%, with commission errors ranging from 2.5 to 33.8% (Figure 5), a reliable performance with the tested hyperparameters that makes this model attractive for the task. ResNet-50 performed better, with a classification rate of 84% and commission errors between 7 and 29.5%, classifying the leaf nitrogen levels of common bean more effectively (Figure 6). This advantage is likely related to its ability to extract patterns from more complex spectral features (Reddy et al., 2023). For both models, the lowest commission errors were found for class T4, probably due to the more intense green color typical of leaves with high nitrogen content.
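The commission error of a class is the share of samples assigned to that class that actually belong to other classes, i.e., 100 × (1 - precision). A minimal sketch, assuming rows hold the actual classes and columns the predicted ones; the matrix is hypothetical (50 test blocks per class) and is not taken from Figures 5 or 6.

```python
import numpy as np

def commission_errors(cm) -> np.ndarray:
    """Per-class commission error (%) from a confusion matrix (rows = actual, cols = predicted)."""
    cm = np.asarray(cm, dtype=float)
    predicted_totals = cm.sum(axis=0)                # samples assigned to each class
    wrongly_included = predicted_totals - np.diag(cm)
    return 100 * wrongly_included / predicted_totals

cm = np.array([[40, 8, 2, 0],    # hypothetical counts, rows = actual T1..T4
               [10, 34, 6, 0],
               [1, 7, 40, 2],
               [0, 1, 3, 46]])
print(commission_errors(cm).round(1))
```

Here class T4 would show the lowest commission error (2 of the 48 blocks predicted as T4 are wrong), while T2 would show the highest (16 of 50).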

Confusion matrix of the ResNet-50 model used to classify the leaf N content of the bean crop.

Kou et al. (2022), who estimated N levels from UAV-acquired RGB images combined with a CNN, obtained good results but reported that the model needed improvement for this task.

Yi et al. (2020) investigated the potential of five convolutional neural networks (AlexNet, VGG-16, ResNet-101, DenseNet-161 and SqueezeNet1_1) for classifying N, P and K status in the potato crop from a set of 5648 RGB images and found that all trained models achieved accuracies above 87%, with DenseNet-161 performing best (98.4%) and ResNet-101 ranking second (94.9%).

Table 2 presents the metrics used to assess the performance of both architectures in classifying the images obtained under the different N doses (T1 = 0 kg ha-1; T2 = 25 kg ha-1; T3 = 50 kg ha-1; T4 = 75 kg ha-1) applied at phenological stage V4.

Performance of CNN and ResNet-50 models in the classification of images of bean leaves submitted to nitrogen fertilization.

Based on the Kappa coefficient, both models were considered reliable for classifying common bean leaf nitrogen, with ResNet-50 (0.787) slightly outperforming the CNN (0.761).

Overall, both models exhibited comparable AUC, accuracy, and specificity values across the four classes. The F1-score, which integrates precision and recall, provides a more comprehensive assessment of model performance (Chen et al., 2024). In this study, the F1-scores of all four classes with the ResNet-50 architecture exceeded 0.775, demonstrating the model's strong ability to identify and differentiate between classes. The recall for class T2 (0.680) with the CNN model was considerably lower than for the other classes (T1, T3, and T4), indicating greater difficulty in classifying this class. This discrepancy may be attributed to noise introduced during image acquisition; various types of noise can be introduced during image capture and degrade model performance (Bezabh et al., 2023).

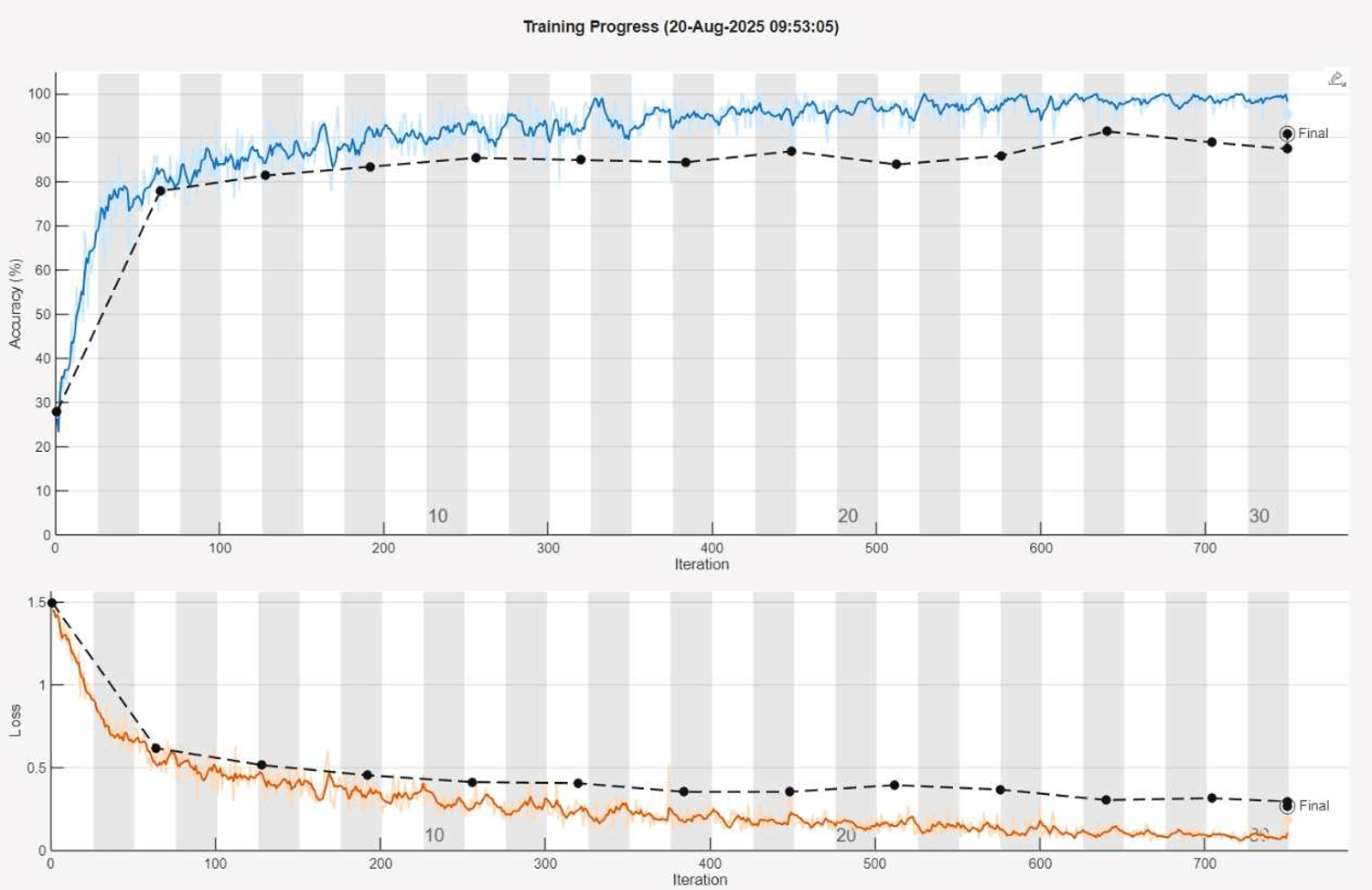

Figures 7 and 8 show the training progress of the CNN and ResNet-50, respectively, with accuracy and loss as evaluation metrics. In the first four training epochs, the validation accuracy of the CNN varied between 25% and 72%; the loss was higher in the first two epochs and decreased gradually after the third. The final validation accuracy of the CNN during training was 76.5%.

For ResNet-50, training accuracy increased linearly between the first and third epochs, from 28% to 79%, while the training loss dropped from 1.5 in the first epoch to 0.62 in the third. The final validation accuracy of ResNet-50 during training was 91%.

CONCLUSIONS

This study evaluated the potential of two deep learning architectures using 2D RGB digital images to classify the leaf nitrogen concentration of the bean crop. Using a total of 2000 images, ResNet-50 outperformed the CNN by 2 percentage points in overall test accuracy (84% versus 82%). The advantage of ResNet-50 was even more pronounced in the final validation accuracy during training (91% versus 76.5% for the CNN). The use of images combined with deep learning can be a promising alternative to slow laboratory analyses, optimizing the estimation of leaf N and enabling quick intervention by the producer to achieve higher productivity and less fertilizer waste. Future work is encouraged to develop mobile devices capable of processing images with deep learning to classify the nutritional condition of plants in the field.

REFERENCES

Alvares, C. A., Stape, J. L., Sentelhas, P. C., de Moraes Gonçalves, J. L., & Sparovek, G. (2013). Köppen's climate classification map for Brazil. Meteorologische Zeitschrift, 22 (6), 711-728. https://doi.org/10.1127/0941-2948/2013/0507

Arnob, A. S., Kausik, A. K., Islam, Z., Khan, R., & Rashid, A. B. (2025). Comparative result analysis of cauliflower disease classification based on deep learning approach VGG16, inception v3, ResNet, and a custom CNN model. Hybrid Advances, 10. https://doi.org/10.1016/j.hybadv.2025.100440

Askr, H. H., El-Dosuky, M., Darwish, A., & Hassanien, A. E. (2024). Explainable ResNet50 learning model based on copula entropy for cotton plant disease prediction. Applied Soft Computing, 164, 112009.

Bezabh, Y. A., Salau, A. O., Abuhayi, B. M., Mussa, A. A., & Ayalew, A. M. (2023). CPD-CCNN: Classification of pepper disease using a concatenation of convolutional neural network models. Scientific Reports. https://doi.org/10.1038/s41598-023-42843-2

Bohlol, P., Hosseinpour, S., & Soltani Firouz, M. (2025). Improved food recognition using a refined ResNet50 architecture with improved fully connected layers. Current Research in Food Science, 10, Article 101005. https://doi.org/10.1016/j.crfs.2025.101005

Cantarella, H., Quaggio, J. A., Júnior, D. M., Boaretto, R. M., & Raij, B. van. (2022). Boletim 100: recomendações de adubação e calagem para o Estado de São Paulo.

Chen, P., Dai, J., Zhang, G., Hou, W., Mu, Z., & Cao, Y. (2024). Diagnosis of Cotton Nitrogen Nutrient Levels Using Ensemble MobileNetV2FC, ResNet101FC, and DenseNet121FC. Agriculture, 14 (4), 525. https://doi.org/10.3390/agriculture14040525

CONAB. (2023). Acompanhamento da safra brasileira de grãos. Safra 2023/24, 2º levantamento. COMPANHIA NACIONAL DE ABASTECIMENTO. https://www.conab.gov.br/info-agro/safras/graos/boletim-da-safra-de-graos

De Ron, A. M., & Rodiño, A. P. (2024). Phaseolus vulgaris (Beans). In Reference Module in Life Sciences. Elsevier. https://doi.org/10.1016/B978-0-12-822563-9.00181-5

Ding, W., Abdel-Basset, M., Alrashdi, I., & Hawash, H. (2024). Next generation of computer vision for plant disease monitoring in precision agriculture: A contemporary survey, taxonomy, experiments, and future direction. Information Sciences, 665, 120338. https://doi.org/10.1016/j.ins.2024.120338

Everitt, B. S., & Dunn, G. (2001). Applied multivariate data analysis. London: Arnold.

FAOSTAT. (2023). Crops. https://www.fao.org/faostat/en/#data/QCL

Ghazal, S., Munir, A., & Qureshi, W. S. (2024). Computer vision in smart agriculture and precision farming: Techniques and applications. Artificial Intelligence in Agriculture, 13, 64-83. https://doi.org/10.1016/j.aiia.2024.06.004

Hassan, E., Hossain, M. S., Saber, A., Elmougy, S., Ghoneim, A., & Muhammad, G. (2024). A quantum convolutional network and ResNet (50)-based classification architecture for the MNIST medical dataset. Biomedical Signal Processing and Control, 87, 105560. https://doi.org/10.1016/j.bspc.2023.105560

He, K., Zhang, X., Ren, S., & Sun, J. (2016). Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 770-778). IEEE. https://doi.org/10.1109/CVPR.2016.90

Iacovacci, J., Palorini, F., Cicchetti, A., Fiorino, C., & Rancati, T. (2023). Dependence of the AUC of NTCP models on the observational dose-range highlights cautions in comparison of discriminative performance. Physica Medica, 113, 102654. https://doi.org/10.1016/j.ejmp.2023.102654

Kawasaki, Y., Uga, H., Kagiwada, S., & Iyatomi, H. (2015). Basic Study of Automated Diagnosis of Viral Plant Diseases Using Convolutional Neural Networks (pp. 638-645). https://doi.org/10.1007/978-3-319-27863-6_59

Luo, Y., Cai, X., Qi, J., Guo, D., & Che, W. (2023). FPGA-accelerated CNN for real-time plant disease identification. Computers and Electronics in Agriculture, 207, 107715. https://doi.org/10.1016/j.compag.2023.107715

Lynch, J. M., & Barbano, D. M. (1999). Kjeldahl Nitrogen Analysis as a Reference Method for Protein Determination in Dairy Products. Journal of AOAC INTERNATIONAL, 82 (6), 1389-1398. https://doi.org/10.1093/jaoac/82.6.1389

Muoni, T., Barnes, A. P., Öborn, I., Watson, C. A., Bergkvist, G., Shiluli, M., & Duncan, A. J. (2019). Farmer perceptions of legumes and their functions in smallholder farming systems in east Africa. International Journal of Agricultural Sustainability, 17 (3), 205-218. https://doi.org/10.1080/14735903.2019.1609166

Nithya, V. P., Mohanasundaram, N., & Santhosh, R. (2024). An early detection and classification of Alzheimer's disease framework based on ResNet-50. Current Medical Imaging, 20, e250823220361. https://doi.org/10.2174/1573405620666230825113344

Nunes, H. D., Leal, F. T., Mingotte, F. L. C., Damião, V. D., Junior, P. A. C., & Lemos, L. B. (2021). Agronomic performance, quality and nitrogen use efficiency by common bean cultivars. Journal of Plant Nutrition, 44 (7), 995-1009. https://doi.org/10.1080/01904167.2020.1849292

Oppenheim, D., & Shani, G. (2017). Potato Disease Classification Using Convolution Neural Networks. Advances in Animal Biosciences, 8 (2), 244-249. https://doi.org/10.1017/S2040470017001376

Ramos-Ospina, M., Gomez, L., Trujillo, C., & Marulanda-Tobón, A. (2023). Deep Transfer Learning for Image Classification of Phosphorus Nutrition States in Individual Maize Leaves. Electronics, 13 (1), 16. https://doi.org/10.3390/electronics13010016

Razavi, M., Mavaddati, S., & Koohi, H. (2024). ResNet deep models and transfer learning technique for classification and quality detection of rice cultivars. Expert Systems with Applications, 247, 123276. https://doi.org/10.1016/j.eswa.2024.123276

Reddy, S. R. G., Varma, G. P. S., & Davuluri, R. L. (2023). Resnet-based modified red deer optimization with DLCNN classifier for plant disease identification and classification. Computers and Electrical Engineering, 105, 108492. https://doi.org/10.1016/J.COMPELECENG.2022.108492

Regazzo, J. R., Silva, T. L. d., Tavares, M. S., Sardinha, E. J. d. S., Figueiredo, C. G., Couto, J. L., Gomes, T. M., Tech, A. R. B., & Baesso, M. M. (2024). Performance of neural networks in the prediction of nitrogen nutrition in strawberry plants. AgriEngineering, 6 (2), 1760-1770. https://doi.org/10.3390/agriengineering6020102

Shaheed, K., Qureshi, I., Abbas, F., Jabbar, S., Abbas, Q., Ahmad, H., & Sajid, M. Z. (2023). EfficientRMT-Net-An Efficient ResNet-50 and Vision Transformers Approach for Classifying Potato Plant Leaf Diseases. Sensors, 23 (23), 9516. https://doi.org/10.3390/s23239516

Shin, J., Chang, Y. K., Heung, B., Nguyen-Quang, T., Price, G. W., & Al-Mallahi, A. (2021). A deep learning approach for RGB image-based powdery mildew disease detection on strawberry leaves. Computers and Electronics in Agriculture, 183, 106042. https://doi.org/10.1016/J.COMPAG.2021.106042

Singh, V., Sharma, N., & Singh, S. (2020). A review of imaging techniques for plant disease detection. Artificial Intelligence in Agriculture, 4, 229-242. https://doi.org/10.1016/j.aiia.2020.10.002

Sun, W., Fu, B., & Zhang, Z. (2023). Maize Nitrogen Grading Estimation Method Based on UAV Images and an Improved Shufflenet Network. Agronomy, 13 (8), 1974. https://doi.org/10.3390/agronomy13081974

Taye, M. M. (2023). Theoretical Understanding of Convolutional Neural Network: Concepts, Architectures, Applications, Future Directions. Computation, 11 (3), 52. https://doi.org/10.3390/computation11030052

Teng, J., Zhang, D., Lee, D. J., & Chou, Y. (2019). Recognition of Chinese food using convolutional neural network. Multimedia Tools and Applications, 78 (9), 11155-11172. https://doi.org/10.1007/s11042-018-6695-9

Tran, T.-T., Choi, J.-W., Le, T.-T., & Kim, J.-W. (2019). A Comparative Study of Deep CNN in Forecasting and Classifying the Macronutrient Deficiencies on Development of Tomato Plant. Applied Sciences, 9 (8), 1601. https://doi.org/10.3390/app9081601

Wang, J., Zhang, B., Yin, D., & Ouyang, J. (2025). Distribution network fault identification method based on multimodal ResNet with recorded waveform-driven feature extraction. Energy Reports, 13, 90-104. https://doi.org/10.1016/j.egyr.2024.12.012

Wang, T., Chen, B., Zhang, Z., Li, H., & Zhang, M. (2022). Applications of machine vision in agricultural robot navigation: A review. Computers and Electronics in Agriculture, 198, 107085. https://doi.org/10.1016/j.compag.2022.107085

Yamashita, R., Nishio, M., Do, R. K. G., & Togashi, K. (2018). Convolutional neural networks: an overview and application in radiology. Insights into Imaging, 9 (4), 611-629. https://doi.org/10.1007/s13244-018-0639-9

Yan, L., Wang, M., Zhou, H., Liu, Y., & Yu, B. (2024). AntiCVP-Deep: Identify anti-coronavirus peptides between different negative datasets based on self-attention and deep learning. Biomedical Signal Processing and Control, 90, 105909. https://doi.org/10.1016/j.bspc.2023.105909

Yi, J., Krusenbaum, L., Unger, P., Hüging, H., Seidel, S. J., Schaaf, G., & Gall, J. (2020). Deep Learning for Non-Invasive Diagnosis of Nutrient Deficiencies in Sugar Beet Using RGB Images. Sensors, 20 (20), 5893. https://doi.org/10.3390/s20205893

Yu, H., Yang, F., Hu, C., Yang, X., Zheng, A., Wang, Y., Tang, Y., He, Y., & Lv, M. (2023). Production status and research advancement on root rot disease of faba bean (Vicia faba L.) in China. Frontiers in Plant Science, 14. https://doi.org/10.3389/fpls.2023.1165658

Zhou, Y., Wang, Z., Zheng, S., Zhou, L., Dai, L., Luo, H., Zhang, Z., & Sui, M. (2024). Optimization of automated garbage recognition model based on ResNet-50 and weakly supervised CNN for sustainable urban development. Alexandria Engineering Journal, 108, 415-427. https://doi.org/10.1016/j.aej.2024.07.066

DATA AVAILABILITY STATEMENT

The datasets generated and/or analyzed during the current study are not publicly available because they are being used for doctoral research and related publications, but they are available from the corresponding author on reasonable request.

FUNDING

This research was funded by the Coordination for the Improvement of Higher Education Personnel (CAPES), Brazil (Funding Code 001).

Edited by

Area Editor:

Tatiana Fernanda Canata

Publication Dates

Publication in this collection

06 Oct 2025

Date of issue

2025

History

Received

19 Feb 2025

Accepted

22 Aug 2025

LEAF NITROGEN CLASSIFICATION OF COMMON BEAN (PHASEOLUS VULGARIS L.) USING DEEP LEARNING MODELS AND IMAGES