PART I - ARTICLES

The Psychology of Risk¹

¹ This paper is based on SLOVIC, P. "Trust, emotion, sex, politics, and science: Surveying the risk-assessment battlefield." In: BAZERMAN, M. H. et al. (eds.). Environment, ethics, and behavior. San Francisco: New Lexington, 1997. p. 277-313.

Psychology of Risk (Portuguese title: Psicologia do risco)

Paul Slovic

Psychologist, PhD from the University of Michigan. Professor in the Psychology Department of the University of Oregon, USA. President of Decision Research. Member of the Society for Risk Analysis and the American Psychological Association. Address: Decision Research, Psychology Department, University of Oregon, Eugene, Oregon 97403. E-mail: pslovic@uoregon.edu

ABSTRACT

The essay contrasts the scientific approach to analyzing and making decisions about risk with the ways that ordinary people perceive and respond to risk. It highlights the importance of trust as a determinant of perceived risk. It describes relatively new research on "risk as feelings" and the "Affect Heuristic." Finally, it reflects on the importance of this work for risk communication and concludes with some observations about human rationality and irrationality in the face of risk.

Keywords: Evaluation of Risk; Risk Perception; Human Judgment and Decision Making.

RESUMO (Portuguese abstract)

This essay compares the scientific approach to analyzing and making decisions about risk with the way ordinary people perceive and respond to risk. It addresses the importance of trust as a determinant of perceived risk and describes new research on "risk as feelings," the "Affect Heuristic," and the relevance of this new work. The concept of risk is extremely complex because, in addition to scientific factors, it is intrinsically bound up with social elements and with how risk is perceived. Risks involve many uncertainties that are difficult to measure. Another important issue is the social amplification of risk. Trust is the key word for problems of risk communication. The essay concludes with the theme of human rationality and irrationality in the face of risk.

Keywords: Risk assessment; Risk perception; Judgment and decision making.

Introduction

Ironically, as our society and other industrialized nations have expended great effort to make life safer and healthier, many in the public have become more, rather than less, concerned about risk. These individuals see themselves as exposed to more serious risks than were faced by people in the past, and they believe that this situation is getting worse rather than better. Nuclear and chemical technologies (except for medicines) have been stigmatized by being perceived as entailing unnaturally great risks (Gregory et al., 1995). As a result, it has been difficult, if not impossible, to find host sites for disposing of high-level or low-level radioactive wastes, or for incinerators, landfills, and other chemical facilities.

Public perceptions of risk have been found to determine the priorities and legislative agendas of regulatory bodies such as the Environmental Protection Agency (EPA), much to the distress of agency technical experts who argue that other hazards deserve higher priority. The bulk of EPA's budget in recent years has gone to hazardous waste primarily because the public believes that the cleanup of Superfund sites is one of the most serious environmental priorities for the country. Hazards such as indoor air pollution are considered more serious health risks by experts but are not perceived that way by the public (United States, 1987).

Great disparities in monetary expenditures designed to prolong life, as shown by Tengs et al. (1995), may also be traced to public perceptions of risk. Such discrepancies are seen as irrational by many harsh critics of public perceptions. These critics draw a sharp dichotomy between the experts and the public. Experts are seen as purveying risk assessments, characterized as objective, analytic, wise, and rational, based on the real risks. In contrast, the public is seen to rely on perceptions of risk that are subjective, often hypothetical, emotional, foolish, and irrational (see, e.g., Covello et al., 1983; DuPont, 1980). Weiner (1993) defends this dichotomy, arguing that "This separation of reality and perception is pervasive in a technically sophisticated society, and serves to achieve a necessary emotional distance . . ." (p. 495).

In sum, polarized views, controversy, and overt conflict have become pervasive within risk assessment and risk management. A desperate search for salvation through risk-communication efforts began in the mid-1980s; yet, despite some localized successes, this effort has not stemmed the major conflicts or reduced much of the dissatisfaction with risk management. This dissatisfaction can be traced, in part, to a failure to appreciate the complex and socially determined nature of the concept "risk." In the remainder of this paper, I shall describe several streams of research that demonstrate this complexity and point toward the need for new definitions of risk and new approaches to risk management.

The Subjective and Value-Laden Nature of Risk Assessment

Attempts to manage risk must confront the question: "What is risk?" The dominant conception views risk as "the chance of injury, damage, or loss" (Webster, 1983). The probabilities and consequences of adverse events are assumed to be produced by physical and natural processes in ways that can be objectively quantified by risk assessment. Much social science analysis rejects this notion, arguing instead that risk is inherently subjective (Funtowicz and Ravetz, 1992; Krimsky and Golding, 1992; Otway, 1992; Pidgeon et al., 1992; Slovic, 1992; Wynne, 1992). In this view, risk does not exist "out there," independent of our minds and cultures, waiting to be measured. Instead, human beings have invented the concept to help them understand and cope with the dangers and uncertainties of life. Although these dangers are real, there is no such thing as "real risk" or "objective risk." The nuclear engineer's probabilistic risk estimate for a nuclear accident or the toxicologist's quantitative estimate of a chemical's carcinogenic risk are both based on theoretical models, whose structure is subjective and assumption-laden, and whose inputs are dependent on judgment. As we shall see, nonscientists have their own models, assumptions, and subjective assessment techniques (intuitive risk assessments), which are sometimes very different from the scientists' models.

One way in which subjectivity permeates risk assessments is in the dependence of such assessments on judgments at every stage of the process, from the initial structuring of a risk problem to deciding which endpoints or consequences to include in the analysis, identifying and estimating exposures, choosing dose-response relationships, and so on. For example, even the apparently simple task of choosing a risk measure for a well-defined endpoint such as human fatalities is surprisingly complex and judgmental. Table 1 shows a few of the many different ways that fatality risks can be measured. How should we decide which measure to use when planning a risk assessment, recognizing that the choice is likely to make a big difference in how the risk is perceived and evaluated?

An example taken from Crouch and Wilson (1982) demonstrates how the choice of one measure or another can make a technology look either more or less risky. Between 1950 and 1970, coal mines became much less risky in terms of deaths from accidents per ton of coal, but they became marginally riskier in terms of deaths from accidents per employee. Which measure one thinks more appropriate for decision making depends on one's point of view. From a national point of view, given that a certain amount of coal has to be obtained to provide fuel, deaths per million tons of coal is the more appropriate measure of risk, whereas from a labor leader's point of view, deaths per thousand persons employed may be more relevant.
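The reversal is easy to reproduce with arithmetic. The Python sketch below uses invented figures (they are not Crouch and Wilson's data) in which output per worker rises sharply between the two years; the function name `risk_measures` is hypothetical. The same fatality count, divided by two different denominators, yields opposite trends.

```python
# Invented figures for illustration only (NOT Crouch and Wilson's data).
coal = {
    1950: {"deaths": 640, "million_tons": 500.0, "thousand_employees": 400.0},
    1970: {"deaths": 260, "million_tons": 600.0, "thousand_employees": 160.0},
}


def risk_measures(deaths: int, million_tons: float, thousand_employees: float) -> dict:
    """Summarize one fatality count with two alternative risk measures."""
    return {
        "deaths_per_million_tons": deaths / million_tons,
        "deaths_per_thousand_employees": deaths / thousand_employees,
    }


for year, figures in coal.items():
    measures = risk_measures(**figures)
    print(year, {name: round(value, 3) for name, value in measures.items()})
```

With these hypothetical numbers, deaths per million tons fall from 1.28 to about 0.43, while deaths per thousand employees rise from 1.6 to 1.625, so the national and the labor perspectives would draw opposite conclusions from identical data.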

Each way of summarizing deaths embodies its own set of values (National Research Council, 1989). For example, "reduction in life expectancy" treats deaths of young people as more important than deaths of older people, who have less life expectancy to lose. Simply counting fatalities treats deaths of the old and young as equivalent; it also treats as equivalent deaths that come immediately after mishaps and deaths that follow painful and debilitating disease. Using "number of deaths" as the summary indicator of risk implies that it is as important to prevent deaths of people who engage in an activity by choice and have been benefiting from that activity as it is to protect those who are exposed to a hazard involuntarily and get no benefit from it. One can easily imagine a range of arguments to justify different kinds of unequal weightings for different kinds of deaths, but to arrive at any selection requires a value judgment concerning which deaths one considers most undesirable. To treat the deaths as equal also involves a value judgment.
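To make the point concrete, the sketch below ranks two hypothetical hazards under two summary measures. It assumes a single fixed life expectancy of 80 years and invented ages at death; the hazard names are placeholders. Counting deaths and counting life-years lost rank the hazards in opposite orders, so choosing either measure already embodies a value judgment.

```python
LIFE_EXPECTANCY = 80  # assumed, identical for everyone, for simplicity

# Hypothetical hazards, each described by the ages at death of its victims.
hazards = {
    "hazard_A": [75] * 100,  # many deaths, all among older people
    "hazard_B": [25] * 60,   # fewer deaths, all among young people
}


def number_of_deaths(ages: list) -> int:
    """Treats every death as equivalent, regardless of age."""
    return len(ages)


def life_years_lost(ages: list) -> int:
    """Weights each death by the remaining life expectancy it removes."""
    return sum(max(LIFE_EXPECTANCY - age, 0) for age in ages)


worst_by_count = max(hazards, key=lambda h: number_of_deaths(hazards[h]))
worst_by_life_years = max(hazards, key=lambda h: life_years_lost(hazards[h]))
print("worst by number of deaths:", worst_by_count)
print("worst by life-years lost:", worst_by_life_years)
```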

The Multidimensionality of Risk

As will be shown in the next section, research has found that the public has a broad conception of risk, qualitative and complex, that incorporates considerations such as uncertainty, dread, catastrophic potential, controllability, equity, risk to future generations, and so forth, into the risk equation (Slovic, 1987). In contrast, experts' perceptions of risk are not closely related to these dimensions or the characteristics that underlie them. Instead, studies show that experts tend to see riskiness as synonymous with probability of harm or expected mortality, consistent with the ways that risks tend to be characterized in risk assessments (see, for example, Cohen, 1985). As a result of these different perspectives, many conflicts over "risk" may result from experts and laypeople having different definitions of the concept. In this light, it is not surprising that expert recitations of "risk statistics" often do little to change people's attitudes and perceptions.

There are legitimate, value-laden issues underlying the multiple dimensions of public risk perceptions, and these values need to be considered in risk-policy decisions. For example, is risk from cancer (a dreaded disease) worse than risk from auto accidents (not dreaded)? Is a risk imposed on a child more serious than a known risk accepted voluntarily by an adult? Are the deaths of 50 passengers in separate automobile accidents equivalent to the deaths of 50 passengers in one airplane crash? Is the risk from a polluted Superfund site worse if the site is located in a neighborhood that has a number of other hazardous facilities nearby? The difficult questions multiply when outcomes other than human health and safety are considered.

Studying Risk Perceptions: the psychometric paradigm

Just as the physical, chemical, and biological processes that contribute to risk or reduce risk can be studied scientifically, so can the processes affecting risk perceptions.

One broad strategy for studying perceived risk is to develop a taxonomy for hazards that can be used to understand and predict responses to their risks. A taxonomic scheme might explain, for example, people's extreme aversion to some hazards, their indifference to others, and the discrepancies between these reactions and experts' opinions. The most common approach to this goal has employed the psychometric paradigm (Fischhoff et al., 1978; Slovic et al., 1984), which uses psychophysical scaling and multivariate analysis techniques to produce quantitative representations of risk attitudes and perceptions. Within the psychometric paradigm, people make quantitative judgments about the current and desired riskiness of diverse hazards and the desired level of regulation of each. These judgments are then related to judgments about other properties, such as (i) the hazard's status on characteristics that have been hypothesized to account for risk perceptions and attitudes (for example, voluntariness, dread, knowledge, controllability), (ii) the benefits that each hazard provides to society, (iii) the number of deaths caused by the hazard in an average year, (iv) the number of deaths caused by the hazard in a disastrous year, and (v) the seriousness of each death from a particular hazard relative to a death due to other causes.
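The analysis step of the paradigm can be sketched in a few lines of Python. The example assumes invented ratings from three respondents for four hazards on two characteristics (dread and voluntariness, rated 1 to 7) plus a perceived-risk score (0 to 100). It averages ratings into one profile per hazard and then correlates each characteristic with perceived risk across hazards, using a hand-written Pearson correlation; actual studies use many more hazards, characteristics, and respondents.

```python
from math import sqrt
from statistics import fmean

# Hypothetical ratings: hazard -> list of (dread, voluntariness, perceived_risk), one per respondent.
ratings = {
    "nuclear power": [(7, 1, 90), (6, 2, 85), (7, 1, 95)],
    "x-rays": [(2, 5, 20), (3, 4, 25), (2, 6, 15)],
    "pesticides": [(5, 2, 60), (5, 3, 55), (6, 2, 65)],
    "bicycles": [(1, 7, 10), (2, 6, 15), (1, 7, 12)],
}


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = fmean(xs), fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Step 1: average over respondents to obtain one mean profile per hazard.
profiles = {h: [fmean(col) for col in zip(*rows)] for h, rows in ratings.items()}
dread, voluntary, risk = (list(col) for col in zip(*profiles.values()))

# Step 2: relate each characteristic to perceived risk across hazards.
r_dread = pearson(dread, risk)
r_voluntary = pearson(voluntary, risk)
print(f"dread vs perceived risk: {r_dread:+.2f}")
print(f"voluntariness vs perceived risk: {r_voluntary:+.2f}")
```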

Numerous studies carried out within the psychometric paradigm have shown that perceived risk is quantifiable and predictable. Psychometric techniques seem well suited for identifying similarities and differences among groups with regard to risk perceptions and attitudes (see Table 2). These studies have also shown that the concept "risk" means different things to different people. When experts judge risk, their responses correlate highly with technical estimates of annual fatalities. Laypeople can assess annual fatalities if they are asked to (and produce estimates somewhat like the technical estimates). However, their judgments of risk are related more to other hazard characteristics (for example, catastrophic potential, threat to future generations) and, as a result, tend to differ from their own (and experts') estimates of annual fatalities.

Various models have been advanced to represent the relationships between perceptions, behavior, and these qualitative characteristics of hazards. As we shall see, the picture that emerges from this work is both orderly and complex.

Factor-analytic representations. Psychometric studies have demonstrated that every hazard has a unique pattern of qualities that appears to be related to its perceived risk. Figure 1 shows the mean profiles across nine characteristic qualities of risk that emerged for nuclear power and medical x-rays in an early study (Fischhoff et al., 1978). Nuclear power was judged to have much higher risk than x-rays and to need much greater reduction in risk before it would become "safe enough." As the figure illustrates, nuclear power also had a much more negative profile across the various risk characteristics.

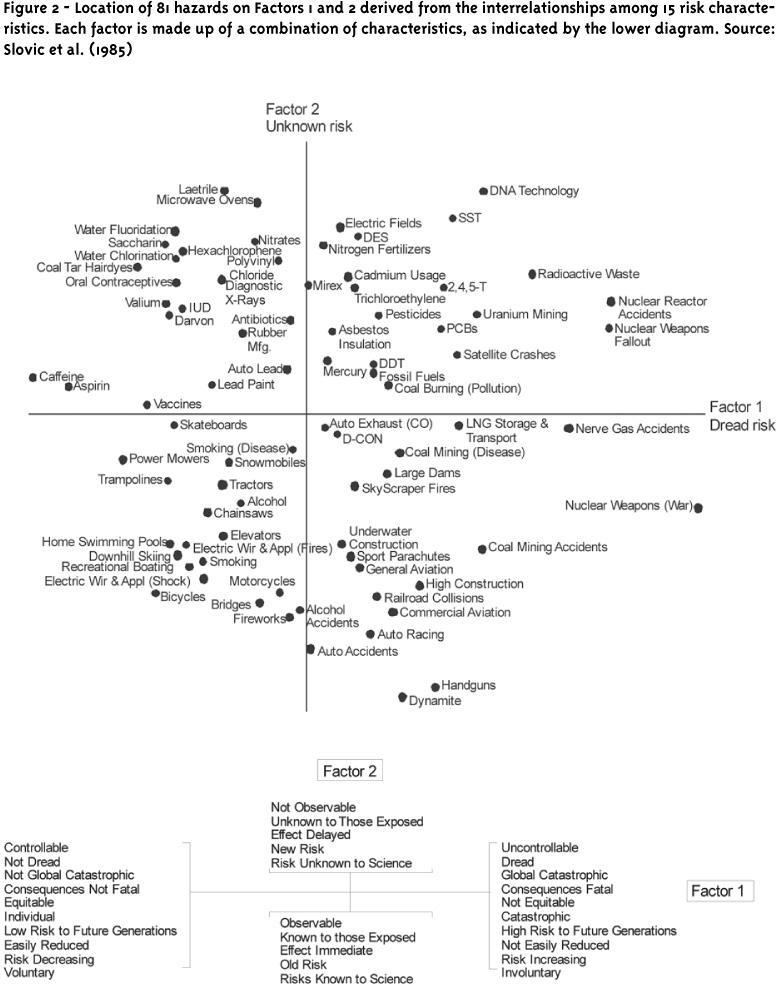

Many of the qualitative risk characteristics that make up a hazard's profile tend to be highly correlated with each other across a wide range of hazards. For example, hazards rated as "voluntary" tend also to be rated as "controllable" and "well-known"; hazards that appear to threaten future generations tend also to be seen as having catastrophic potential, and so on. Investigation of these interrelationships by means of factor analysis has indicated that the broader domain of characteristics can be condensed to a small set of higher-order characteristics or factors.
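A minimal sketch of the condensation step follows. It substitutes the simplest related technique, extracting the first principal component of the characteristics' correlation matrix by power iteration, for the factor-analytic procedures used in the original studies, and it runs on invented mean ratings for five hazards. Characteristics that load with the same sign move together and would be summarized by one higher-order factor.

```python
from math import sqrt

CHARACTERISTICS = ["voluntary", "controllable", "catastrophic", "future_generations"]

# Invented mean ratings (1-7) per hazard, in the order of CHARACTERISTICS.
profiles = {
    "nuclear power": [1, 1, 7, 7],
    "pesticides": [2, 3, 4, 5],
    "x-rays": [5, 5, 2, 3],
    "skiing": [7, 6, 1, 1],
    "automobiles": [6, 5, 2, 1],
}


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return cov / sqrt(sum((x - mx) ** 2 for x in xs) * sum((y - my) ** 2 for y in ys))


# One tuple of ratings per characteristic, then the characteristic-by-characteristic correlation matrix.
columns = list(zip(*profiles.values()))
corr = [[pearson(a, b) for b in columns] for a in columns]


def first_component(matrix, iterations=200):
    """Dominant eigenvector of a symmetric matrix via power iteration."""
    v = [1.0] + [0.0] * (len(matrix) - 1)
    for _ in range(iterations):
        w = [sum(row[j] * v[j] for j in range(len(v))) for row in matrix]
        norm = sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v


loadings = dict(zip(CHARACTERISTICS, first_component(corr)))
for name, value in loadings.items():
    print(f"{name:20s} {value:+.2f}")
```

The sign of a principal component is arbitrary, so only the pattern of signs is meaningful here: "voluntary" and "controllable" fall on one side, "catastrophic" and "future_generations" on the other.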

The factor space presented in Figure 2 has been replicated across groups of laypeople and experts judging large and diverse sets of hazards. Factor 1, labeled "dread risk," is defined at its high (right-hand) end by perceived lack of control, dread, catastrophic potential, fatal consequences, and the inequitable distribution of risks and benefits. Nuclear weapons and nuclear power score highest on the characteristics that make up this factor. Factor 2, labeled "unknown risk," is defined at its high end by hazards judged to be unobservable, unknown, new, and delayed in their manifestation of harm. Chemical and DNA technologies score particularly high on this factor. A third factor, reflecting the number of people exposed to the risk, has been obtained in several studies.

Research has shown that laypeople's risk perceptions and attitudes are closely related to the position of a hazard within the factor space. Most important is the factor "Dread Risk." The higher a hazard's score on this factor (i.e., the further to the right it appears in the space), the higher its perceived risk, the more people want to see its current risks reduced, and the more they want to see strict regulation employed to achieve the desired reduction in risk. In contrast, experts' perceptions of risk are not closely related to any of the various risk characteristics or factors derived from these characteristics. Instead, experts appear to see riskiness as synonymous with expected annual mortality (Slovic et al., 1979). As a result, many conflicts about risk may result from experts and laypeople having different definitions of the concept.

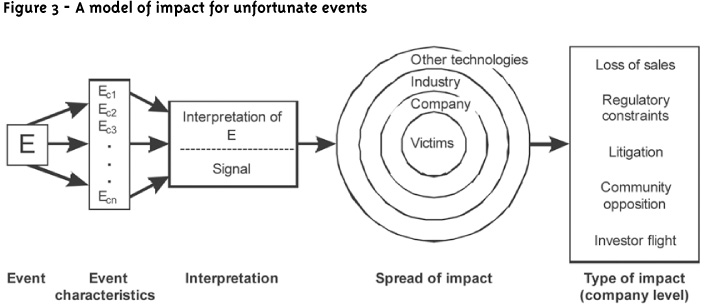

Perceptions Have Impacts: the social amplification of risk

Perceptions of risk play a key role in a process labeled social amplification of risk (Kasperson et al., 1988). Social amplification is triggered by the occurrence of an adverse event, which could be a major or minor accident, a discovery of pollution, an outbreak of disease, an incident of sabotage, and so on. Risk amplification reflects the fact that the adverse impacts of such an event sometimes extend far beyond the direct damages to victims and property and may result in massive indirect impacts such as litigation against a company or loss of sales, increased regulation of an industry, and so on. In some cases, all companies within an industry are affected, regardless of which company was responsible for the mishap. Thus, the event can be thought of as a stone dropped in a pond. The ripples spread outward, encompassing first the directly affected victims, then the responsible company or agency, and, in the extreme, reaching other companies, agencies, or industries (see Figure 3). Examples of events resulting in extreme higher-order impacts include the chemical manufacturing accident at Bhopal, India, the disastrous launch of the space shuttle Challenger, the nuclear-reactor accidents at Three Mile Island and Chernobyl, the adverse effects of the drug Thalidomide, the Exxon Valdez oil spill, the adulteration of Tylenol capsules with cyanide, and, most recently, the deaths of several individuals from anthrax. An important feature of social amplification is that the direct impacts need not be large to trigger major indirect impacts. The seven deaths due to the Tylenol tampering resulted in more than 125,000 stories in the print media alone and inflicted losses of more than one billion dollars upon the Johnson & Johnson Company, due to the damaged image of the product (Mitchell, 1989). The cost of dealing with the anthrax threat will be far greater than this.

It appears likely that multiple mechanisms contribute to the social amplification of risk. First, extensive media coverage of an event can contribute to heightened perceptions of risk and amplified impacts (Burns et al., 1990). Second, a particular hazard or mishap may enter into the agenda of social groups, or what Mazur (1981) terms the partisans, within the community or nation. The attack on the apple growth-regulator "Alar" by the Natural Resources Defense Council demonstrates the important impacts that special-interest groups can trigger (Moore, 1989).

A third mechanism of amplification arises out of the interpretation of unfortunate events as clues or signals regarding the magnitude of the risk and the adequacy of the risk-management process (Burns et al., 1990; Slovic, 1987). The informativeness or signal potential of a mishap, and thus its potential social impact, appears to be systematically related to the perceived characteristics of the hazard. An accident that takes many lives may produce relatively little social disturbance (beyond that caused to the victims' families and friends) if it occurs as part of a familiar and well-understood system (e.g., a train wreck). However, a small incident in an unfamiliar system (or one perceived as poorly understood), such as a nuclear waste repository or a recombinant DNA laboratory, may have immense social consequences if it is perceived as a harbinger of future and possibly catastrophic mishaps.

One implication of the signal concept is that effort and expense beyond that indicated by a cost-benefit analysis might be warranted to reduce the possibility of "high-signal events." Unfortunate events involving hazards in the upper right quadrant of Figure 2 appear particularly likely to have the potential to produce large ripples. As a result, risk analyses involving these hazards need to be made sensitive to these possible higher order impacts. Doing so would likely bring greater protection to potential victims as well as to companies and industries.
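The signal argument can be framed as a simple expected-value comparison. In the sketch below every number is hypothetical, and higher-order impacts are simply added to direct damages, which is a deliberate oversimplification of the ripple process. A safeguard that fails a cost-benefit test based on direct losses alone can pass once plausible indirect impacts are counted.

```python
def expected_loss_reduction(p_before: float, p_after: float,
                            direct_loss: float, indirect_loss: float = 0.0) -> float:
    """Reduction in expected annual loss from a safeguard (simple expected-value model)."""
    return (p_before - p_after) * (direct_loss + indirect_loss)


SAFEGUARD_COST = 500_000         # hypothetical annual cost of the safeguard, dollars
P_BEFORE, P_AFTER = 0.01, 0.002  # hypothetical annual probabilities of a mishap
DIRECT = 20_000_000              # hypothetical direct damages to victims and property
INDIRECT = 200_000_000           # hypothetical ripple effects: litigation, lost sales, regulation

narrow = expected_loss_reduction(P_BEFORE, P_AFTER, DIRECT)
broad = expected_loss_reduction(P_BEFORE, P_AFTER, DIRECT, INDIRECT)
print(f"direct losses only: benefit ${narrow:,.0f}, adopt = {narrow > SAFEGUARD_COST}")
print(f"including ripples:  benefit ${broad:,.0f}, adopt = {broad > SAFEGUARD_COST}")
```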

Sex, Politics, and Emotion in Risk Judgments

Given the complex and subjective nature of risk, it should not surprise us that many interesting and provocative things occur when people judge risks. Recent studies have shown that factors such as gender, race, political worldviews, affiliation, emotional affect, and trust are strongly correlated with risk judgments. Equally important is that these factors influence the judgments of experts as well as the judgments of laypersons.

Sex

Sex is strongly related to risk judgments and attitudes. Several dozen studies have documented the finding that men tend to judge risks as smaller and less problematic than do women. A number of hypotheses have been put forward to explain these differences in risk perception. One approach has been to focus on biological and social factors. For example, women have been characterized as more concerned about human health and safety because they give birth and are socialized to nurture and maintain life (Steger and Witt, 1989). They have also been characterized as physically more vulnerable to violence, such as rape, and this may sensitize them to other risks (Baumer, 1978; Riger et al., 1978). The combination of biology and social experience has been put forward as the source of a "different voice" that is distinct to women (Gilligan, 1982; Merchant, 1980).

A lack of knowledge and familiarity with science and technology has also been suggested as a basis for these differences, particularly with regard to nuclear and chemical hazards. Women are discouraged from studying science and there are relatively few women scientists and engineers (Alper, 1993). However, Barke et al. (1997) have found that female physical scientists judge risks from nuclear technologies to be higher than do male physical scientists. Similar results with scientists were obtained by Slovic et al. (1997), who found that female members of the British Toxicological Society were far more likely than male toxicologists to judge societal risks as moderate or high. Certainly the female scientists in these studies cannot be accused of lacking knowledge and technological literacy. Something else must be going on.

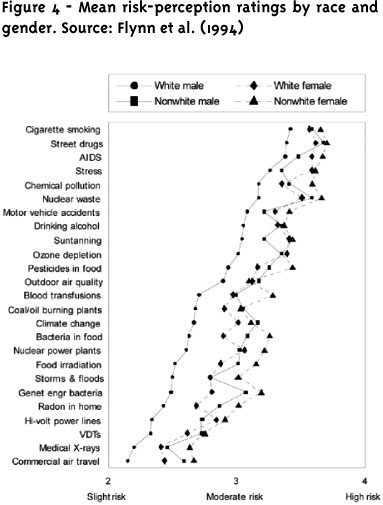

Hints about the origin of these sex differences come from a study by Flynn et al. (1994) in which 1,512 Americans were asked, for each of 25 hazard items, to indicate whether the hazard posed (1) little or no risk, (2) slight risk, (3) moderate risk, or (4) high risk to society. The percentage of "high-risk" responses was greater for women on every item. Perhaps the most striking result from this study is shown in Figure 4, which presents the mean risk ratings separately for White males, White females, non-White males, and non-White females. Across the 25 hazards, White males produced risk-perception ratings that were consistently much lower than the means of the other three groups.

Although perceived risk was inversely related to income and educational level, controlling for these differences statistically did not reduce much of the White-male effect on risk perception.

When the data underlying Figure 4 were examined more closely, Flynn et al. observed that not all White males perceived risks as low. The "White-male effect" appeared to be caused by about 30% of the White-male sample who judged risks to be extremely low. The remaining White males were not much different from the other subgroups with regard to perceived risk.
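The observation that a subgroup can produce the whole effect is easy to illustrate. The sketch uses invented respondent scores on the survey's 1 to 4 scale, constructed so that 30% of the White-male group rates risks very low, and a hypothetical helper `drop_lowest` that removes that fraction before recomputing the gap between group means.

```python
from statistics import fmean

# Invented mean risk ratings per respondent, 1 (little or no risk) to 4 (high risk).
white_males = [1.4] * 30 + [2.6] * 70  # about 30% judge risks extremely low
other_respondents = [2.7] * 100


def drop_lowest(scores: list, fraction: float) -> list:
    """Hypothetical helper: remove the lowest `fraction` of scores."""
    k = round(len(scores) * fraction)
    return sorted(scores)[k:]


gap_all = fmean(other_respondents) - fmean(white_males)
gap_without_subgroup = fmean(other_respondents) - fmean(drop_lowest(white_males, 0.30))
print(f"gap with all White males: {gap_all:.2f}")
print(f"gap without the lowest 30%: {gap_without_subgroup:.2f}")
```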

What differentiated these White males who were most responsible for the effect from the rest of the sample, including other White males who judged risks as relatively high? When compared to the remainder of the sample, the group of White males with the lowest risk-perception scores were better educated (42.7% college or postgraduate degree vs. 26.3% in the other group), had higher household incomes (32.1% above $50,000 vs. 21.0%), and were politically more conservative (48.0% conservative vs. 33.2%).

Particularly noteworthy is the finding that the low risk-perception subgroup of White males also held very different attitudes from the other respondents. Specifically, they were more likely than the others to:

Agree that future generations can take care of themselves when facing risks imposed on them from today's technologies (64.2% vs. 46.9%).

Agree that if a risk is very small it is okay for society to impose that risk on individuals without their consent (31.7% vs. 20.8%).

Agree that science can settle differences of opinion about the risks of nuclear power (61.8% vs. 50.4%).

Agree that government and industry can be trusted with making the proper decisions to manage the risks from technology (48.0% vs. 31.1%).

Agree that we can trust the experts and engineers who build, operate, and regulate nuclear power plants (62.6% vs. 39.7%).

Agree that we have gone too far in pushing equal rights in this country (42.7% vs. 30.9%).

Agree with the use of capital punishment (88.2% vs. 70.5%).

Disagree that technological development is destroying nature (56.9% vs. 32.8%).

Disagree that they have very little control over risks to their health (73.6% vs. 63.1%).

Disagree that the world needs a more equal distribution of wealth (42.7% vs. 31.3%).

Disagree that local residents should have the authority to close a nuclear power plant if they think it is not run properly (50.4% vs. 25.1%).

Disagree that the public should vote to decide on issues such as nuclear power (28.5% vs. 16.7%).
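Each difference can be read more easily as a percentage-point gap. The short Python sketch below uses only the percentages reported in the list above (item labels are abbreviated) and sorts the items by gap.

```python
# Percentages from the list above: (low risk-perception White males, rest of sample).
items = {
    "agree: future generations can take care of themselves": (64.2, 46.9),
    "agree: small risks may be imposed without consent": (31.7, 20.8),
    "agree: science can settle nuclear-risk disputes": (61.8, 50.4),
    "agree: government and industry can be trusted": (48.0, 31.1),
    "agree: nuclear experts and engineers can be trusted": (62.6, 39.7),
    "agree: equal rights have been pushed too far": (42.7, 30.9),
    "agree: capital punishment": (88.2, 70.5),
    "disagree: technology is destroying nature": (56.9, 32.8),
    "disagree: little control over own health risks": (73.6, 63.1),
    "disagree: world needs more equal wealth": (42.7, 31.3),
    "disagree: residents may close a badly run plant": (50.4, 25.1),
    "disagree: public should vote on nuclear power": (28.5, 16.7),
}

# Percentage-point gap for each item, rounded to the precision of the source.
gaps = {item: round(subgroup - rest, 1) for item, (subgroup, rest) in items.items()}

for item, gap in sorted(gaps.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{gap:5.1f} points  {item}")
```

The three largest gaps (25.3, 24.1, and 22.9 points) concern local authority over nuclear plants, technology and nature, and trust in nuclear experts and engineers.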

In sum, the subgroup of White males who perceive risks to be quite low can be characterized by trust in institutions and authorities and by anti-egalitarian attitudes, including a disinclination toward giving decision-making power to citizens in areas of risk management.

The results of this study raise new questions. What does it mean for the explanations of gender differences when we see that the sizable differences between White males and White females do not exist for non-White males and non-White females? Why do a substantial percentage of White males see the world as so much less risky than everyone else sees it?

Obviously, the salience of biology is reduced by these data on risk perception and race. Biological factors should apply to non-White men and women as well as to White men and women. The present data thus move us away from biology and toward sociopolitical explanations. Perhaps White males see less risk in the world because they create, manage, control, and benefit from many of the major technologies and activities. Perhaps women and non-White men see the world as more dangerous because in many ways they are more vulnerable, because they benefit less from many of its technologies and institutions, and because they have less power and control over what happens in their communities and their lives. Although the survey conducted by Flynn, Slovic, and Mertz was not designed to test these alternative explanations, the race and gender differences in perceptions and attitudes point toward the role of power, status, alienation, trust, perceived government responsiveness, and other sociopolitical factors in determining perception and acceptance of risk.

To the extent that these sociopolitical factors shape public perception of risks, we can see why traditional attempts to make people see the world as White males do, by showing them statistics and risk assessments, are often unsuccessful. The problem of risk conflict and controversy goes beyond science. It is deeply rooted in the social and political fabric of our society.

Risk Perception, Emotion, and Affect

The studies described in the preceding section illustrate the role of worldviews as orienting mechanisms. Research suggests that emotion is also an orienting mechanism that directs fundamental psychological processes such as attention, memory, and information processing. Emotion and worldviews may thus be functionally similar in that both may help us navigate quickly and efficiently through a complex, uncertain, and sometimes dangerous world.

The discussion in this section is concerned with a subtle form of emotion called affect, defined as a positive (like) or negative (dislike) evaluative feeling toward an external stimulus (e.g., some hazard such as cigarette smoking). Such evaluations occur rapidly and automatically; note how quickly you sense a negative affective feeling toward the stimulus word "hate" or the word "cancer."

Support for the conception of affect as an orienting mechanism comes from a study by Alhakami and Slovic (1994). They observed that, whereas the risks and benefits to society from various activities and technologies (e.g., nuclear power, commercial aviation) tend to be positively correlated in the world, they are inversely correlated in people's minds (higher perceived benefit is associated with lower perceived risk; lower perceived benefit is associated with higher perceived risk). Alhakami and Slovic found that this inverse relationship was linked to people's reliance on general affective evaluations when making risk/benefit judgments. When the affective evaluation was favorable (as with automobiles, for example), the activity or technology being judged was seen as having high benefit and low risk; when the evaluation was unfavorable (as with pesticides, for example), risks tended to be seen as high and benefits as low. It thus appears that the affective response is primary, and the risk and benefit judgments are derived (at least partly) from it.

Finucane et al. (2000) investigated the inverse relationship between risk and benefit judgments under a time-pressure condition designed to limit the use of analytic thought and enhance the reliance on affect. As expected, the inverse relationship was strengthened when time pressure was introduced. A second study tested and confirmed the hypothesis that providing information designed to alter the favorability of one's overall affective evaluation of an item (say nuclear power) would systematically change the risk and benefit judgments for that item. For example, providing information calling people's attention to the benefits provided by nuclear power (as a source of energy) depressed people's perception of the risks of that technology. The same sort of reduction in perceived risk occurred for food preservatives and natural gas, when information about their benefits was provided. Information about risk was also found to alter perception of benefit. A model depicting how reliance upon affect can lead to these observed changes in perception of risk and benefit is shown in Figure 5.
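The account in the two preceding paragraphs can be sketched as a toy model. Everything in it is an assumption for illustration: the ratings are invented, actual risk and benefit are constructed to be positively correlated across hazards, and each judgment is modeled as a weighted mix of the hazard's actual standing and a single affective evaluation, controlled by a hypothetical `weight_on_facts` parameter. When that weight is low, loosely analogous to limiting analytic thought under time pressure, judged risk and judged benefit become inversely correlated even though the underlying values are not.

```python
from math import sqrt
from statistics import fmean

# Invented ratings: hazard -> (actual_risk, actual_benefit, affect), affect from -2 (dislike) to +2 (like).
hazards = {
    "nuclear power": (6, 6, -2),
    "automobiles": (6, 7, 2),
    "pesticides": (4, 5, -2),
    "commercial aviation": (3, 5, 1),
    "food preservatives": (2, 3, -1),
    "bicycles": (2, 2, 2),
}


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = fmean(xs), fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return cov / sqrt(sum((x - mx) ** 2 for x in xs) * sum((y - my) ** 2 for y in ys))


def judged_correlation(weight_on_facts: float) -> float:
    """Correlation between judged risk and judged benefit under the toy affect model."""
    judged_risk, judged_benefit = [], []
    for risk, benefit, affect in hazards.values():
        # Liking lowers judged risk and raises judged benefit (the midpoint 4 is arbitrary).
        judged_risk.append(weight_on_facts * risk + (1 - weight_on_facts) * (4 - affect))
        judged_benefit.append(weight_on_facts * benefit + (1 - weight_on_facts) * (4 + affect))
    return pearson(judged_risk, judged_benefit)


for w in (1.0, 0.5, 0.2):
    print(f"weight on facts {w}: r = {judged_correlation(w):+.2f}")
```

With a weight of 1.0 the judgments simply reproduce the positive real-world relationship; as the weight falls, the shared affective term dominates and pushes the correlation toward -1.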

Slovic et al. (1991a, b) studied the relationship between affect and perceived risk for hazards related to nuclear power. For example, Slovic, Flynn, and Layman asked respondents, "What is the first thought or image that comes to mind when you hear the phrase 'nuclear waste repository'?" After providing up to three associations to the repository stimulus, each respondent rated the affective quality of these associations on a five-point scale ranging from extremely negative to extremely positive.

Although most of the images that people evoke when asked to think about nuclear power or nuclear waste are affectively negative (e.g., death, destruction, war, catastrophe), some are positive (e.g., abundant electricity and the benefits it brings). The affective values of these positive and negative images appear to sum in a way that is predictive of our attitudes, perceptions, and behaviors. If the balance is positive, we respond favorably; if it is negative, we respond unfavorably. For example, the affective quality of a person's associations to a nuclear waste repository was found to be related to whether the person would vote for or against a referendum on a nuclear waste repository and to their judgments regarding the risk of a repository accident. Specifically, more than 90% of those people whose first image was judged very negative said that they would vote against a repository in Nevada; fewer than 50% of those people whose first image was positive said they would vote against the repository (Slovic et al., 1991a).
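The summation idea can be sketched in a few lines. The sketch assumes that the five-point scale is coded from -2 (extremely negative) to +2 (extremely positive), that a respondent's overall affect is the plain sum of the ratings of his or her associations, and that the sign of the sum predicts the response. The coding, the "neutral" category, and the function names are illustrative assumptions, not the scoring procedure used by Slovic et al. (1991a).

```python
VALID_RATINGS = (-2, -1, 0, 1, 2)  # assumed coding of the five-point scale


def overall_affect(ratings):
    """Sum the affect ratings of a respondent's associations."""
    for rating in ratings:
        if rating not in VALID_RATINGS:
            raise ValueError(f"rating {rating} is outside the assumed -2..+2 coding")
    return sum(ratings)


def predicted_response(ratings):
    """Predict the direction of response from the balance of affect."""
    total = overall_affect(ratings)
    if total > 0:
        return "favorable"
    if total < 0:
        return "unfavorable"
    return "neutral"  # hypothetical tie case, not discussed in the text


# Hypothetical respondents and their rated images.
print(predicted_response([-2, -2, 1]))  # unfavorable ("death", "war", "cheap electricity")
print(predicted_response([2, 1, -1]))   # favorable
```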

Using data from the national survey of 1,500 Americans described earlier, Peters and Slovic (1996) found that the affective ratings of associations to the stimulus "nuclear power" were highly predictive of responses to the question: "If your community was faced with a shortage of electricity, do you agree or disagree that a new nuclear power plant should be built to supply that electricity?" Among the 25% of respondents with the most positive associations to nuclear power, 69% agreed that a new plant should be built. Among the 25% with the most negative associations, only 13% agreed.

The Importance of Trust

The research described above has painted a portrait of risk perception influenced by the interplay of psychological, social, and political factors. Members of the public and experts can disagree about risk because they define risk differently, have different worldviews, different affective experiences and reactions, or different social status. Another reason why the public often rejects scientists' risk assessments is lack of trust. Trust in risk management, like risk perception, has been found to correlate with gender, race, worldviews, and affect.

Social relationships of all types, including risk management, rely heavily on trust. Indeed, much of the contentiousness that has been observed in the risk-management arena has been attributed to a climate of distrust that exists between the public, industry, and risk-management professionals (e.g., Slovic, 1993; Slovic et al., 1991a). The limited effectiveness of risk-communication efforts can be attributed to the lack of trust. If you trust the risk manager, communication is relatively easy. If trust is lacking, no form or process of communication will be satisfactory (Fessenden-Raden et al., 1987).

How Trust Is Created and Destroyed

One of the most fundamental qualities of trust has been known for ages: trust is fragile. It is typically created rather slowly, but it can be destroyed in an instant, by a single mishap or mistake. Thus, once trust is lost, it may take a long time to rebuild it to its former state. In some instances, lost trust may never be regained. Abraham Lincoln understood this quality. In a letter to Alexander McClure, he observed: "If you forfeit the confidence of your fellow citizens, you can never regain their respect and esteem" [italics added].

The fact that trust is easier to destroy than to create reflects certain fundamental mechanisms of human psychology called here "the asymmetry principle." When it comes to winning trust, the playing field is not level. It is tilted toward distrust, for each of the following reasons:

1. Negative (trust-destroying) events are more visible or noticeable than positive (trust-building) events. Negative events often take the form of specific, well-defined incidents such as accidents, lies, discoveries of errors, or other mismanagement. Positive events, while sometimes visible, more often are fuzzy or indistinct. For example, how many positive events are represented by the safe operation of a nuclear power plant for one day? Is this one event? Dozens of events? Hundreds? There is no precise answer. When events are invisible or poorly defined, they carry little or no weight in shaping our attitudes and opinions.

2. When events are well-defined and do come to our attention, negative (trust-destroying) events carry much greater weight than positive events (Slovic, 1993).

3. Adding fuel to the fire of asymmetry is yet another idiosyncrasy of human psychology: sources of bad (trust-destroying) news tend to be seen as more credible than sources of good news. The findings reported in Section 3.4 regarding "intuitive toxicology" illustrate this point. In general, confidence in the validity of animal studies is not particularly high. However, when told that a study has found that a chemical is carcinogenic in animals, members of the public express considerable confidence in the validity of this study for predicting health effects in humans.2

4. Another important psychological tendency is that distrust, once initiated, tends to reinforce and perpetuate distrust. Distrust tends to inhibit the kinds of personal contacts and experiences that are necessary to overcome distrust. By avoiding others whose motives or actions we distrust, we never get to see that these people are competent, well-meaning, and trustworthy.
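The asymmetry principle can be illustrated with a toy trust-updating rule. This is a sketch under an explicit assumption: a well-defined negative event lowers trust by a much larger step than a positive event raises it. The step sizes (0.02 and 0.30), the starting value, and the function name are hypothetical and are not estimates from Slovic (1993).

```python
def update_trust(trust, event, gain=0.02, loss=0.30):
    """Return trust (0 to 1) after one well-defined event, 'good' or 'bad'.

    gain and loss are hypothetical step sizes chosen so that a bad event
    weighs far more than a good one, as the asymmetry principle describes.
    """
    if event == "good":
        return trust + gain * (1 - trust)  # small step toward full trust
    if event == "bad":
        return trust - loss * trust  # large proportional drop
    raise ValueError("event must be 'good' or 'bad'")


trust = 0.5
for _ in range(50):  # fifty trust-building events, one at a time
    trust = update_trust(trust, "good")
built = trust

after_one_bad = update_trust(built, "bad")  # a single mishap
print(f"start 0.50, after 50 good events {built:.2f}, after 1 bad event {after_one_bad:.2f}")
```

With these assumed weights, fifty good events raise trust from 0.50 to about 0.82, and a single bad event drops it to about 0.57, erasing roughly three quarters of the gain. The sketch ignores the first point in the list (that positive events are often too fuzzy to be counted at all), which would tilt the balance further toward distrust.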

"The System Destroys Trust"

Thus far we have been discussing the psychological tendencies that create and reinforce distrust in situations of risk. Appreciation of those psychological principles leads us toward a new perspective on risk perception, trust, and conflict. Conflicts and controversies surrounding risk management are not due to public irrationality or ignorance but, instead, can be seen as expected side effects of these psychological tendencies, interacting with a highly participatory democratic system of government and amplified by certain powerful technological and social changes in society. Technological change has given the electronic and print media the capability (effectively utilized) of informing us of news from all over the world-often right as it happens. Moreover, just as individuals give greater weight and attention to negative events, so do the news media. Much of what the media reports is bad (trust-destroying) news (Lichtenberg and MacLean, 1992).

A second important change, a social phenomenon, is the rise of powerful special interest groups, well funded (by a fearful public) and sophisticated in using their own experts and the media to communicate their concerns and their distrust to the public in order to influence risk policy debates and decisions (Fenton, 1989). The social problem is compounded by the fact that we tend to manage our risks within an adversarial legal system that pits expert against expert, with each side contradicting the other's risk assessments and further destroying public trust.

The young science of risk assessment is too fragile, too indirect, to prevail in such a hostile atmosphere. Scientific analysis of risks cannot allay our fears of low-probability catastrophes or delayed cancers unless we trust the system. In the absence of trust, science (and risk assessment) can only feed public concerns, by uncovering more bad news. A single study demonstrating an association between exposure to chemicals or radiation and some adverse health effect cannot easily be offset by numerous studies failing to find such an association. Thus, for example, the more studies that are conducted looking for effects of electric and magnetic fields or other difficult-to-evaluate hazards, the more likely it is that these studies will increase public concerns, even if the majority of these studies fail to find any association with ill health (MacGregor et al., 1994; Morgan et al., 1985). In short, because evidence for lack of risk often carries little weight, risk-assessment studies tend to increase perceived risk.
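The arithmetic behind the last point can be made explicit with a toy accumulation model. It assumes, purely for illustration, that each study reporting an association raises public concern by a fixed amount `up` and each null study lowers it by a much smaller amount `down`; both weights and the example literature are hypothetical.

```python
def perceived_concern(findings, up=1.0, down=0.1):
    """Accumulate public concern over a sequence of study results.

    findings: booleans, True if a study reported an association with ill health.
    up, down: hypothetical weights; a reported association raises concern far
    more than a null result lowers it.
    """
    concern = 0.0
    for found_association in findings:
        concern += up if found_association else -down
    return concern


# Hypothetical literature: 2 of 10 studies report an association, 8 do not.
studies = [True, False, False, False, True, False, False, False, False, False]
concern = perceived_concern(studies)
print(f"concern after {len(studies)} studies: {concern:.1f}")
```

Under this model, concern ends above its starting point whenever `up` times the number of positive studies exceeds `down` times the number of null studies. Here 2 × 1.0 outweighs 8 × 0.1, so concern rises to about 1.2 even though most studies found nothing.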

Resolving Risk Conflicts: Where Do We Go from Here?

The psychometric paradigm has been employed internationally. One such international study by Slovic et al. (2000) helps frame two different solutions to resolving risk conflicts. This study compared public views of nuclear power in the United States, where this technology is resisted, and France, where nuclear energy appears to be embraced (France obtains about 80% of its electricity from nuclear power). Researchers found, to their surprise, that concerns about the risks from nuclear power and nuclear waste were high in France and were at least as great there as in the U.S. Thus, perception of risk could not account for the different level of reliance on nuclear energy in the two countries. Further analysis of the survey data uncovered a number of differences that might be important in explaining the difference between France and the U.S. Specifically, the French:

saw greater need for nuclear power and greater economic benefit from it;

had greater trust in scientists, industry, and government officials who design, build, operate, and regulate nuclear power plants;

were more likely to believe that decision-making authority should reside with the experts and government authorities, rather than with the people.

These findings point to some important differences between the workings of democracy in the U.S. and France and the effects of different "democratic models" on acceptance of risks. One such model relies primarily on technical solutions to resolving risk conflicts; the other looks to process-oriented solutions.

Technical Solutions to Risk Conflicts

There has been no shortage of high-level attention given to the risk conflicts described above. One prominent proposal by Justice Stephen Breyer (1993) attempts to break what he sees as a vicious circle of public perception, congressional overreaction, and conservative regulation that leads to obsessive and costly preoccupation with reducing negligible risks as well as to inconsistent standards among health and safety programs. Breyer sees public misperceptions of risk and low levels of mathematical understanding at the core of excessive regulatory response. His proposed solution is to create a small centralized administrative group charged with creating uniformity and rationality in highly technical areas of risk management. This group would be staffed by civil servants with experience in health and environmental agencies, Congress, and the Office of Management and Budget (OMB). A parallel is drawn between this group and the prestigious Conseil d'Etat in France.

Similar frustration with the costs of meeting public demands led the 104th Congress to introduce numerous bills designed to require all major new regulations to be justified by extensive risk assessments. Proponents of this legislation argued that such measures are necessary to ensure that regulations are based on "sound science" and effectively reduce significant risks at reasonable costs.

The language of this proposed legislation reflects the traditional narrow view of risk and risk assessment based "only on the best reasonably available scientific data and scientific understanding." Agencies are further directed to develop a systematic program for external peer review using "expert bodies" or "other devices comprised of participants selected on the basis of their expertise relevant to the sciences involved" (United States, 1995, pp. 57-58). Public participation in this process is advocated, but no mechanisms for this are specified.

The proposals by Breyer and the 104th Congress are typical in their call for more and better technical analysis and expert oversight to rationalize risk management. There is no doubt that technical analysis is vital for making risk decisions better informed, more consistent, and more accountable. However, value conflicts and pervasive distrust in risk management cannot easily be reduced by technical analysis. Trying to address risk controversies primarily with more science is, in fact, likely to exacerbate conflict.

Process-Oriented Solutions

A major objective of this paper has been to demonstrate the complexity of risk and its assessment. To summarize the earlier discussions, danger is real, but risk is socially constructed. Risk assessment is inherently subjective and represents a blending of science and judgment with important psychological, social, cultural, and political factors. Finally, our social and democratic institutions, remarkable as they are in many respects, breed distrust in the risk arena.

Whoever controls the definition of risk controls the rational solution to the problem at hand. If you define risk one way, then one option will rise to the top as the most cost-effective or the safest or the best. If you define it another way, perhaps incorporating qualitative characteristics and other contextual factors, you will likely get a different ordering of your action solutions (Fischhoff et al., 1984). Defining risk is thus an exercise in power.
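A small hypothetical example shows how the definition of risk can reorder the same set of options. The options, the fatality figures, the "catastrophic" flag, and the doubling weight for catastrophic potential are all invented for illustration; Fischhoff et al. (1984) make the general argument, not these numbers.

```python
# Hypothetical options: expected annual fatalities plus one qualitative
# characteristic (catastrophic potential) of the kind studied in psychometric research.
options = {
    "option A": {"expected_deaths": 10.0, "catastrophic": True},
    "option B": {"expected_deaths": 14.0, "catastrophic": False},
    "option C": {"expected_deaths": 12.0, "catastrophic": False},
}


def risk_as_expected_deaths(o):
    # Definition 1: risk is expected fatalities only.
    return o["expected_deaths"]


def risk_with_dread(o, dread_weight=2.0):
    # Definition 2 (assumed): deaths from a catastrophic source count double.
    return o["expected_deaths"] * (dread_weight if o["catastrophic"] else 1.0)


def ranking(definition):
    """Options ordered from lowest to highest risk under one definition."""
    return sorted(options, key=lambda name: definition(options[name]))


print(ranking(risk_as_expected_deaths))  # ['option A', 'option C', 'option B']
print(ranking(risk_with_dread))          # ['option C', 'option B', 'option A']
```

The option judged safest under the first definition becomes the riskiest under the second, although no fact about the options has changed.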

Scientific literacy and public education are important, but they are not central to risk controversies. The public is not irrational. The public is influenced by emotion and affect in a way that is both simple and sophisticated. So are scientists. The public is influenced by worldviews, ideologies, and values. So are scientists, particularly when they are working at the limits of their expertise.

The limitations of risk science, the importance and difficulty of maintaining trust, and the subjective and contextual nature of the risk game point to the need for a new approach, one that focuses on introducing more public participation into both risk assessment and risk decision making to make the decision process more democratic, improve the relevance and quality of technical analysis, and increase the legitimacy and public acceptance of the resulting decisions. Work by scholars and practitioners in Europe and North America has begun to lay the foundations for improved methods of public participation within deliberative decision processes that include negotiation, mediation, oversight committees, and other forms of public involvement (English, 1992; Kunreuther et al., 1993; National Research Council, 1996; Renn et al., 1991, 1995).

Recognizing interested and affected citizens as legitimate partners in the exercise of risk assessment is no short-term panacea for the problems of risk management. It won't be easy and it isn't guaranteed. But serious attention to participation and process issues may, in the long run, lead to more satisfying and successful ways to manage risk.

Received: 12 Jan 2010

Accepted: 31 Mar 2010

- ALHAKAMI, A. S.; SLOVIC, P. A psychological study of the inverse relationship between perceived risk and perceived benefit. Risk Analysis, New Jersey, v. 14, n. 6, p. 1085-1096, dec. 1994.

- ALPER, J. The pipeline is leaking women all the way along. Science, Washington, DC, v. 260, n. 5106, p. 409-411, april 1993.

- BARKE, R.; JENKINS-SMITH, H.; SLOVIC, P. Risk perceptions of men and women scientists. Social Science Quarterly, Oklahoma, v. 78, n. 1, p. 167-176, 1997.

- BAUMER, T. L. Research on fear of crime in the United States. Victimology, v. 3, p. 254-264, 1978.

- BREYER, S. Breaking the vicious circle: toward effective risk regulation. Cambridge, MA: Harvard University Press, 1993.

- BURNS, W. et al. Social amplification of risk: an empirical study. Carson City, NV: Nevada Agency for Nuclear Projects, Nuclear Waste Project Office, 1990.

- COHEN, B. L. Criteria for technology acceptability. Risk Analysis, New Jersey, v. 5, n. 1, p. 1-3, mar. 1985.

- COVELLO, V. T. et al. The analysis of actual versus perceived risks. New York: Plenum, 1983.

- CROUCH, E. A. C.; WILSON, R. Risk/benefit analysis. Cambridge, MA: Ballinger, 1982.

- DUPONT, R. L. Nuclear phobia: phobic thinking about nuclear power. Washington, DC: The Media Institute, 1980.

- ENGLISH, M. R. Siting low-level radioactive waste disposal facilities: the public policy dilemma. New York: Quorum, 1992.

- FENTON, D. How a PR firm executed the Alar scare. Wall Street Journal, New York, p. A 22, 3 oct. 1989.

- FESSENDEN-RADEN, J.; FITCHEN, J. M.; HEATH, J. S. Providing risk information in communities: factors influencing what is heard and accepted. Science, Technology, & Human Values, California, v. 12, p. 94-101, 1987.

- FINUCANE, M. L. et al. The affect heuristic in judgments of risks and benefits. Journal of Behavioral Decision Making, Malden, MA, v. 13, n. 1, p. 1-17, jan.-mar. 2000.

- FISCHHOFF, B. et al. How safe is safe enough? A psychometric study of attitudes toward technological risks and benefits. Policy Sciences, New York, v. 9, n. 2, p. 127-152, april 1978.

- FISCHHOFF, B.; WATSON, S.; HOPE, C. Defining risk. Policy Sciences, New York, v. 17, n. 2, p. 123-139, oct. 1984.

- FLYNN, J.; SLOVIC, P.; MERTZ, C. K. Gender, race, and perception of environmental health risks. Risk Analysis, New Jersey, v. 14, n. 6, p. 1101-1108, 1994.

- FUNTOWICZ, S. O.; RAVETZ, J. R. Three types of risk assessment and the emergence of post-normal science. In: KRIMSKY, S.; GOLDING, D. (ed.). Social theories of risk. Westport, CT: Praeger, 1992. p. 251-273.

- GILLIGAN, C. In a different voice: psychological theory and women's development. Cambridge, MA: Harvard University, 1982.

- GREGORY, R.; FLYNN, J.; SLOVIC, P. Technological stigma. American Scientist, Research Triangle Park, NC, v. 83, p. 220-223, 1995.

- KASPERSON, R. E. et al. The social amplification of risk: a conceptual framework. Risk Analysis, New Jersey, v. 8, p. 177-187, 1988.

- KRAUS, N.; MALMFORS, T.; SLOVIC, P. Intuitive toxicology: expert and lay judgments of chemical risks. Risk Analysis, New Jersey, v. 12, p. 215-232, 1992.

- KRIMSKY, S.; GOLDING, D. Social theories of risk. Westport, CT: Praeger-Greenwood, 1992.

- KUNREUTHER, H.; FITZGERALD, K.; AARTS, T. D. Siting noxious facilities: a test of the facility siting credo. Risk Analysis, New Jersey, v. 13, p. 301-318, 1993.

- LICHTENBERG, J.; MACLEAN, D. Is good news no news? The Geneva Papers on Risk and Insurance, Geneva, Switzerland, v. 17, n. 3, p. 362-365, july 1992.

- MACGREGOR, D.; SLOVIC, P.; MORGAN, M. G. Perception of risks from electromagnetic fields: a psychometric evaluation of a risk-communication approach. Risk Analysis, New Jersey, v. 14, n. 5, p. 815-828, 1994.

- MAZUR, A. The dynamics of technical controversy. Washington, DC: Communications Press, 1981.

- MERCHANT, C. The death of nature: women, ecology, and the scientific revolution. New York: Harper & Row, 1980.

- MITCHELL, M. L. The impact of external parties on brand-name capital: the 1982 Tylenol poisonings and subsequent cases. Economic Inquiry, Malden, MA, v. 27, n. 4, p. 601-618, oct. 1989.

- MOORE, J. A. Speaking of data: the Alar controversy. EPA Journal, v. 15, p. 5-9, may 1989.

- MORGAN, M. G. et al. Powerline frequency electric and magnetic fields: a pilot study of risk perception. Risk Analysis, New Jersey, v. 5, p. 139-149, 1985.

- NATIONAL RESEARCH COUNCIL (NRC). Committee on Risk Characterization. Understanding risk: informing decisions in a democratic society. Washington, DC: National Academy Press, 1996.

- NATIONAL RESEARCH COUNCIL (NRC). Committee on Risk Perception and Communication. Improving risk communication. Washington, DC: National Academy Press, 1989.

- OTWAY, H. Public wisdom, expert fallibility: toward a contextual theory of risk. In: KRIMSKY, S.; GOLDING, D. (ed.). Social theories of risk. Westport, CT: Praeger, 1992. p. 215-228.

- PETERS, E.; SLOVIC, P. The role of affect and worldviews as orienting dispositions in the perception and acceptance of nuclear power. Journal of Applied Social Psychology, Malden, MA, v. 26, n. 16, p. 1427-1453, aug. 1996.

- PIDGEON, N. et al. Risk perception. In: The Royal Society Study Group (ed.), Risk: analysis, perception and management. London: The Royal Society, 1992. p. 89-134.

- RENN, O.; WEBLER, T.; JOHNSON, B. B. Public participation in hazard management: the use of citizen panels in the U.S. Risk: Health, Safety & Environment, Concord, NH, v. 2, n. 3, p. 197-226, 1991.

- RENN, O.; WEBLER, T.; WIEDEMANN, P. Fairness and competence in citizen participation: evaluating models for environmental discourse. Dordrecht, The Netherlands: Kluwer Academic, 1995.

- RIGER, S.; GORDON, M. T.; LEBAILLY, R. Women's fear of crime: from blaming to restricting the victim. Victimology, v. 3, p. 274-284, 1978.

- SLOVIC, P. Perception of risk. Science, Washington, DC, v. 236, n. 4799, p. 280-285, april 1987.

- SLOVIC, P. Trust, emotion, sex, politics, and science: surveying the risk-assessment battlefield. In: BAZERMAN, M. H. et al. (ed.). Environment, ethics, and behavior. San Francisco: New Lexington, 1997. p. 277-313.

- SLOVIC, P. Perceived risk, trust, and democracy. Risk Analysis, New Jersey, v. 13, n. 6, p. 675-682, dec. 1993.

- SLOVIC, P.; FISCHHOFF, B.; LICHTENSTEIN, S. Rating the risks. Environment, Philadelphia, PA, v. 21, n. 3, p. 14-20, 36-39, 1979.

- SLOVIC, P.; FISCHHOFF, B.; LICHTENSTEIN, S. Behavioral decision theory perspectives on risk and safety. Acta Psychologica, Amsterdam, Netherlands, v. 56, n. 1-3, p. 183-203, aug. 1984.

- SLOVIC, P.; FISCHHOFF, B.; LICHTENSTEIN, S. Characterizing perceived risk. In: KATES, R. W.; HOHENEMSER, C.; KASPERSON, J. X. (ed.). Perilous progress: managing the hazards of technology. Boulder, CO: Westview, 1985. p. 91-123.

- SLOVIC, P.; FLYNN, J.; LAYMAN, M. Perceived risk, trust, and the politics of nuclear waste. Science, Washington, DC, v. 254, p. 1603-1607, 1991a.

- SLOVIC, P. et al. Nuclear power and the public: a comparative study of risk perception in France and the United States. In: RENN, O.; ROHRMANN, B. (ed.). Cross-cultural risk perception: a survey of empirical studies. Amsterdam: Kluwer Academic Press, 2000. p. 55-102.

- SLOVIC, P. et al. Perceived risk, stigma, and potential economic impacts of a high-level nuclear waste repository in Nevada. Risk Analysis, New Jersey, v. 11, n. 4, p. 683-696, dec. 1991b.

- SLOVIC, P. et al. Evaluating chemical risks: results of a survey of the British Toxicology Society. Human & Experimental Toxicology, Thousand Oaks, CA, v. 16, n. 6, p. 289-304, june 1997.

- STEGER, M. A. E.; WITT, S. L. Gender differences in environmental orientations: a comparison of publics and activists in Canada and the U.S. The Western Political Quarterly, Salt Lake City, UT, v. 42, n. 4, p. 627-649, dec. 1989.

- TENGS, T. O. et al. Five-hundred life-saving interventions and their cost effectiveness. Risk Analysis, New Jersey, v. 15, n. 3, p. 369-390, june 1995.

- UNITED STATES. Environmental Protection Agency. Office of Policy Analysis. Unfinished business: A comparative assessment of environmental problems. Washington, DC: EPA, 1987.

- UNITED STATES. Senate. The comprehensive regulatory reform act of 1995. Washington, DC: U.S. Government Printing Office, 1995.

- WEBSTER, N. Webster's new twentieth century dictionary. New York: Simon & Schuster, 1983.

- WEINER, R. F. Comment on Sheila Jasanoff's guest editorial. Risk Analysis, New Jersey, v. 13, n. 5, p. 495-496, oct. 1993.

- WYNNE, B. Risk and social learning: reification to engagement. In: KRIMSKY, S.; GOLDING, D. (ed.). Social theories of risk. Westport, CT: Praeger, 1992. p. 275-300.

Publication Dates

Publication in this collection: 10 Jan 2011

Date of issue: Dec 2010