Abstract

This study presents a systematic review of validity evidence for neuropsychological batteries. Studies published in international databases between 2005 and 2012 were examined. Given the specific characteristics of neuropsychological batteries, the aim of the study was to review the statistical analyses and procedures that have been used to validate these instruments. A total of 1,218 abstracts were read, of which 147 involved studies of neuropsychological batteries or tests that evaluated at least three cognitive processes. The full text of each available article was analyzed according to publication year, focal instrument of the study, sample type, sample age range, characterization of the participants, and procedures and analyses used to provide evidence of validity. The results showed that the studies primarily analyzed patterns of convergence and divergence by correlating the instruments with other tests. Measures of reliability, such as internal consistency and test-retest reliability, were also frequently employed. To provide evidence of relationships between test scores and external criteria, the most common procedures were evaluations of sensitivity and specificity and comparisons between contrasting groups. The most frequently used statistical analyses were Receiver Operating Characteristic (ROC) analysis, Pearson correlation, and Cronbach's alpha. We discuss the need to incorporate both classic and modern psychometric procedures and to present a broader scope of validity evidence, which would represent progress in this field. Finally, we hope our findings will help researchers better plan the validation process for new neuropsychological instruments and batteries.

Keywords: neuropsychological assessment, validity, reliability, systematic review.

A systematic review of validity procedures used in neuropsychological batteries

Josiane Pawlowski (I); Joice Dickel Segabinazi (II); Flávia Wagner (II); Denise Ruschel Bandeira (II)

(I) Universidade Federal do Rio de Janeiro, Rio de Janeiro, RJ, Brazil

(II) Universidade Federal do Rio Grande do Sul, Porto Alegre, RS, Brazil

Correspondence: Josiane Pawlowski, Universidade Federal do Rio de Janeiro, Instituto de Psicologia, Departamento de Psicometria, Av. Pasteur, 250, Pavilhão Nilton Campos, Praia Vermelha, Urca, Rio de Janeiro, 22290-250, Brazil. Phone: +55 21 38735339. E-mail: josipski@gmail.com

Introduction

Brazilian neuropsychology researchers are increasingly interested in developing and adapting instruments based on evidence of validity (Abrisqueta-Gomez, Ostrosky-Solis, Bertolucci, & Bueno, 2008; Caldas, Zunzunegui, Freire, & Guerra, 2012; Carod-Artal, Martínez-Martin, Kummer, & Ribeiro, 2008; Carvalho, Barbosa, & Caramelli, 2010; Fonseca, Salles, & Parente, 2008; Pawlowski, Fonseca, Salles, Parente, & Bandeira, 2008). The validation process for psychological instruments includes different procedures and statistical techniques to evaluate psychometric properties (Pasquali, 2010; Urbina, 2004). Detailed procedures and techniques are described in the Standards for Educational and Psychological Testing (American Educational Research Association, 1999). Many statistical software programs can be used for instrument validation, as can be seen in published articles and test manuals. However, the applicability of each technique depends on the characteristics of the instrument being validated. Neuropsychological batteries, in particular, vary in the type and quality of their test items, the number of cognitive functions examined, and the constructs measured.

Many neuropsychological instruments include tasks that evaluate different cognitive domains, and they require validation techniques distinct from those used for conventional rating scales, such as Likert-type scales. Some procedures or statistical analyses can be difficult to apply in specific situations, such as when the number of items is limited, or when a technique requires a large sample but the target population is hard to access. Comparisons of research methods in neuropsychological testing and specific guidelines for psychological and neuropsychological test development contribute to the refinement of interpretative, clinical, and psychometric methods (Blakesley et al., 2009; Brooks, Strauss, Sherman, Iverson, & Slick, 2009; Hunsley, 2009). Consistent with most validity frameworks and the current test standards (American Educational Research Association, 1999), tests differ in which categories of validity evidence are most crucial to test meaning, depending on the test's intended use (Embretson, 2007). A brief discussion of psychometric procedures used to provide evidence of the validity of neuropsychological assessment batteries can be found in Pawlowski, Trentini, and Bandeira (2007).

Given the specific characteristics of neuropsychological batteries, the aim of the present study was to review the procedures and statistical analyses that have been used to obtain evidence of the validity of these tests. This review may help researchers select appropriate statistical techniques and inform professionals about better procedures for instrument validation.

Materials and Methods

Abstracts and articles published in indexed periodicals and international databases between 2005 and 2012 were reviewed. The selected publications addressed both neuropsychological assessment and validity.

Study type

The present research was an integrated and systematic review (Fernández-Ríos & Buela-Casal, 2009). Starting from a set of quantitative studies, the aim was to integrate information about the analyses used to examine evidence of the validity of neuropsychological batteries.

Procedures

The PsycINFO and MEDLINE (EBSCO) databases were searched on May 3, 2013. The terms "neuropsychological assessment" and "validity" (keywords from the databases' thesauri) were used to search for abstracts published between January 2005 and December 2012, with no restriction on publication language. A database was created with all abstract titles, and duplicates between the MEDLINE and PsycINFO databases were removed. Abstracts were included if they investigated evidence of the validity of neuropsychological batteries that assessed at least three cognitive processes, for example, memory, language, and praxis (i.e., motor planning). Three independent judges classified each abstract according to the name of the instrument, study type (e.g., empirical, theoretical, or review), instrument type (e.g., battery, single task, or scale), the number of cognitive processes evaluated by the instrument, and whether the study evaluated any evidence of validity. Each abstract was read by at least two of the three judges; in case of disagreement, the abstract was evaluated by all three judges until consensus was reached. The selected abstracts were then read again, and articles that assessed evidence of the validity of computerized batteries were excluded. The complete article for each selected abstract was read and classified according to the following criteria: publication year, sample type (clinical or healthy), sample age group (children, teenagers, adults, or elderly), clinical pathology (for clinical samples), and the procedures and statistical analyses employed to provide evidence of validity.
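The screening steps described above can be expressed as simple filtering rules. The following Python sketch is a hypothetical illustration only: the record fields (standing in for the judges' consensus codes), the title-based duplicate matching, and the example records are assumptions, since the review does not report how duplicates were matched or how classifications were stored.

```python
from dataclasses import dataclass, field


@dataclass
class Abstract:
    """Hypothetical record for one retrieved abstract."""
    title: str
    source: str                                        # "PsycINFO" or "MEDLINE"
    processes: set[str] = field(default_factory=set)   # cognitive processes assessed
    reports_validity: bool = False                     # study evaluates validity evidence
    computerized: bool = False                         # computerized battery (excluded)


def normalize(title: str) -> str:
    """Lower-case a title and collapse whitespace so near-identical titles match."""
    return " ".join(title.lower().split())


def deduplicate(records: list[Abstract]) -> list[Abstract]:
    """Keep the first record for each normalized title."""
    seen: set[str] = set()
    unique = []
    for rec in records:
        key = normalize(rec.title)
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


def is_eligible(rec: Abstract) -> bool:
    """Inclusion rules: validity evidence, >= 3 cognitive processes, not computerized."""
    return rec.reports_validity and len(rec.processes) >= 3 and not rec.computerized


records = [
    Abstract("Validity of Battery X", "PsycINFO", {"memory", "language", "praxis"}, True),
    Abstract("Validity of  battery X", "MEDLINE", {"memory", "language", "praxis"}, True),
    Abstract("A single memory task", "MEDLINE", {"memory"}, True),
    Abstract("Computerized Battery Y", "PsycINFO", {"memory", "attention", "language"}, True, True),
]
unique = deduplicate(records)
selected = [rec for rec in unique if is_eligible(rec)]
print(len(records), len(unique), len(selected))  # 4 3 1
```

In the actual review, eligibility was decided by human judges reaching consensus; the boolean fields simply record those decisions.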

Information analysis

Descriptive analyses (frequency and percentage) were performed to record the publication year, focal instrument of the study, sample type, sample age range, characterization of the participants, and type of procedure and statistical analysis employed to evaluate evidence of validity.
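As an illustration of these descriptive analyses, the sketch below tabulates frequencies and percentages for one coded variable. The function name and the example codes are hypothetical and do not reproduce the review's data.

```python
from collections import Counter


def frequency_table(values):
    """Return (category, count, percentage) rows, most frequent first."""
    counts = Counter(values)
    total = sum(counts.values())
    return [(category, n, round(100 * n / total, 1)) for category, n in counts.most_common()]


# Hypothetical sample-type codes for five articles (not the review's data).
sample_types = ["clinical+control", "clinical", "clinical+control", "healthy", "clinical+control"]
for category, n, pct in frequency_table(sample_types):
    print(f"{category:<18} {n:>3} {pct:>6.1f}%")
```

When a single article contributes several codes (for example, several validity procedures), the percentages computed this way are percentages of all codes rather than of articles.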

Results

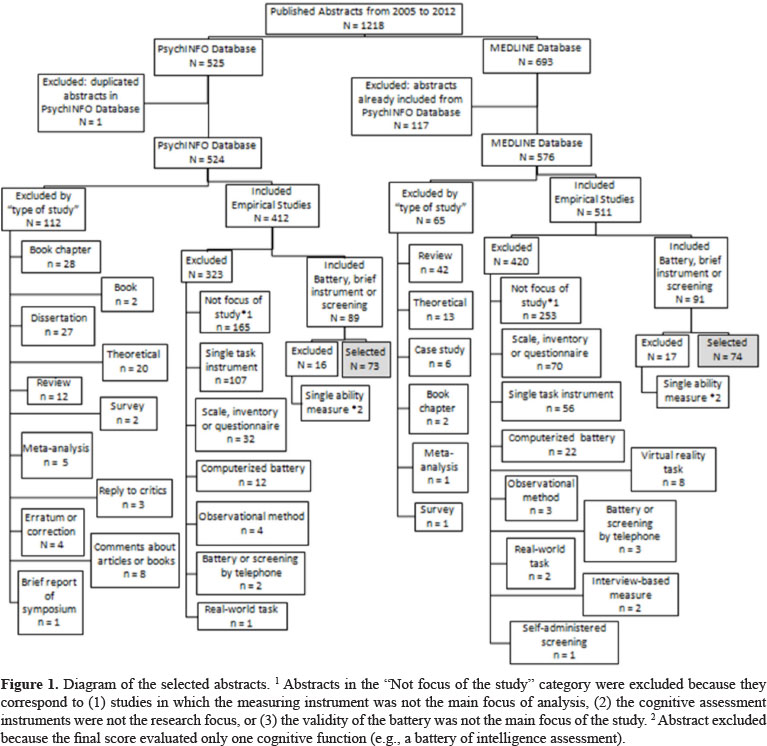

The search for articles using both key words, "neuropsychological assessment" and "validity", returned 1,218 abstracts published in scientific journals between January 2005 and December 2012: 525 from the PsycINFO database and 693 from the MEDLINE database. Of the 693 MEDLINE abstracts, 117 were also indexed in PsycINFO, and one abstract appeared twice within PsycINFO. After these duplicates were removed, 1,100 abstracts remained: 524 from PsycINFO and 576 from MEDLINE. Figure 1 presents a detailed diagram of the selected abstracts.

Only studies of neuropsychological batteries or tests that evaluated at least three cognitive processes and included tasks with face-to-face or traditional paper-and-pencil administration were analyzed. The final selection included 147 abstracts (73 from PsycINFO and 74 from MEDLINE). The distribution of the 147 abstracts by publication year is presented in Figure 2, which shows an increase in the number of studies in recent years, especially in 2010 and 2012. Full-text articles were available for 132 of these abstracts, and all 132 were fully reviewed. Of the 15 abstracts without an available full text, four were excluded because they did not report information about the analytical criteria, and the remaining 11 were reviewed as abstracts. The final review therefore included 132 full-text articles and 11 abstracts. Detailed information for all 143 records is presented in Table 1, including year of publication, journal, authors, number and type of participants (clinical and control/comparison), and instrument.
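The selection flow reported above can be checked with simple arithmetic. This minimal Python sketch only restates the counts given in the text; the variable names are illustrative.

```python
# Counts as reported in the text.
psycinfo_retrieved, medline_retrieved = 525, 693
also_in_psycinfo = 117        # MEDLINE abstracts also indexed in PsycINFO
repeated_within_psycinfo = 1  # one abstract appeared twice in PsycINFO

retrieved = psycinfo_retrieved + medline_retrieved
unique_psycinfo = psycinfo_retrieved - repeated_within_psycinfo
unique_medline = medline_retrieved - also_in_psycinfo
unique = unique_psycinfo + unique_medline

selected = 73 + 74                     # abstracts meeting the inclusion criteria
no_full_text = 15
full_text = selected - no_full_text    # full-text articles reviewed
abstract_only = no_full_text - 4       # four abstracts lacked the needed information
reviewed = full_text + abstract_only   # records summarized in Table 1

print(retrieved, unique, unique_psycinfo, unique_medline)  # 1218 1100 524 576
print(selected, full_text, abstract_only, reviewed)        # 147 132 11 143
```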

The instrument whose psychometric properties were most often analyzed in the studies included in this review was the Repeatable Battery for the Assessment of Neuropsychological Status (RBANS), examined in 12.5% of the articles. Investigations of the psychometric properties of the Montreal Cognitive Assessment (MoCA) were found in 11.2% of the studies. The Mini-Mental State Examination (MMSE) appeared in 7% of the citations, half of which occurred in association with the MoCA. Addenbrooke's Cognitive Examination (ACE) and its revised version (ACE-R) were examined in 5.6% of the articles. Other instruments included the Alzheimer's Disease Assessment Scale-Cognitive Subscale (ADAS-Cog; 4.9%), the Neuropsychological Assessment Battery (NAB; 4.2%), and the Consortium to Establish a Registry for Alzheimer's Disease (CERAD) battery (3.5%).

With regard to sample type, 51.4% of the studies included both a clinical and a healthy/control sample, 35.4% examined clinical samples exclusively, and 13.2% focused on healthy populations. Concerning age, 44.4% of the studies were performed with elderly participants, 22.9% included both adults and the elderly, and 18.8% included adults only. Additionally, 4.2% included youth, adults, and the elderly, and 4.9% were performed exclusively with children. Only one study (.7%) included a sample of children, teenagers, and adults, and another (.7%) analyzed teenagers exclusively. The age group could not be identified in five studies (3.5%). The frequencies and percentages of clinical sample pathologies are presented in Table 2. The clinical samples were predominantly composed of individuals diagnosed with dementia (18.5%), Alzheimer's disease (17.9%), and mild cognitive impairment (16.2%). Patients with acquired brain injury and cerebrovascular diseases were also assessed in a substantial proportion of studies (15.1%).

To evaluate the validity of the batteries, the articles used from one to eight distinct procedures. Most studies used two (25%), three (21.5%), or only one (20.8%) procedure. Four procedures were employed by 13.2% of the studies, five by 10.4%, and six by 6.3%. Only 1.4% of the studies presented seven procedures, and .7% presented eight. The most frequently used procedures were the evaluation of sensitivity and specificity (17.6%), correlations with other tests (15.6%), comparisons between groups (12.3%), analyses of internal consistency (12.1%), test-retest reliability (8.6%), and factor structure analysis (8.6%). All procedures are presented in Table 3.

The most frequently used statistical analyses for neuropsychological battery validation were the Receiver Operating Characteristic (ROC) analysis (13.1%), Pearson product-moment correlation coefficient (12.9%), Cronbach's alpha coefficient (10.9%), analysis of variance or covariance (9.3%), and regression analysis (7.2%). The frequencies and percentages of the statistical analyses are presented in Table 4.

Discussion

This paper presents a systematic review of studies published in international databases between 2005 and 2012 that assessed evidence of the validity of neuropsychological assessment batteries. The increase in the number of papers in recent years suggests growing scientific interest in providing evidence of the validity of neuropsychological batteries.

The main findings demonstrate that the typical procedures and statistical analyses employed in psychological test validation are also present in neuropsychological battery validation studies. Specifically, sensitivity and specificity, correlations with other tests, comparisons between groups, reliability, and factor structure analysis were commonly employed. The same pattern was observed for statistical techniques, with ROC analysis, Pearson correlation, Cronbach's alpha, analysis of variance, and regression analysis predominating.

Assessments of the validity of instrument scores usually consider sources of evidence of construct validity (American Educational Research Association, 1999; Embretson, 2007), which also relate to content, criteria, and patterns of convergence and divergence (Urbina, 2004). In this systematic review, the studies primarily sought validity evidence by examining patterns of convergence and divergence.

The main sources of evidence for patterns of convergence and divergence were correlations with other tests and measures of reliability, such as internal consistency and test-retest reliability. The pattern of correlations with other measures, interpreted in light of theoretically expected relationships, is frequently used as a source of evidence of construct validity (Westen & Rosenthal, 2003). Beyond correlation studies, Urbina (2004) noted that an instrument must also measure the construct precisely and reliably in order to be valid. This is consistent with the idea of reducing reliance on external sources of validity and emphasizing internal sources of evidence to establish test meaning, such as item design principles, domain structure, item interrelationships, and reliability (Embretson, 2007).
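Internal consistency is most often summarized with Cronbach's alpha, computed for k items as alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores). The sketch below implements that formula with the Python standard library; the item scores are hypothetical.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha.

    items: one list of scores per item, with respondents in the same order in every list.
    """
    k = len(items)
    totals = [sum(person) for person in zip(*items)]        # total score per respondent
    sum_item_variances = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - sum_item_variances / variance(totals))


# Hypothetical scores of five respondents on three items of one subtest.
items = [
    [2, 3, 4, 5, 6],
    [1, 3, 3, 4, 5],
    [2, 2, 4, 5, 5],
]
print(round(cronbach_alpha(items), 3))  # 0.967
```

Test-retest reliability, by contrast, is usually estimated by correlating scores from two administrations of the same instrument, for example with Pearson's r or an intraclass correlation coefficient.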

Consistent with this notion, the factor structure of an instrument and the correlations among its subscales should also be investigated. Factor analysis can be used to investigate the dimensionality of an assessment instrument or battery, or to test the theory underlying the battery by examining the factor loadings of the variables (Floyd & Widaman, 1995; Schmitt, Livingston, Smernoff, Reese, Hafer, & Harris, 2010). However, a neuropsychological battery is composed of tasks or tests with unequal numbers of items that examine different constructs. Factor analysis is therefore not recommended for assessing validity when only a few items are available, because at least three variables per dimension are needed to justify the use of this technique (Brown, 2006; Fabrigar, Wegener, MacCallum, & Strahan, 1999).
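Before fitting a formal factor model, researchers often inspect the eigenvalues of the correlation matrix among subtests. The NumPy sketch below shows only that preliminary step (it is not a full exploratory or confirmatory factor analysis). It uses simulated data with two factors and three subtests per factor, matching the minimum of three variables per dimension mentioned above; the simulation settings and function names are assumptions.

```python
import numpy as np


def eigenvalues_of_correlation(scores: np.ndarray) -> np.ndarray:
    """Eigenvalues of the subtest correlation matrix, largest first.

    scores: array of shape (n_participants, n_subtests).
    """
    corr = np.corrcoef(scores, rowvar=False)
    return np.sort(np.linalg.eigvalsh(corr))[::-1]


def kaiser_count(eigenvalues: np.ndarray) -> int:
    """Number of eigenvalues above 1 (Kaiser criterion, a rough heuristic only)."""
    return int(np.sum(eigenvalues > 1.0))


# Simulated (hypothetical) data: two uncorrelated factors, three subtests each,
# every subtest = 0.8 * factor + 0.6 * noise.
rng = np.random.default_rng(seed=0)
n = 500
memory = rng.standard_normal(n)
attention = rng.standard_normal(n)
subtests = np.column_stack(
    [0.8 * memory + 0.6 * rng.standard_normal(n) for _ in range(3)]
    + [0.8 * attention + 0.6 * rng.standard_normal(n) for _ in range(3)]
)

ev = eigenvalues_of_correlation(subtests)
print(np.round(ev, 2), "factors suggested:", kaiser_count(ev))
```

With only one or two subtests per domain, each factor would be identified by too few variables, which is the practical reason for the three-indicator guideline.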

The Pearson product-moment correlation coefficient and Cronbach's alpha are statistics often used to estimate patterns of convergence and divergence in psychological and educational instruments (Creswell, 2008). Their frequent use underscores the popularity of traditional procedures in the validity assessment of instruments. Few studies employed alternatives to classical test theory, such as Item Response Theory (IRT). In IRT, the properties of individual items are estimated, providing more complete characterizations of the items, of the instrument as a whole, and of each examinee's performance. These models can therefore improve the accuracy and precision of neuropsychological tests; however, IRT has had a limited impact on neuropsychological testing, possibly because its use in this field is relatively recent (Thomas, 2011). Using IRT to study the validity of neuropsychological tests could help select the most representative items for evaluating a specific cognitive function. IRT can also identify items with greater discriminatory power in relation to specific deficits (Pedraza et al., 2009; Schultz-Larsen, Kreiner, & Lomholt, 2007).
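To illustrate what IRT adds, the sketch below uses the two-parameter logistic (2PL) model, one common IRT model, in which each item has a discrimination parameter a and a difficulty parameter b. Item information, a^2 P(1 - P), shows how precisely an item measures ability near a given level. The item parameters are hypothetical; in practice they are estimated from response data with dedicated IRT software.

```python
import math


def p_correct(theta, a, b):
    """2PL item response function: probability of a correct response at ability theta."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def item_information(theta, a, b):
    """Fisher information of a 2PL item at ability theta: a^2 * P * (1 - P)."""
    p = p_correct(theta, a, b)
    return a * a * p * (1.0 - p)


# Two hypothetical items with the same difficulty (b = -1, i.e., below-average ability)
# but different discrimination (a).
items = {"item_A": (0.7, -1.0), "item_B": (2.0, -1.0)}
for theta in (-2.0, -1.0, 0.0):
    info = {name: round(item_information(theta, a, b), 3) for name, (a, b) in items.items()}
    print(f"theta = {theta:+.1f}: {info}")
```

Near theta = -1, item_B provides about eight times as much information as item_A (1.0 versus about 0.12), so it separates examinees just above and just below that ability level more sharply. This is the sense in which IRT can flag items with greater discriminatory power for a particular range of deficit.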

Regarding criterion validity, Urbina (2004) suggests assessing the precision of the decisions involved in concurrent and predictive validation. Concurrent validation can be achieved by correlating test scores with criterion measures obtained at about the same time. Studying differences between clinical groups and controls (or healthy samples) also provides information about the precision of concurrent validation decisions. In the present review, the evaluation of sensitivity and specificity and comparisons between contrasting groups were the procedures most often applied as evidence of concurrent validity. Sensitivity and specificity were frequently evaluated with ROC curve analysis. ROC analysis contributes to the diagnostic validation of neuropsychological instruments by showing, across all possible cut-off scores, the trade-off between the instrument's true-positive rate (sensitivity) and its false-positive rate (1 - specificity) with respect to a diagnosis or specific criterion (Burgueño, García-Bastos, & González-Buitrago, 1995).
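A minimal sketch of sensitivity, specificity, and the area under the ROC curve (AUC) for a screening score, assuming hypothetical data and the convention that lower scores indicate impairment (a score at or below the cut-off counts as a positive result). The AUC is computed as the probability that a randomly chosen patient scores lower than a randomly chosen control, which equals the area under the empirical ROC curve.

```python
def sensitivity_specificity(scores, has_diagnosis, cutoff):
    """Sensitivity and specificity when 'score <= cutoff' is read as a positive result.

    Assumes lower scores indicate worse performance.
    """
    pairs = list(zip(scores, has_diagnosis))
    tp = sum(1 for s, d in pairs if d and s <= cutoff)
    fn = sum(1 for s, d in pairs if d and s > cutoff)
    tn = sum(1 for s, d in pairs if not d and s > cutoff)
    fp = sum(1 for s, d in pairs if not d and s <= cutoff)
    return tp / (tp + fn), tn / (tn + fp)


def auc(scores, has_diagnosis):
    """Area under the ROC curve: probability that a randomly chosen patient scores
    lower than a randomly chosen control (ties count one half)."""
    patients = [s for s, d in zip(scores, has_diagnosis) if d]
    controls = [s for s, d in zip(scores, has_diagnosis) if not d]
    wins = sum(1.0 if p < c else 0.5 if p == c else 0.0 for p in patients for c in controls)
    return wins / (len(patients) * len(controls))


# Hypothetical screening scores for five patients and five controls.
scores = [18, 20, 22, 23, 25, 24, 26, 27, 28, 29]
has_diagnosis = [True] * 5 + [False] * 5

for cutoff in (22, 23, 24, 25):
    sens, spec = sensitivity_specificity(scores, has_diagnosis, cutoff)
    print(f"cut-off <= {cutoff}: sensitivity {sens:.2f}, specificity {spec:.2f}")
print(f"AUC = {auc(scores, has_diagnosis):.2f}")  # 0.96
```

Raising the cut-off increases sensitivity at the cost of specificity; the ROC curve traces this trade-off over every cut-off, and the AUC summarizes it in a single number.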

With regard to sample types, many studies analyzed groups of patients diagnosed with dementia, Alzheimer's disease, and mild cognitive impairment. These groups share memory loss as the main symptom, although the patients remain heterogeneous in other ways (Pike, Rowe, Moss, & Savage, 2008). Decreased memory capacity is also a common complaint among patients who undergo neuropsychological assessment, and the choice of these clinical groups may reflect this pattern. The predominance of studies with elderly samples, which accounted for more than half of the articles in this review, is also consistent with this pattern.

Regression analysis also appeared in studies that assessed an instrument's specificity, but it was used mostly to analyze the effect of demographic variables on neuropsychological instrument scores. Other studies of demographic effects also used regression analyses to compare groups from distinct regions or cultures. These studies can contribute cultural or incremental validity evidence (Mungas, Reed, Haan, & González, 2005a). Ecological validity can also be assessed, for example, in studies that compare patients' test performance with their practical daily activities (Chaytor & Schmitter-Edgecombe, 2003; Temple, Zgaljardic, Abreu, Seale, Ostir, & Ottenbacher, 2009).
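One common way regression is used to handle demographic effects is to derive regression-based norms: the expected score is predicted from demographic variables, and an individual's observed score is expressed as a standardized residual. The NumPy sketch below illustrates this under stated assumptions (age and education as the only predictors, simulated data, hypothetical coefficients); it is not the method of any particular reviewed study.

```python
import numpy as np


def fit_demographic_model(age, education, score):
    """Ordinary least squares fit of score ~ b0 + b1 * age + b2 * education."""
    X = np.column_stack([np.ones(len(age)), age, education])
    coef, *_ = np.linalg.lstsq(X, score, rcond=None)
    residual_sd = np.std(score - X @ coef, ddof=X.shape[1])  # ddof = number of coefficients
    return coef, residual_sd


def adjusted_z(coef, residual_sd, age, education, observed):
    """Distance of an observed score from the demographically expected score, in SD units."""
    expected = coef[0] + coef[1] * age + coef[2] * education
    return (observed - expected) / residual_sd


# Simulated normative sample (hypothetical effects: -0.1 per year of age, +0.5 per year of schooling).
rng = np.random.default_rng(seed=1)
n = 200
age = rng.uniform(60, 90, n)
education = rng.integers(0, 17, n)
score = 30 - 0.1 * age + 0.5 * education + rng.normal(0, 1.0, n)

coef, sd = fit_demographic_model(age, education, score)
z = adjusted_z(coef, sd, age=75, education=4, observed=20.0)
print(np.round(coef, 2), round(sd, 2), round(z, 2))
```

Comparing such coefficients across regions or cultures is one way the group comparisons mentioned above can be framed within a regression model.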

Still with regard to criterion validity, a small number of studies investigated other forms of concurrent validity, such as an item's agreement with an external variable (i.e., the absence or presence of a deficit), the detection of clinical improvement using posttreatment scales, and the prediction of other test results. Few studies analyzed predictive validity, which refers to the evaluation of future criteria, such as the capacity to return to work or the development of future cognitive deficits. One study used survival analysis to assess the power of an instrument to predict future outcomes, such as death or institutionalization (Cruz-Oliver, Malmstrom, Allen, Tumosa, & Morley, 2012). The limited number of studies that focused on future criteria reflects the difficulty of implementing viable predictive studies (Urbina, 2004). Despite this complexity, analyses that predict such outcomes as the patient's prognosis or capacity to return to work are necessary and viable for assessing the validity of neuropsychological tasks.
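For readers less familiar with survival analysis, the following minimal sketch computes Kaplan-Meier survival estimates for two hypothetical groups defined by a hypothetical cut-off on a cognitive test, with institutionalization as the outcome. It does not reproduce the analysis of the cited study; formal group comparisons would normally use a log-rank test or Cox regression.

```python
def kaplan_meier(times, events):
    """Kaplan-Meier survival estimates.

    times: follow-up time for each person (e.g., months).
    events: True if the outcome (e.g., institutionalization) occurred, False if censored.
    Returns (time, estimated survival probability) at each time with at least one event.
    """
    data = sorted(zip(times, events))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        tied = [event for time, event in data if time == t]   # everyone with this time
        n_events = sum(tied)
        if n_events:
            survival *= 1 - n_events / at_risk
            curve.append((t, survival))
        at_risk -= len(tied)   # events and censored cases leave the risk set
        i += len(tied)
    return curve


# Hypothetical follow-up (months) for low and high scorers on a screening test.
low_scorers = kaplan_meier([6, 12, 12, 18, 24], [True, True, False, True, False])
high_scorers = kaplan_meier([12, 24, 24, 30, 36], [False, True, False, False, False])
print([(t, round(s, 2)) for t, s in low_scorers])   # [(6, 0.8), (12, 0.6), (18, 0.3)]
print([(t, round(s, 2)) for t, s in high_scorers])  # [(24, 0.75)]
```

A lower estimated survival among low scorers would be the kind of result that supports an instrument's predictive validity for that outcome.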

Specifying homogeneous criteria in clinical neuropsychology samples is difficult (Benedet, 2003). Validity studies often include samples with a wide range of cognitive deficits and neurological involvement. This review indicates that researchers are looking for alternative patient groups, as shown by validity studies with samples of traumatic brain injury, cerebrovascular disease or stroke, schizophrenia, and Parkinson's disease. Notably, many neurological or psychiatric disorders, such as multiple sclerosis, attention-deficit/hyperactivity disorder, and bipolar disorder, are still under investigation and do not have a well-established, homogeneous profile of cognitive deficits. This lack of a homogeneous pattern of deficits limits the usefulness of such samples in validity studies, which require a consistent symptom profile.

Evidence related to content validity was mentioned in a small number of the reviewed studies. Procedures or analyses employed in item development or translation included interrater reliability, percentage of agreement, and qualitative evaluation. Assessments of content validity can provide evidence that items are relevant to and representative of the different constructs being investigated (Urbina, 2004). One explanation for the scarcity of studies related to test content could be that such evidence was published in earlier articles on instrument development or in the original test manuals, which typically address these more fundamental aspects, rather than in standalone validity articles. Nonetheless, from a psychometric point of view, evaluating item representativeness remains important for ensuring the validity of neuropsychological instrument scores, especially given the complexity of the functions evaluated.
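Interrater agreement for content judgments (e.g., whether experts judge each item relevant to the target construct) is often summarized as percentage agreement and Cohen's kappa, which corrects agreement for chance. The sketch below assumes two raters and nominal categories; the ratings are hypothetical.

```python
from collections import Counter


def percent_agreement(rater_1, rater_2):
    """Proportion of items on which two raters assign the same category."""
    return sum(a == b for a, b in zip(rater_1, rater_2)) / len(rater_1)


def cohen_kappa(rater_1, rater_2):
    """Cohen's kappa: agreement corrected for chance (undefined if chance agreement is 1)."""
    n = len(rater_1)
    p_observed = percent_agreement(rater_1, rater_2)
    c1, c2 = Counter(rater_1), Counter(rater_2)
    p_chance = sum(c1[cat] * c2[cat] for cat in set(c1) | set(c2)) / (n * n)
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical judgments of ten items as relevant ("R") or irrelevant ("I").
judge_1 = ["R", "R", "R", "R", "R", "R", "R", "I", "I", "I"]
judge_2 = ["R", "R", "R", "R", "R", "R", "I", "I", "I", "R"]
print(percent_agreement(judge_1, judge_2), round(cohen_kappa(judge_1, judge_2), 2))  # 0.8 0.52
```

The gap between 80% raw agreement and a kappa of about .52 illustrates why chance-corrected indices are preferred when most items fall into one category.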

Some studies employed other statistical analyses or procedures to obtain evidence of validity, such as data completeness, scaling assumptions, targeting, and effect size. Data completeness refers to the extent to which a scale's components are completed in the target sample and the percentage of people for whom a single score can be reported. Scaling assumptions determine whether it is appropriate to sum the instrument's subscales into a single scale score. Targeting evaluates whether the range of cognitive performance measured by the battery corresponds to the range in the sample (Cano et al., 2010). Effect size correlations can provide convenient and informative indices of construct validity (Westen & Rosenthal, 2003).
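A minimal sketch of two of these indices, assuming hypothetical data. Data completeness is taken here as the percentage of completed subtest scores and the percentage of people with no missing subtest (for whom a total score could be reported); the effect size correlation is r = sqrt(t^2 / (t^2 + df)), obtained from a pooled-variance independent-samples t test comparing two groups.

```python
import math
from statistics import mean, variance


def data_completeness(responses):
    """responses: one list per person, with None marking a missing subtest score.
    Returns (% of all subtest scores completed, % of people with no missing subtest)."""
    cells = [value for person in responses for value in person]
    completed_cells = 100 * sum(value is not None for value in cells) / len(cells)
    complete_people = 100 * sum(all(v is not None for v in p) for p in responses) / len(responses)
    return completed_cells, complete_people


def effect_size_r(group_a, group_b):
    """Effect size correlation r = sqrt(t^2 / (t^2 + df)) from a pooled-variance t test."""
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    pooled = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    t = (mean(group_a) - mean(group_b)) / math.sqrt(pooled * (1 / n_a + 1 / n_b))
    return math.copysign(math.sqrt(t * t / (t * t + df)), t)


# Hypothetical data: four people on three subtests; two groups on one total score.
responses = [[10, 12, 9], [11, None, 8], [9, 10, 7], [None, None, 6]]
controls = [28, 27, 29, 26, 30]
patients = [22, 24, 21, 23, 25]
print(data_completeness(responses))                 # (75.0, 50.0)
print(round(effect_size_r(controls, patients), 2))  # 0.87
```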

Considering the results of this review, the most common procedures involve external sources of validity evidence, such as correlations with other measures, sensitivity, and specificity. Among internal sources of evidence, reliability procedures are commonly employed, but few studies have emphasized item development or used modern techniques as validity procedures. In our view, a balance between external and internal sources of validity evidence could improve the psychometric quality of neuropsychological batteries. Additionally, closer attention to item and test development according to standards from both classic and modern techniques is useful and viable for providing validity evidence for instruments that assess very diverse domains, such as neuropsychological batteries. This approach could also reduce the difficulty of studying very heterogeneous samples, such as neurological patients.

Finally, some limitations should be considered when interpreting the results of this review. First, the search did not use truncation or word variants; instead, we relied on established keywords from the databases' thesauri. Second, we excluded computerized neuropsychological batteries because their specific features are beyond the scope of this review.

In conclusion, our study suggests that improving evidence of the validity of neuropsychological instruments is possible. Incorporating both classic and modern psychometric procedures and presenting a broader scope of validity evidence would represent progress in neuropsychological battery validation. By highlighting the most common procedures and statistical analyses employed in this context and the observed limitations, this study may help researchers better plan the validation process for new instruments in the field.

Received 13 November 2012

Received in revised form 23 September 2013

Accepted 24 September 2013

Available online 23 December 2013

Josiane Pawlowski, Universidade Federal do Rio de Janeiro, Instituto de Psicologia, Departamento de Psicometria, Rio de Janeiro, RJ, Brazil. Joice Dickel Segabinazi, Flávia Wagner, and Denise Ruschel Bandeira, Universidade Federal do Rio Grande do Sul, Programa de pós-graduação em Psicologia, Porto Alegre, RS, Brazil.

- Abedi, A., Malekpour, M., Oraizi, H., Faramarzi, S., & Paghale, S. J. (2012). Standardization of the neuropsychological test of NEPSY on 3-4 years old children. Iranian Journal of Psychiatry and Clinical Psychology, 18(1), 52-60.

- Abizanda, P., López-Ramos, B., Romero, L., Sánchez-Jurado, P. M., León, M., Martín-Sebastiá, E., & López-Jiménez, E. (2009). Differentiation between mild cognitive impairment and Alzheimer's disease using the FMLL Mini-Battery. Dementia and Geriatric Cognitive Disorders, 28(2), 179-186.

- Abrisqueta-Gomez, J., Ostrosky-Solis, F., Bertolucci, P. H. F., & Bueno, O. F. A. (2008). Applicability of the Abbreviated Neuropsychologic Battery (NEUROPSI) in Alzheimer disease patients. Alzheimer Disease & Associated Disorders, 22(1), 72-78.

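- Adrián, J. A., Hermoso, P., Buiza, J. J., Rodríguez-Parra, M. J., & González, M. (2008). Estudio piloto de la validez, fiabilidad y valores de referencia normativos de la escala PRO-NEURO en adultos mayores sin alteraciones cognitivas [Pilot study of the validity, reliability, and normative reference values of the PRO-NEURO scale in older adults without cognitive impairment]. Neurología, 23(5), 275-287.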

- American Educational Research Association. (1999). Standards for Educational and Psychological Testing. Washington, DC: American Educational Research Association.

- Azizian, A., Yeghiyan, M., Ishkhanyan, B., Manukyan, Y., & Khandanyan, L. (2011). Clinical validity of the Repeatable Battery for the Assessment of Neuropsychological Status among patients with schizophrenia in the Republic of Armenia. Archives of Clinical Neuropsychology, 26(2), 89-97.

- Bangirana, P., Seggane-Musisi, Allebeck, P., Giordani, B., John, C. C., Opoka, O. R., Byarugaba, J., Ehnval, I. A., & Boivin, M. J. (2009). A preliminary examination of the construct validity of the KABC-II in Ugandan children with a history of cerebral malaria. African Health Sciences, 9(3), 186-192.

- Barekatain, M., Walterfang, M., Behdad, M., Tavakkoli, M., Mahvari, J., Maracy, M., & Velakoulis, D. (2010). Validity and reliability of the Persian language version of the neuropsychiatry unit cognitive assessment tool. Dementia and Geriatric Cognitive Disorders, 29(6), 516-522.

- Barr, W. B., Bender, H. A., Morrison, C., Cruz-Laureano, D., Vazquez, B., & Kuzniecky, R. (2009). Diagnostic validity of a neuropsychological test battery for Hispanic patients with epilepsy. Epilepsy & Behavior, 16(3), 479-483.

- Basic, D., Rowland, J. T., Conforti, D. A., Vrantsidis, F., Hill, K., LoGiudice, D., Harry, J., Lucero, K., & Prowse, R. J. (2009). The validity of the Rowland Universal Dementia Assessment Scale (RUDAS) in a multicultural cohort of community-dwelling older persons with early dementia. Alzheimer Disease and Associated Disorders, 23(2), 124-129.

- Bauer, L., Pozehl, B., Hertzog, M., Johnson, J., Zimmerman, L., & Filipi, M. (2012). A brief neuropsychological battery for use in the chronic heart failure population. European Journal of Cardiovascular Nursing, 11(2), 223-230.

- Bender, H. A., Cole, J. R., Aponte-Samalot, M., Cruz-Laureano, D., Myers, L., Vazquez, B. R., & Barr, W. B. (2009). Construct validity of the Neuropsychological Screening Battery for Hispanics (NeSBHIS) in a neurological sample. Journal of the International Neuropsychological Society, 15(2), 217-224.

- Benedet, M. J. (2003). Metodología de la investigación básica en neuropsicología cognitiva [Methodology of basic research in cognitive neuropsychology]. Revista de Neurología, 36(5), 457-466.

- Benedict, R. H. B., Cookfair, D., Gavett, R., Gunther, M., Munschauer, F., Garg, N., & Weinstock-Guttman, B. (2006). Validity of the minimal assessment of cognitive function in multiple sclerosis (MACFIMS). Journal of the International Neuropsychological Society, 12(4), 549-558.

- Blakesley, R. E., Mazumdar, S., Dew, M. A., Houck, P. R., Tang, G., Reynolds, C. F., III, & Butters, M. A. (2009). Comparisons of methods for multiple hypothesis testing in neuropsychological research. Neuropsychology, 23(2), 255-264.

- Bralet, M. C., Falissard, B., Neveu, X., Lucas-Ross, M., Eskenazi, A. M., & Keefe, R. S. (2007). Validation of the French version of the BACS (the Brief Assessment of Cognition in Schizophrenia) among 50 French schizophrenic patients. European Psychiatry, 22(6), 365-370.

- Brooks, B. L., Strauss, E., Sherman, E. M. S., Iverson, G. L., & Slick, D. J. (2009). Developments in neuropsychological assessment: refining psychometric and clinical interpretive methods. Canadian Psychology/Psychologie Canadienne, 50(3), 196-209.

- Brown, T. A. (2006). Confirmatory factor analysis for applied research. New York, NY: Guilford Press.

- Brunner, H. I., Ruth, N. M., German, A., Nelson, S., Passo, M. H., Roebuck-Spencer, T., Ying, J., & Ris, D. (2007). Initial validation of the Pediatric Automated Neuropsychological Assessment Metrics for childhood-onset systemic lupus erythematosus. Arthritis and Rheumatism, 57(7), 1174-1182.

- Bugalho, P., & Vale, J. (2011). Brief cognitive assessment in the early stages of Parkinson disease. Cognitive and Behavioral Neurology, 24(4), 169-173.

- Burgueño, M. J., García-Bastos, J. L., & González-Buitrago, J. M. (1995). Las curvas ROC en la evaluación de las pruebas diagnósticas [ROC curves in the evaluation of diagnostic tests]. Medicina Clínica, 104(17), 661-670.

- Caldas, V. V., Zunzunegui, M. V., Freire, A. N., & Guerra, R. O. (2012). Translation, cultural adaptation and psychometric evaluation of the Leganés cognitive test in a low educated elderly Brazilian population. Arquivos de Neuro-Psiquiatria, 70(1), 22-27.

- Cano, S. J., Posner, H. B., Moline, M. L., Hurt, S. W., Swartz, J., Hsu, T., & Hobart, J. C. (2010). The ADAS-cog in Alzheimer's disease clinical trials: psychometric evaluation of the sum and its parts. Journal of Neurology, Neurosurgery, and Psychiatry, 81(12), 1363-1368.

- Carod-Artal, F. J., Martínez-Martin, P., Kummer, W., & Ribeiro, L. S. (2008). Psychometric attributes of the SCOPA-COG Brazilian version. Movement Disorders, 23(1), 81-87.

- Carvalho, V. A., Barbosa, M. T., & Caramelli, P. (2010). Brazilian version of the Addenbrooke Cognitive Examination-revised in the diagnosis of mild Alzheimer disease. Cognitive and Behavioral Neurology, 23(1), 8-13.

- Chaytor, N., & Schmitter-Edgecombe, M. (2003). The ecological validity of neuropsychological tests: a review of the literature on everyday cognitive skills. Neuropsychology Review, 13(4), 181-197.

- Cheng, Y., Li, C. B., Wu, W. Y., & Department of Psychiatry (2009). Reliability and validity of the Chinese version of Repeatable Battery for the Assessment of Neuropsychological Status (RBANS) in community elderly. Chinese Journal of Clinical Psychology, 17(5), 535-537.

- Cheng, Y., Wu, W., Wang, J., Feng, W., Wu, X., & Li, C. (2011). Reliability and validity of the Repeatable Battery for the Assessment of Neuropsychological Status in community-dwelling elderly. Archives of Medical Science, 7(5), 850-857.

- Choe, J. Y., Youn, J. C., Park, J. H., Park, I. S., Jeong, J. W., Lee, W. H., Lee, S. B., Park, Y. S., Jhoo, J. H., Lee, D. Y., & Kim, K. W. (2008). The Severe Cognitive Impairment Rating Scale: an instrument for the assessment of cognition in moderate to severe dementia patients. Dementia and Geriatric Cognitive Disorders, 25(4), 321-328.

- Choi, S. H., Shim, Y. S., Ryu, S. H., Ryu, H. J., Lee, D. W., Lee, J. Y., Jeong, J. H., & Han, S. H. (2011). Validation of the Literacy Independent Cognitive Assessment. International Psychogeriatrics, 23(4), 593-601.

- Copersino, M. L., Schretlen, D. J., Fitzmaurice, G. M., Lukas, S. E., Faberman, J., Sokoloff, J., & Weiss, R. D. (2012). Effects of cognitive impairment on substance abuse treatment attendance: predictive validation of a brief cognitive screening measure. American Journal of Drug and Alcohol Abuse, 38(3), 246-250.

- Copersino, M. L., Fals-Stewart, W., Fitzmaurice, G., Schretlen, D. J., Sokoloff, J., & Weiss, R. D. (2009). Rapid cognitive screening of patients with substance use disorders. Experimental and Clinical Psychopharmacology, 17(5), 337-344.

- Creswell, J. W. (2008). Educational research: planning, conducting, and evaluating quantitative and qualitative research. Upper Saddle River, N.J.: Pearson/Merrill Prentice Hall.

- Cruz-Oliver, D. M., Malmstrom, T. K., Allen, C. M., Tumosa, N., & Morley, J. E. (2012). The Veterans Affairs Saint Louis University Mental Status exam (SLUMS exam) and the Mini-mental status exam as predictors of mortality and institutionalization. Journal of Nutrition, Health & Aging, 16(7), 636-641.

- Cuesta, M. J., Pino, O., Guilera, G., Rojo, J. E., Gómez-Benito, J., Purdon, S. E., Franco, M., Martínez-Arán, A., Segarra, N., Tabarés-Seisdedos, R., Vieta, E., Bernardo, M., Crespo-Facorro, B., Mesa, F., & Rejas, J. (2011). Brief cognitive assessment instruments in schizophrenia and bipolar patients, and healthy control subjects: a comparison study between the Brief Cognitive Assessment Tool for Schizophrenia (B-CATS) and the Screen for Cognitive Impairment in Psychiatry (SCIP). Schizophrenia Research, 130(1-3), 137-142.

- Custodio, N., Lira, D., Montesinos, R., Gleichgerrcht, E., & Manes, F. (2012). [Usefulness of the Addenbrooke's Cognitive Examination (Spanish version) in Peruvian patients with Alzheimer's disease and frontotemporal dementia]. Vertex, 23(103), 165-172.

- Damian, A. M., Jacobson, S. A., Hentz, J. G., Belden, C. M., Shill, H. A., Sabbagh, M. N., Caviness, J. N., & Adler, C. H. (2011). The Montreal Cognitive Assessment and the Mini-Mental State Examination as screening instruments for cognitive impairment: item analyses and threshold scores. Dementia and Geriatric Cognitive Disorders, 31(2), 126-131.

- Darvesh, S., Leach, L., Black, S. E., Kaplan, E., & Freedman, M. (2005). The Behavioural Neurology Assessment. Canadian Journal of Neurological Sciences, 32(2), 167-177.

- de Jonghe, J. F. M., Wetzels, R. B., Mulders, A., Zuidema, S. U., & Koopmans, R. T. C. M. (2009). Validity of the Severe Impairment Battery Short Version. Journal of Neurology, Neurosurgery & Psychiatry, 80(9), 954-959.

- de Leonni Stanonik, M., Licata, C. A., Walton, N. C., Lounsbury, J. W., Hutson, R. K., & Dougherty, J. H., Jr. (2005). The Self Test: a screening tool for dementia requiring minimal supervision. International Psychogeriatrics, 17(4), 669-678.

- del Ser, T., Sánchez-Sánchez, F., Garcia de Yébenes, M. J., Otero, A., & Munoz, D. G. (2006). Validation of the Seven-Minute Screen Neurocognitive Battery for the diagnosis of dementia in a Spanish population-based sample. Dementia and Geriatric Cognitive Disorders, 22(5-6), 454-464.

- Deng, C. P., Liu, M., Wei, W., Chan, R. C., & Das, J. P. (2011). Latent factor structure of the Das-Naglieri Cognitive Assessment System: a confirmatory factor analysis in a Chinese setting. Research in Developmental Disabilities, 32(5), 1988-1997.

- Donders, J., & Levitt, T. (2012). Criterion validity of the Neuropsychological Assessment Battery after traumatic brain injury. Archives of Clinical Neuropsychology, 27(4), 440-445.

- Dong, Y., Lee, W. Y., Basri, N. A., Collinson, S. L., Merchant, R. A., Venketasubramanian, N., & Chen, C. L. (2012a). The Montreal Cognitive Assessment is superior to the Mini-Mental State Examination in detecting patients at higher risk of dementia. International Psychogeriatrics, 24(11), 1749-1755.

- Dong, Y., Venketasubramanian, N., Chan, B. P., Sharma, V. K., Slavin, M. J., Collinson, S. L., Sachdev, P., Chan, Y. H., & Chen, C. L. (2012b). Brief screening tests during acute admission in patients with mild stroke are predictive of vascular cognitive impairment 3-6 months after stroke. Journal of Neurology, Neurosurgery, and Psychiatry, 83(6), 580-585.

- Drótos, G., Pákáski, M., Papp, E., & Kálmán, J. (2012). [Is it pseudo-dementia? The validation of the Adas-Cog questionnaire in Hungary]. Psychiatria Hungarica: A Magyar Pszichiátriai Társaság Tudományos Folyóirata, 27(2), 82-91.

- Duff, K., Humphreys Clark, J. D., O'Bryant, S. E., Mold, J. W., Schiffer, R. B., & Sutker, P. B. (2008). Utility of the RBANS in detecting cognitive impairment associated with Alzheimer's disease: sensitivity, specificity, and positive and negative predictive powers. Archives of Clinical Neuropsychology, 23(5), 603-612.

- Duff, K., Patton, D. E., Schoenberg, M. R., Mold, J., Scott, J. G., & Adams, R. L. (2011a). Intersubtest discrepancies on the RBANS: results from the OKLAHOMA Study. Applied Neuropsychology, 18(2), 79-85.

- Duff, K., Schoenberg, M. R., Mold, J. W., Scott, J. G., & Adams, R. L. (2011b). Gender differences on the Repeatable Battery for the Assessment of Neuropsychological Status subtests in older adults: baseline and retest data. Journal of Clinical and Experimental Neuropsychology, 33(4), 448-455.

- Dusankova, J. B., Kalincik, T., Havrdova, E., & Benedict, R. H. (2012). Cross cultural validation of the Minimal Assessment of Cognitive Function in Multiple Sclerosis (MACFIMS) and the Brief International Cognitive Assessment for Multiple Sclerosis (BICAMS). Clinical Neuropsychologist, 26(7), 1186-1200.

- Edgin, J. O., Mason, G. M., Allman, M. J., Capone, G. T., Deleon, I., Maslen, C., Reeves, R. H., Sherman, S. L., & Nadel, L. (2010). Development and validation of the Arizona Cognitive Test Battery for Down syndrome. Journal of Neurodevelopmental Disorders, 2(3), 149-164.

- Embretson, S. E. (2007). Construct validity: a universal validity system or just another test evaluation procedure? Educational Researcher, 36(8), 449-455.

- Ericsson, I., Malmberg, B., Langworth, S., Haglund, A., & Almborg, A. H. (2011). KUD: a scale for clinical evaluation of moderate-to-severe dementia. Journal of Clinical Nursing, 20(11-12), 1542-1552.

- Eshaghi, A., Riyahi-Alam, S., Roostaei, T., Haeri, G., Aghsaei, A., Aidi, M. R., Pouretemad, H. R., Zarei, M., Farhang, S., Saeedi, R., Nazeri, A., Ganjgahi, H., Etesam, F., Azimi, A. R., Benedict, R. H., & Sahraian, M. A. (2012). Validity and reliability of a Persian translation of the Minimal Assessment of Cognitive Function in Multiple Sclerosis (MACFIMS). Clinical Neuropsychologist, 26(6), 975-984.

- Fabrigar, L. R., Wegener, D. T., MacCallum, R. C., & Strahan, E. J. (1999). Evaluating the use of exploratory factor analysis in psychological research. Psychological Methods, 4(3), 272-299.

- Fernández-Ríos, L., & Buela-Casal, G. (2009). Standards for the preparation and writing of psychology review articles. International Journal of Clinical and Health Psychology, 9(2), 329-344.

- Ferreira, I. S., Simões, M. R., & Marôco, J. (2012). The Addenbrooke's Cognitive Examination Revised as a potential screening test for elderly drivers. Accident Analysis and Prevention, 49, 278-286.

- Floyd, F. J., & Widaman, K. F. (1995). Factor analysis in the development and refinement of clinical assessment instruments. Psychological Assessment, 7, 286-299.

- Fonseca, R. P., de Salles, J. F., & Parente, M. A. M. P. (2008). Development and content validity of the Brazilian Brief Neuropsychological Assessment Battery: NEUPSILIN. Psychology & Neuroscience, 1(1), 55-62.

- Freitas, S., Simões, M. R., Alves, L., Vicente, M., & Santana, I. (2012). Montreal Cognitive Assessment (MoCA): validation study for vascular dementia. Journal of the International Neuropsychological Society, 18(6), 1031-1040.

- Freitas, S., Simões, M. R., Marôco, J., Alves, L., & Santana, I. (2012). Construct validity of the Montreal Cognitive Assessment (MoCA). Journal of the International Neuropsychological Society, 18(2), 242-250.

- Fujiwara, Y., Suzuki, H., Yasunaga, M., Sugiyama, M., Ijuin, M., Sakuma, N., Inagaki, H., Iwasa, H., Ura, C., Yatomi, N., Ishii, K., Tokumaru, A. M., Homma, A., Nasreddine, Z., & Shinkai, S. (2010). Brief screening tool for mild cognitive impairment in older Japanese: validation of the Japanese version of the Montreal Cognitive Assessment. Geriatrics & Gerontology International, 10(3), 225-232.

- Gavett, B. E., Lou, K. R., Daneshvar, D. H., Green, R. C., Jefferson, A. L., & Stern, R. A. (2012). Diagnostic accuracy statistics for seven Neuropsychological Assessment Battery (NAB) test variables in the diagnosis of Alzheimer's disease. Applied Neuropsychology: Adult, 19(2), 108-115.

- Green, R. E., Colella, B., Hebert, D. A., Bayley, M., Kang, H. S., Till, C., & Monette, G. (2008). Prediction of return to productivity after severe traumatic brain injury: investigations of optimal neuropsychological tests and timing of assessment. Archives of Physical Medicine and Rehabilitation, 89(12 Suppl.), S51-S60.

- Gutzmann, H., Schmidt, K. H., Rapp, M. A., Rieckmann, N., & Folstein, M. F. (2005). MikroMental Test: Ein kurzes Verfahren zum Demenzscreening [Micro Mental State-Screening for Dementia with a Short Instrument]. Zeitschrift für Gerontopsychologie & Psychiatrie, 18(3), 115-119.

- Hanks, R. A., Millis, S. R., Ricker, J. H., Giacino, J. T., Nakase-Richardson, R., Frol, A. B., Novack, T. A., Kalmar, K., Sherer, M., & Gordon, W. A. (2008). The predictive validity of a brief inpatient neuropsychologic battery for persons with traumatic brain injury. Archives of Physical Medicine and Rehabilitation, 89(5), 950-957.

- Harrison, J., Minassian, S. L., Jenkins, L., Black, R. S., Koller, M., & Grundman, M. (2007). A neuropsychological test battery for use in Alzheimer disease clinical trials. Archives of Neurology, 64(9), 1323-1329.

- Harvey, P. D., Ferris, S. H., Cummings, J. L., Wesnes, K. A., Hsu, C., Lane, R. M., & Tekin, S. (2010). Evaluation of dementia rating scales in Parkinson's disease dementia. American Journal of Alzheimer's Disease and Other Dementias, 25(2), 142-148.

- Heo, J. H., Lee, K. M., Park, T. H., Ahn, J. Y., & Kim, M. K. (2012). Validation of the Korean Addenbrooke's Cognitive Examination for diagnosing Alzheimer's dementia and mild cognitive impairment in the Korean elderly. Applied Neuropsychology: Adult, 19(2), 127-131.

- Hobson, V. L., Hall, J. R., Humphreys-Clark, J. D., Schrimsher, G. W., & O'Bryant, S. E. (2010). Identifying functional impairment with scores from the repeatable battery for the assessment of neuropsychological status (RBANS). International Journal of Geriatric Psychiatry, 25(5), 525-530.

- Hoffmann, M., Schmitt, F., & Bromley, E. (2009). Comprehensive cognitive neurological assessment in stroke. Acta Neurologica Scandinavica, 119(3), 162-171.

- Holmén, A., Juuhl-Langseth, M., Thormodsen, R., Melle, I., & Rund, B. R. (2010). Neuropsychological profile in early-onset schizophrenia-spectrum disorders: measured with the MATRICS battery. Schizophrenia Bulletin, 36(4), 852-859.

- Hoops, S., Nazem, S., Siderowf, A. D., Duda, J. E., Xie, S. X., Stern, M. B., & Weintraub, D. (2009). Validity of the MoCA and MMSE in the detection of MCI and dementia in Parkinson disease. Neurology, 73(21), 1738-1745.

- Hunsley, J. (2009). Introduction to the special issue on developments in psychological measurement and assessment. Canadian Psychology/Psychologie Canadienne, 50(3), 117-119.

- Jones, R. N., Rudolph, J. L., Inouye, S. K., Yang, F. M., Fong, T. G., Milberg, W. P., Tommet, D., Metzger, E. D., Cupples, L. A., & Marcantonio, E. R. (2010). Development of a unidimensional composite measure of neuropsychological functioning in older cardiac surgery patients with good measurement precision. Journal of Clinical and Experimental Neuropsychology, 32(10), 1041-1049.

- Kaneda, Y., Sumiyoshi, T., Keefe, R., Ishimoto, Y., Numata, S., & Ohmori, T. (2007). Brief assessment of cognition in schizophrenia: validation of the Japanese version. Psychiatry and Clinical Neurosciences, 61(6), 602-609.

- Kelly, M. P., Coldren, R. L., Parish, R. V., Dretsch, M. N., & Russell, M. L. (2012). Assessment of acute concussion in the combat environment. Archives of Clinical Neuropsychology, 27(4), 375-388.

- Kessels, R. P. C., Mimpen, G., Melis, R., & Rikkert, M. G. M. O. (2009). Measuring impairments in memory and executive function in older people using the Revised Cambridge Cognitive Examination. American Journal of Geriatric Psychiatry, 17(9), 793-801.

- Konsztowicz, S., Xie, H., Higgins, J., Mayo, N., & Koski, L. (2011). Development of a method for quantifying cognitive ability in the elderly through adaptive test administration. International Psychogeriatrics, 23(7), 1116-1123.

- Kørner, A., Brogaard, A., Wissum, I., & Petersen, U. (2012). The Danish version of the Baylor Profound Mental State Examination. Nordic Journal of Psychiatry, 66(3), 198-202.

- Krigbaum, G., Amin, K., Virden, T. B., Baca, L., & Uribe, A. (2012). A pilot study of the sensitivity and specificity analysis of the standard-Spanish version of the culture-fair assessment of neurocognitive abilities and the Examen Cognoscitivo Mini-Mental in the Dominican Republic. Applied Neuropsychology: Adult, 19(1), 53-60.

- Kutlay, S., Küçükdeveci, A. A., Elhan, A. H., Yavuzer, G., & Tennant, A. (2007). Validation of the Middlesex Elderly Assessment of Mental State (MEAMS) as a cognitive screening test in patients with acquired brain injury in Turkey. Disability and Rehabilitation, 29(4), 315-321.

- Larson, E., Kirschner, K., Bode, R., Heinemann, A., & Goodman, R. (2005). Construct and predictive validity of the Repeatable Battery for the Assessment of Neuropsychological Status in the evaluation of stroke patients. Journal of Clinical and Experimental Neuropsychology, 27(1), 16-32.

- Lee, J. Y., Dong, W. L., Cho, S. J., Na, D. L., Jeon, H. J., Kim, S. K., Lee, Y. R., Youn, J. H., Kwon, M., Lee, J. H., & Cho, M. J. (2008). Brief screening for mild cognitive impairment in elderly outpatient clinic: validation of the Korean version of the Montreal Cognitive Assessment. Journal of Geriatric Psychiatry and Neurology, 21(2), 104-110.

- Lewin, J. J., III, LeDroux, S. N., Shermock, K. M., Thompson, C. B., Goodwin, H. E., Mirski, E. A., Gill, R. S., & Mirski, M. A. (2012). Validity and reliability of The Johns Hopkins Adapted Cognitive Exam for critically ill patients. Critical Care Medicine, 40(1), 139-144.

- Li, X., Xiao, Z., & Xiao, S. F. (2009). Reliability and validity of Chinese version of Alzheimer's Disease Assessment Scale-Cognitive Part. Chinese Journal of Clinical Psychology, 17(5), 538-540.

- Libon, D. J., Rascovsky, K., Gross, R. G., White, M. T., Xie, S. X., Dreyfuss, M., Boller, A., Massimo, L., Moore, P., Kitain, J., Coslett, H. B., Chatterjee, A., & Grossman, M. (2011). The Philadelphia Brief Assessment of Cognition (PBAC): a validated screening measure for dementia. Clinical Neuropsychologist, 25(8), 1314-1330.

- Liu, K. P., Kuo, M. C., Tang, K. C., Chau, A. W., Ho, I. H., Kwok, M. P., Chan, W. C., Choi, R. H., Lam, N. C., Chu, M. M., & Chu, L. W. (2011). Effects of age, education and gender in the Consortium to Establish a Registry for the Alzheimer's Disease (CERAD)-Neuropsychological Assessment Battery for Cantonese-speaking Chinese elders. International Psychogeriatrics, 23(10), 1575-1581.

- Lovell, M. R., & Solomon, G. S. (2011). Psychometric data for the NFL Neuropsychological Test Battery. Applied Neuropsychology, 18(3), 197-209.

- Lunardelli, A., Mengotti, P., Pesavento, A., Sverzut, A., & Zadini, A. (2009). The Brief Neuropsychological Screening (BNS): valuation of its clinical validity. European Journal of Physical and Rehabilitation Medicine, 45(1), 85-91.

- Mahoney, R., Johnston, K., Katona, C., Maxmin, K., & Livingston, G. (2005). The TE4D-Cog: a new test for detecting early dementia in English-speaking populations. International Journal of Geriatric Psychiatry, 20(12), 1172-1179.

- Mansbach, W. E., & MacDougall, E. E. (2012). Development and validation of the short form of the Brief Cognitive Assessment Tool (BCAT-SF). Aging & Mental Health, 16(8), 1065-1071.

- Mansbach, W. E., MacDougall, E. E., & Rosenzweig, A. S. (2012). The Brief Cognitive Assessment Tool (BCAT): a new test emphasizing contextual memory, executive functions, attentional capacity, and the prediction of instrumental activities of daily living. Journal of Clinical and Experimental Neuropsychology, 34(2), 183-194.

- Martínez-Martín, P., Frades-Payo, B., Rodríguez-Blázquez, C., Forjaz, M. J., & de Pedro-Cuesta, J. (2008). Atributos psicométricos de la Scales for Outcomes in Parkinson's Disease-Cognition (SCOPA-Cog), versión en Castellano [Psychometric attributes of the Scales for Outcomes in Parkinson's Disease-Cognition (SCOPA-Cog), Spanish version]. Revista de Neurología, 47(7), 337-343.

- Mate-Kole, C. C., Conway, J., Catayong, K., Bieu, R., Sackey, N. A., Wood, R., & Fellows, R. (2009). Validation of the Revised Quick Cognitive Screening Test. Archives of Physical Medicine and Rehabilitation, 90(9), 1469-1477.

- Mavioglu, H., Gedizlioglu, M., Akyel, S., Aslaner, T., & Eser, E. (2006). The validity and reliability of the Turkish version of Alzheimer's Disease Assessment Scale-Cognitive Subscale (ADAS-Cog) in patients with mild and moderate Alzheimer's disease and normal subjects. International Journal of Geriatric Psychiatry, 21(3), 259-265.

- McCrea, S. M. (2009). A review and empirical study of the composite scales of the Das-Naglieri cognitive assessment system. Psychology Research and Behavior Management, 2, 59-79.

- McKay, C., Casey, J. E., Wertheimer, J., & Fichtenberg, N. L. (2007). Reliability and validity of the RBANS in a traumatic brain injured sample. Archives of Clinical Neuropsychology, 22(1), 91-98.

- McKay, C., Wertheimer, J. C., Fichtenberg, N. L., & Casey, J. E. (2008). The Repeatable Battery for The Assessment of Neuropsychological Status (RBANS): clinical utility in a traumatic brain injury sample. Clinical Neuropsychologist, 22(2), 228-241.

- McLennan, S. N., Mathias, J. L., Brennan, L. C., & Stewart, S. (2011). Validity of the Montreal Cognitive Assessment (MoCA) as a screening test for mild cognitive impairment (MCI) in a cardiovascular population. Journal of Geriatric Psychiatry and Neurology, 24(1), 33-38.

- Mesbah, M., Grass-Kapanke, B., & Ihl, R. (2008). Treatment Target Test Dementia (3TD). International Journal of Geriatric Psychiatry, 23(12), 1239-1244.

- Miller, J. B., Fichtenberg, N. L., & Millis, S. R. (2010). Diagnostic efficiency of an ability-focused battery. Clinical Neuropsychologist, 24(4), 678-688.

- Milman, L. H., Holland, A., Kaszniak, A. W., D'Agostino, J., Garrett, M., & Rapcsak, S. (2008). Initial validity and reliability of the SCCAN: using tailored testing to assess adult cognition and communication. Journal of Speech, Language, and Hearing Research, 51(1), 49-69.

- Mioshi, E., Dawson, K., Mitchell, J., Arnold, R., & Hodges, J. R. (2006). The Addenbrooke's Cognitive Examination Revised (ACE-R): a brief cognitive test battery for dementia screening. International Journal of Geriatric Psychiatry, 21(11), 1078-1085.

- Monllau, A., Pena-Casanova, J., Blesa, R., Aguilar, M., Bohm, P., Sol, J. M., & Hernandez, G. (2007). Valor diagnóstico y correlaciones funcionales de la escala ADAS-Cog en la enfermedad de Alzheimer: datos del proyecto NORMACODEM [Diagnostic value and functional correlations of the ADAS-Cog scale in Alzheimer's disease: data from the NORMACODEM project]. Neurología, 22(8), 493-501.

- Morris, K., Hacker, V., & Lincoln, N. (2012). The validity of the Addenbrooke's Cognitive Examination-Revised (ACE-R) in acute stroke. Disability and Rehabilitation, 34(3), 189-195.

- Mungas, D., Reed, B. R., Haan, M. N., & González, H. (2005a). Spanish and English neuropsychological assessment scales: relationship to demographics, language, cognition, and independent function. Neuropsychology, 19(4), 466-475.

- Mungas, D., Reed, B. R., Tomaszewski Farias, S., & DeCarli, C. (2005b). Criterion-referenced validity of a neuropsychological test battery: equivalent performance in elderly Hispanics and non-Hispanic whites. Journal of the International Neuropsychological Society, 11(5), 620-630.

- Mystakidou, K., Tsilika, E., Parpa, E., Galanos, A., & Vlahos, L. (2007). Brief cognitive assessment of cancer patients: evaluation of the Mini-Mental State Examination (MMSE) psychometric properties. Psycho-Oncology, 16(4), 352-357.

- Nampijja, M., Apule, B., Lule, S., Akurut, H., Muhangi, L., Elliott, A. M., & Alcock, K. J. (2010). Adaptation of Western measures of cognition for assessing 5-year-old semi-urban Ugandan children. British Journal of Educational Psychology, 80(Pt 1), 15-30.

- Nie, K., Zhang, Y., Wang, L., Zhao, J., Huang, Z., Gan, R., Li, S., & Wang, L. (2012). A pilot study of psychometric properties of the Beijing version of Montreal Cognitive Assessment in patients with idiopathic Parkinson's disease in China. Journal of Clinical Neuroscience, 19(11), 1497-1500.

- Nøkleby, K., Boland, E., Bergersen, H., Schanke, A. K., Farner, L., Wagle, J., & Wyller, T. B. (2008). Screening for cognitive deficits after stroke: a comparison of three screening tools. Clinical Rehabilitation, 22(12), 1095-1104.

- Nunes, P. V., Diniz, B. S., Radanovic, M., Abreu, I. D., Borelli, D. T., Yassuda, M. S., & Forlenza, O. V. (2008). CAMcog as a screening tool for diagnosis of mild cognitive impairment and dementia in a Brazilian clinical sample of moderate to high education. International Journal of Geriatric Psychiatry, 23(11), 1127-1133.

- Paajanen, T., Hänninen, T., Tunnard, C., Mecocci, P., Sobow, T., Tsolaki, M., Vellas, B., Lovestone, S., & Soininen, H. (2010). CERAD Neuropsychological Battery total score in multinational mild cognitive impairment and control populations: the AddNeuroMed study. Journal of Alzheimer's Disease, 22(4), 1089-1097.

- Pachet, A. K. (2007). Construct validity of the Repeatable Battery of Neuropsychological Status (RBANS) with acquired brain injury patients. Clinical Neuropsychologist, 21(2), 286-293.

- Park, L. Q., Gross, A. L., McLaren, D. G., Pa, J., Johnson, J. K., Mitchell, M., & Manly, J. J. (2012). Confirmatory factor analysis of the ADNI Neuropsychological Battery. Brain Imaging and Behavior, 6(4), 528-539.

- Parmenter, B. A., Testa, S. M., Schretlen, D. J., Weinstock-Guttman, B., & Benedict, R. H. B. (2010). The utility of regression-based norms in interpreting the minimal assessment of cognitive function in multiple sclerosis (MACFIMS). Journal of the International Neuropsychological Society, 16(1), 6-16.

- Pasquali, L. (2010). Instrumentação psicológica: fundamentos e prática [Psychological instrumentation: foundations and practice]. Porto Alegre: Artmed.

- Pawlowski, J., Fonseca, R. P., Salles, J. F., Parente, M. A. M. P., & Bandeira, D. R. (2008). Evidências de validade do Instrumento de Avaliação Neuropsicológica Breve NEUPSILIN [Validity evidence for the Brief Neuropsychological Assessment Instrument NEUPSILIN]. Arquivos Brasileiros de Psicologia, 60(2), 101-116.

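- Pawlowski, J., Trentini, C. M., & Bandeira, D. R. (2007). Discutindo procedimentos psicométricos a partir da análise de um instrumento de avaliação neuropsicológica breve [Discussing psychometric procedures based on the analysis of a brief neuropsychological assessment instrument]. Psico-USF, 12(2), 211-219.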

- Pedraza, O., Graff-Radford, N. R., Smith, G. E., Ivnik, R. J., Willis, F. B., Petersen, R. C., & Lucas, J. A. (2009). Differential item functioning of the Boston Naming Test in cognitively normal African American and Caucasian older adults. Journal of the International Neuropsychological Society, 15, 758-768.

- Pedraza, O., Lucas, J. A., Smith, G. E., Willis, F. B., Graff-Radford, N. R., Ferman, T. J., Petersen, R. C., Bowers, D., & Ivnik, R. J. (2005). Mayo's Older African American Normative Studies: confirmatory factor analysis of a core battery. Journal of the International Neuropsychological Society, 11(2), 184-191.

- Pietrzak, R. H., Maruff, P., Mayes, L. C., Roman, S. A., Sosa, J. A., & Snyder, P. J. (2008). An examination of the construct validity and factor structure of the Groton Maze Learning Test, a new measure of spatial working memory, learning efficiency, and error monitoring. Archives of Clinical Neuropsychology, 23(4), 433-445.

- Pike, K. E., Rowe, C. C., Moss, S. A., & Savage, G. (2008). Memory profiling with paired associate learning in Alzheimer's disease, mild cognitive impairment, and healthy aging. Neuropsychology, 22(6), 718-728.

- Pirani, A., Brodaty, H., Martini, E., Zaccherini, D., Neviani, F., & Neri, M. (2010). The validation of the Italian version of the GPCOG (GPCOG-It): a contribution to cross-national implementation of a screening test for dementia in general practice. International Psychogeriatrics, 22(1), 82-90.

- Reitan, R. M., & Wolfson, D. (2008). Serial testing of older children as a basis for recommending comprehensive neuropsychological evaluation. Applied Neuropsychology, 15(1), 11-20.

- Reyes, M. A., Perez-Lloret, S., Roldan Gerschcovich, E., Martin, M. E., Leiguarda, R., & Merello, M. (2009). Addenbrooke's Cognitive Examination validation in Parkinson's disease. European Journal of Neurology, 16(1), 142-147.

- Rösche, J., Schley, A., & Benecke, R. (2012). Neuropsychologisches Screening in einer epileptologischen Spezial-Sprechstunde: Ergebnisse und klinische Bedeutung [Neuropsychological screening in an out-patient epilepsy clinic: results and clinical significance]. Nervenheilkunde: Zeitschrift für Interdisziplinäre Fortbildung, 31(3), 171-174.

- Rossetti, H. C., Munro Cullum, C., Hynan, L. S., & Lacritz, L. H. (2010). The CERAD neuropsychiatric battery total score and the progression of Alzheimer disease. Alzheimer Disease and Associated Disorders, 24(2), 138-142.

- Sanz, J. C., Vargas, M. L., & Marín, J. J. (2009). Battery for assessment of neuropsychological status (RBANS) in schizophrenia: a pilot study in the Spanish population. Acta Neuropsychiatrica, 21(1), 18-25.

- Schmidt, K. S., Lieto, J. M., Kiryankova, E., & Salvucci, A. (2006). Construct and concurrent validity of the Dementia Rating Scale-2 Alternate Form. Journal of Clinical and Experimental Neuropsychology, 28(5), 646-654.

- Schmitt, A. L., Livingston, R. B., Smernoff, E. N., Reese, E. M., Hafer, D. G., & Harris, J. B. (2010). Factor analysis of the Repeatable Battery for the Assessment of Neuropsychological Status (RBANS) in a large sample of patients suspected of dementia. Applied Neuropsychology, 17(1), 8-17.

- Schneider, L. S., Raman, R., Schmitt, F. A., Doody, R. S., Insel, P., Clark, C. M., Morris, J. C., Reisberg, B., Petersen, R. C., & Ferris, S. H. (2009). Characteristics and performance of a modified version of the ADCS-CGIC CIBIC+ for mild cognitive impairment clinical trials. Alzheimer Disease and Associated Disorders, 23(3), 260-267.

- Schofield, P. W., Lee, S. J., Lewin, T. J., Lyall, G., Moyle, J., Attia, J., & McEvoy, M. (2010). The Audio Recorded Cognitive Screen (ARCS): a flexible hybrid cognitive test instrument. Journal of Neurology, Neurosurgery & Psychiatry, 81(6), 602-607.

- Schultz-Larsen, K., Kreiner, S., & Lomholt, R. K. (2007). Mini-Mental Status Examination: mixed Rasch model item analysis derived two different cognitive dimensions of the MMSE. Journal of Clinical Epidemiology, 60, 268-279.

- Segarra, N., Bernardo, M., Gutierrez, F., Justicia, A., Fernandez-Egea, E., Allas, M., Safont, G., Contreras, F., Gascon, J., Soler-Insa, P. A., Menchon, J. M., Junque, C., & Keefe, R. (2011). Spanish validation of the Brief Assessment in Cognition in Schizophrenia (BACS) in patients with schizophrenia and healthy controls. European Psychiatry, 26(2), 69-73.

- Seo, E. H., Lee, D. Y., Lee, J. H., Choo, I. H., Kim, J. W., Kim, S. G., Park, S. Y., Shin, J. H., Do, Y. J., Yoon, J. C., Jhoo, J. H., Kim, K. W., & Woo, J. I. (2010). Total scores of the CERAD neuropsychological assessment battery: validation for mild cognitive impairment and dementia patients with diverse etiologies. American Journal of Geriatric Psychiatry, 18(9), 801-809.

- Skinner, J., Carvalho, J. O., Potter, G. G., Thames, A., Zelinski, E., Crane, P. K., & Gibbons, L. E. (2012). The Alzheimer's Disease Assessment Scale-Cognitive-Plus (ADAS-Cog-Plus): an expansion of the ADAS-Cog to improve responsiveness in MCI. Brain Imaging and Behavior, 6(4), 489-501.

- Smerbeck, A. M., Parrish, J., Yeh, E. A., Weinstock-Guttman, B., Hoogs, M., Serafin, D., Krupp, L., & Benedict, R. H. (2012). Regression-based norms improve the sensitivity of the National MS Society Consensus Neuropsychological Battery for Pediatric Multiple Sclerosis (NBPMS). Clinical Neuropsychologist, 26(6), 985-1002.

- Smith, T., Gildeh, N., & Holmes, C. (2007). The Montreal Cognitive Assessment: validity and utility in a memory clinic setting. Canadian Journal of Psychiatry, 52(5), 329-332.

- Sosa, A. L., Albanese, E., Prince, M., Acosta, D., Ferri, C. P., Guerra, M., Huang, Y., Jacob, K. S., de Rodriguez, J. L., Salas, A., Yang, F., Gaona, C., Joteeshwaran, A., Rodriguez, G., de la Torre, G. R., Williams, J. D., & Stewart, R. (2009). Population normative data for the 1066 Dementia Research Group cognitive test battery from Latin America, India and China: a cross-sectional survey. BMC Neurology, 9, 48.

- Strober, L., Englert, J., Munschauer, F., Weinstock-Guttman, B., Rao, S., & Benedict, R. H. (2009). Sensitivity of conventional memory tests in multiple sclerosis: comparing the Rao Brief Repeatable Neuropsychological Battery and the Minimal Assessment of Cognitive Function in MS. Multiple Sclerosis, 15(9), 1077-1084.

- Suh, G. H., & Kang, C. J. (2006). Validation of the Severe Impairment Battery for patients with Alzheimer's disease in Korea. International Journal of Geriatric Psychiatry, 21(7), 626-632.

- Temple, R. O., Zgaljardic, D. J., Abreu, B. C., Seale, G. S., Ostir, G. V., & Ottenbacher, K. J. (2009). Ecological validity of the Neuropsychological Assessment Battery Screening Module in post-acute brain injury rehabilitation. Brain Injury, 23(1), 45-50.

- Thissen, A. J., van Bergen, F., de Jonghe, J. F., Kessels, R. P., & Dautzenberg, P. L. (2010). [Applicability and validity of the Dutch version of the Montreal Cognitive Assessment (moCA-d) in diagnosing MCI]. Tijdschrift Voor Gerontologie En Geriatrie, 41(6), 231-240.

- Thomas, M. L. (2011). The value of Item Response Theory in clinical assessment: a review. Assessment, 18(3), 291-307.

- Trenkle, D. L., Shankle, W. R., & Azen, S. P. (2007). Detecting cognitive impairment in primary care: performance assessment of three screening instruments. Journal of Alzheimer's Disease, 11(3), 323-335.

- Urbina, S. (2004). Essentials of psychological testing. Hoboken, NJ: Wiley.

- Villeneuve, S., Pepin, V., Rahayel, S., Bertrand, J. A., de Lorimier, M., Rizk, A., Desjardins, C., Parenteau, S., Beaucage, F., Joncas, S., Monchi, O., & Gagnon, J. F. (2012). Mild cognitive impairment in moderate to severe COPD: a preliminary study. Chest, 142(6), 1516-1523.

- Walterfang, M., Choi, Y., O'Brien, T. J., Cordy, N., Yerra, R., Adams, S., & Velakoulis, D. (2011). Utility and validity of a brief cognitive assessment tool in patients with epileptic and nonepileptic seizures. Epilepsy & Behavior, 21(2), 177-183.

- Walterfang, M., Siu, R., & Velakoulis, D. (2006). The NUCOG: validity and reliability of a brief cognitive screening tool in neuropsychiatric patients. Australian and New Zealand Journal of Psychiatry, 40(11-12), 995-1002.

- Wege, N., Dlugaj, M., Siegrist, J., Dragano, N., Erbel, R., Jöckel, K. H., Moebus, S., & Weimar, C. (2011). Population-based distribution and psychometric properties of a short cognitive performance measure in the population-based Heinz Nixdorf Recall Study. Neuroepidemiology, 37(1), 13-20.

- Westen, D., & Rosenthal, R. (2003). Quantifying construct validity: two simple measures. Journal of Personality and Social Psychology, 84(3), 608-618.

- Wilde, M. C. (2006). The validity of the Repeatable Battery of Neuropsychological Status in acute stroke. Clinical Neuropsychologist, 20(4), 702-715.

- Wilde, M. C. (2010). Lesion location and repeatable battery for the assessment of neuropsychological status performance in acute ischemic stroke. Clinical Neuropsychologist, 24(1), 57-69.

- Wolfs, C. A., Dirksen, C. D., Kessels, A., Willems, D. C., Verhey, F. R., & Severens, J. L. (2007). Performance of the EQ-5D and the EQ-5D+C in elderly patients with cognitive impairments. Health and Quality of Life Outcomes, 5, 33.

- Wong, A., Xiong, Y. Y., Kwan, P. W., Chan, A. Y., Lam, W. W., Wang, K., Chu, W. C., Nyenhuis, D. L., Nasreddine, Z., Wong, L. K., & Mok, V. C. (2009). The validity, reliability and clinical utility of the Hong Kong Montreal Cognitive Assessment (HK-MoCA) in patients with cerebral small vessel disease. Dementia and Geriatric Cognitive Disorders, 28(1), 81-87.

- Yang, G. G., Tian, J., Tan, Y. L., Wang, Z. R., Zhang, X. Y., Zhang, W. F., Zheng, L. L., Zhou, Y., Wang, Y. C., Li, J., Wu, Z. Q., & Zhou, D. F. (2010). The application of the Repeatable Battery for the Assessment of Neuropsychological Status among normal persons in Beijing. Chinese Mental Health Journal, 24(12), 926-931.

- Yoshida, H., Terada, S., Honda, H., Ata, T., Takeda, N., Kishimoto, Y., Oshima, E., Ishihara, T., & Kuroda, S. (2011). Validation of Addenbrooke's cognitive examination for detecting early dementia in a Japanese population. Psychiatry Research, 185(1-2), 211-214.

- Zgaljardic, D. J., & Temple, R. O. (2010a). Neuropsychological Assessment Battery (NAB): performance in a sample of patients with moderate-to-severe traumatic brain injury. Applied Neuropsychology, 17(4), 283-288.

- Zgaljardic, D. J., & Temple, R. O. (2010b). Reliability and validity of the Neuropsychological Assessment Battery-Screening Module (NAB-SM) in a sample of patients with moderate-to-severe acquired brain injury. Applied Neuropsychology, 17(1), 27-36.

- Zgaljardic, D. J., Yancy, S., Temple, R. O., Watford, M. F., & Miller, R. (2011). Ecological validity of the screening module and the Daily Living tests of the Neuropsychological Assessment Battery using the Mayo-Portland Adaptability Inventory-4 in postacute brain injury rehabilitation. Rehabilitation Psychology, 56(4), 359-365.

- Zhang, B. H., Tan, Y. L., Zhang, W. F., Wang, Z. R., Yang, G. G., Shi, C., Zhang, X. Y., & Zhou, D. F. (2008). Repeatable battery for the assessment of neuropsychological status as a screening test in Chinese: reliability and validity. Chinese Mental Health Journal, 22(12), 865-869.

- Zhou, A., & Jia, J. (2008). The value of the clock drawing test and the mini-mental state examination for identifying vascular cognitive impairment no dementia. International Journal of Geriatric Psychiatry, 23(4), 422-426.

- Zhou, A., & Jia, J. (2009). A screen for cognitive assessments for patients with vascular cognitive impairment no dementia. International Journal of Geriatric Psychiatry, 24(12), 1352-1357.

Publication Dates

Publication in this collection: 28 Feb 2014

Date of issue: Dec 2013

History

Received: 13 Nov 2012

Accepted: 24 Sept 2013

Reviewed: 23 Sept 2013