ABSTRACT

This essay examines how p-hacking and HARKing compromise integrity in management research. Competitive pressures and the “publish or perish” culture often foster questionable practices, resulting in distorted findings and low reproducibility. The discussion critiques overreliance on p-values and neglect of effect sizes and proposes solutions through transparency, preregistration, and open science. Systemic reforms are advocated to strengthen reliability, promote responsible science, and enhance the societal relevance of scientific contributions.

Keywords:

HARKing; management research; p-hacking; questionable research; research ethics.

RESUMO

Esta pensata examina como o p-hacking e o HARKing comprometem a integridade na pesquisa em gestão. Pressões competitivas e a cultura do “publicar ou perecer” incentivam práticas questionáveis, levando a achados distorcidos e baixa reprodutibilidade. A discussão critica a dependência excessiva do p-valor, a negligência dos tamanhos de efeito e propõe soluções por meio de transparência, pré-registro e ciência aberta. Defendem-se reformas sistêmicas para fortalecer a confiabilidade e promover uma ciência responsável, com maior impacto social e relevância científica.

Palavras-chave:

ética em pesquisa; HARKing; pesquisa em Administração; pesquisa questionável; p-hacking.

RESUMEN

Este ensayo examina cómo el p-hacking y el HARKing comprometen la integridad de la investigación en gestión. Las presiones competitivas y la cultura de «publicar o perecer» fomentan prácticas cuestionables, lo que conduce a hallazgos distorsionados y a una baja reproducibilidad. Asimismo, el ensayo critica la dependencia excesiva del valor p, la negligencia de los tamaños del efecto y propone soluciones a través de la transparencia, el prerregistro y la ciencia abierta. Se defienden reformas sistémicas para fortalecer la fiabilidad y promover una ciencia responsable, con mayor impacto social y relevancia científica.

Palabras clave

HARKing; investigación en gestión; p-hacking; investigación cuestionable; ética en la investigación.

INTRODUCTION

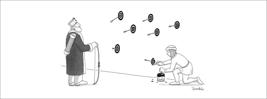

Questionable Research Practices (QRPs) refer to research behaviors that do not constitute outright scientific misconduct but compromise the integrity and validity of research findings (Ioannidis, 2022; National Academies of Sciences, Engineering, and Medicine, 2017). QRPs encompass practices that deviate from established ethical standards and accepted norms of scientific conduct, potentially yielding misleading or non-reproducible results (Kepes et al., 2022). The main QRPs include selective reporting, data dredging, failure to report all study conditions, inadequate documentation of methods, lack of transparency, excessive post hoc analyses, poorly justified sample sizes, p-hacking, and HARKing. We examine the impact of statistical methods on management research, highlighting the pressures and challenges they pose and their influence on scientific progress. In scientific research, practices such as HARKing (Hypothesizing After the Results are Known) represent an often invisible but harmful ethical and methodological deviation. Figure 1 illustrates HARKing, where the researcher hits the target (results) first and only then draws the hypotheses (the crosshairs) around it, thereby inverting conventional scientific logic. This metaphor illustrates the process by which hypotheses are reshaped post hoc to fit desirable findings.

Integrity is essential, and statistical methods convert observations into numerical data (Huang & Wang, 2023). Despite pressures such as competitiveness, social shifts, and rapid information dissemination (Zhao et al., 2021), effective changes are imperative (Wood et al., 2022). Nevertheless, unreliable information persists due to QRPs, where self-interest results in fabricated results that undermine journal credibility (Aguinis et al., 2020).

Competition for publication in high-impact journals encourages rigorous research while simultaneously promoting unethical behavior, thereby threatening credibility (Hudson, 2024; Nair & Ascani, 2022). The "publish or perish" pressure (Dalen, 2021; Tsui, 2022) has constrained scientific freedom and responsibility. While science seeks truth (Popper, 2017), editorial decisions prioritize research relevance and methodological rigor over the significance of results (Promoting reproducibility with registered reports, 2017).

Some researchers resort to QRPs to produce compelling findings that appeal to reviewers, compromising integrity (Bruton et al., 2020). This pressure creates dilemmas involving editor expectations, competition, and institutional demands (Bouter, 2023) and weakens credibility (Stefan & Schönbrodt, 2023). QRPs, such as p-hacking and HARKing, involve manipulating hypotheses and statistical adjustments, thereby distorting findings (Kepes et al., 2022; Syrjänen, 2023). Controlling for confounding variables can also bias outcomes (Speicher, 2021). Statistical significance is typically declared when the p-value falls below 0.05, where the p-value is the probability of obtaining results at least as extreme as those observed if the null hypothesis were true; it is not the probability that the results are due to chance (Schwab & Starbuck, 2024). However, overemphasizing this threshold can further distort the scientific literature (Amrhein et al., 2019).

Reproducibility is crucial for scientific progress, but it faces challenges, including theoretical clarity, substantive importance, and statistical significance (Moody et al., 2022). Poor data management poses a threat to reliability (Bouter, 2023). Surveys reveal widespread QRPs: many Pakistani academics admitted to engaging in them (Fahim et al., 2024), and 40% of Norwegian researchers admitted to them (Kaiser et al., 2022). Bottesini et al. (2022) reported that 74% of researchers rejected p-hacking and HARKing, favoring transparency. How can we ensure that our research practices in management are rigorous and ethical, contribute to advancing knowledge, and avoid pitfalls such as p-hacking and HARKing? This essay examines these QRPs and discusses the consequences of unethical actions on scientific integrity in management.

P-HACKING

P-hacking undermines research credibility by manipulating analyses to achieve statistical significance (Elliott et al., 2022). It distorts findings and leads to misguided policies and decisions (Guo & Ma, 2022). Researchers who engage in it adjust methods, add controls, or remove outliers post hoc without theoretical justification, artificially inflating the number of statistically significant variables (Syrjänen, 2023). By altering statistical tests, researchers present non-significant results as if they were meaningful (Stefan & Schönbrodt, 2023). P-hacking rarely yields extremely low p-values, yet the practice itself is widespread (Bruns & Ioannidis, 2016). The traditional frequentist approach, where a p-value below 0.05 is considered significant, often encourages researchers to manipulate their data to reach this cutoff (Stefan & Schönbrodt, 2023).
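The way repeated testing pushes results under the 0.05 cutoff can be illustrated with a short simulation. The sketch below is a toy under stated assumptions, not an analysis from any cited study: data are drawn with no true effect, a z-test with known unit variance stands in for a real analysis, and a hypothetical "test, add ten observations, test again" rule is compared with a fixed sample size. All function names and parameter values are illustrative.

```python
import math
import random


def two_sided_p(sample):
    """Two-sided z-test p-value for H0: mean = 0, assuming a known SD of 1."""
    n = len(sample)
    z = (sum(sample) / n) * math.sqrt(n)
    return math.erfc(abs(z) / math.sqrt(2))


def false_positive_rate(peek, n_sims=2000, n_start=20, n_max=100, step=10, seed=1):
    """Share of simulated studies with NO true effect that still end with p < 0.05."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        data = [rng.gauss(0, 1) for _ in range(n_start)]
        if peek:
            # Optional stopping: keep adding data until p < 0.05 or n_max is reached.
            while two_sided_p(data) >= 0.05 and len(data) < n_max:
                data.extend(rng.gauss(0, 1) for _ in range(step))
        else:
            # Sample size fixed in advance: collect everything, then test once.
            data.extend(rng.gauss(0, 1) for _ in range(n_max - n_start))
        hits += two_sided_p(data) < 0.05
    return hits / n_sims


honest = false_positive_rate(peek=False)
hacked = false_positive_rate(peek=True)
print(f"fixed n: {honest:.3f}   optional stopping: {hacked:.3f}")
```

With a sample size fixed in advance, the false-positive rate should stay close to the nominal 5%. Under the peeking rule it rises well above that level, even though every individual test looks legitimate in isolation.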

Bayesian methods provide a complementary alternative to traditional significance testing by incorporating prior knowledge and expressing results as probability distributions (Kelter, 2020). Bayesian inference is often viewed as a complementary approach to fixed p-value thresholds, enabling belief updating with new data and facilitating more nuanced interpretations (Pereira & Stern, 2022). Even well-regarded methods, such as Bayesian techniques, may be vulnerable to misuse in the absence of transparency and replicability, reinforcing the importance of ethical commitment and open science (OS) practices across all approaches.

In Bayesian statistics, risks such as poorly specified priors and limited replication can affect the reliability of findings, especially in small samples or complex models (Banner et al., 2020; Ni & Sun, 2003; Röver et al., 2021; Smid & Winter, 2020). These concerns highlight the importance of transparent reporting, careful prior selection, and thorough model checking. Since all priors reflect subjective choices, inadequate justification or technical limitations during implementation may lead to misunderstandings or hinder reproducibility (Banner et al., 2020; Merkle et al., 2023). However, it is worth emphasizing that Bayesian approaches do not guarantee immunity against misuse; without transparency and rigor, they can also be manipulated, for example, through the selection of inadequate priors. When prior specifications are not clearly communicated, replicating Bayesian analyses becomes more difficult, increasing the risk of irreproducible results (Depaoli & Schoot, 2017). Some authors propose Bayesian approaches to mitigate the excessive emphasis on p-values; however, it is crucial to note that, alone, no statistical method prevents questionable practices.
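A minimal sketch of why prior choice matters most in small samples follows. It assumes the simplest conjugate case, a proportion with a Beta prior and binomial data, which is not a model discussed by the cited authors. The three priors and the data values are hypothetical.

```python
def posterior_mean(prior_a, prior_b, successes, trials):
    """Posterior mean of a proportion under a Beta(prior_a, prior_b) prior."""
    return (prior_a + successes) / (prior_a + prior_b + trials)


# Hypothetical priors: uninformative, favorable, and unfavorable to the effect.
priors = {
    "flat Beta(1, 1)": (1, 1),
    "optimistic Beta(8, 2)": (8, 2),
    "skeptical Beta(2, 8)": (2, 8),
}

for label, (a, b) in priors.items():
    small = posterior_mean(a, b, successes=6, trials=10)
    large = posterior_mean(a, b, successes=600, trials=1000)
    print(f"{label}: n=10 -> {small:.3f}, n=1000 -> {large:.3f}")
```

With ten observations, the posterior mean ranges from 0.40 to 0.70 depending only on the prior; with a thousand, all three priors give about 0.60. This is why an unreported or weakly justified prior leaves a small-sample Bayesian result hard to evaluate or replicate.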

Research integrity depends more on the ethics of researchers than on methods, since institutional incentives often shape transparency more than statistical tools (Nosek et al., 2015). As noted by Kelter (2020) and Sijtsma (2016), biased priors in Bayesian models and overfitting in frequentist regressions can both compromise results. Preregistration, where researchers define hypotheses and methods before data collection, enhances transparency and reduces reporting biases (Bruton et al., 2020; Promoting reproducibility with registered reports, 2017). OS promotes credibility through open access, data sharing, and transparent peer review (Martins & Mendes-da-Silva, 2024). Journals adopting OS practices can reduce publication bias and p-hacking (Brodeur et al., 2024). Research integrity requires rigorous study design, replication, statistical training, and expert collaboration (Sijtsma et al., 2016).

The consequences of p-hacking extend to management research, resulting in unreliable conclusions and wasted resources (Ioannidis, 2022). The emphasis on p-values over replication hinders scientific progress (Barth & Lourenço, 2020). Empirical evidence reveals its prevalence: 42% of researchers admitted collecting extra data to achieve statistical significance (Fraser et al., 2018), whereas 88% of Italian researchers admitted at least one QRP (Agnoli et al., 2017). Transparent practices, OS, and ethics education are essential to mitigate QRPs.

Harlow et al. (1997) emphasize the importance of reporting statistical power, particularly in small-sample studies that are prone to Type II errors. Effect size, rather than p-values alone, provides a more substantive interpretation of findings. Overreliance on statistical significance often leads to weak conclusions that lack scientific relevance. Replication strengthens theoretical development and scientific credibility, yet it remains underused due to editorial barriers and limited awareness among researchers (Harlow et al., 1997).
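The gap between statistical significance and substantive importance can be shown with a few lines of arithmetic. The sketch below assumes two equal-sized groups and a large-sample z approximation; the scale means, standard deviation, and sample sizes are hypothetical values chosen for illustration only.

```python
import math


def cohens_d(mean_a, mean_b, sd_pooled):
    """Standardized mean difference (Cohen's d)."""
    return (mean_a - mean_b) / sd_pooled


def two_sample_p(d, n_per_group):
    """Two-sided p-value for a standardized difference d with equal group
    sizes, using a large-sample z approximation."""
    z = d * math.sqrt(n_per_group / 2)
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical satisfaction scores on a 5-point scale, pooled SD of 1.0.
d = cohens_d(3.52, 3.50, 1.0)

for n in (50, 500, 50_000):
    print(f"n per group = {n:>6}: d = {d:.2f}, p = {two_sample_p(d, n):.4f}")
```

The effect size is the same negligible d = 0.02 in every row, yet the p-value drops below 0.05 once the sample is large enough. Reporting d alongside p makes clear that the "significant" result describes a difference of little practical consequence.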

While p-hacking threatens research integrity through statistical manipulation, it often occurs in conjunction with HARKing, thereby compounding the challenge of maintaining scientific rigor. Researchers engaged in p-hacking may adjust hypotheses to match significant findings (Murphy & Aguinis, 2019). These practices compromise both the statistical analysis and the theoretical framework in order to produce publishable results (Kepes et al., 2022). Examining HARKing reveals how these practices undermine scientific inquiry in management research.

HARKING

HARKing occurs when researchers formulate hypotheses post hoc, presenting them as pre-planned (Kerr, 1998). This misrepresentation undermines research integrity, leading to the development of misleading policies and harming the replicability of findings (Limongi, 2024). Tailoring hypotheses to data rather than objectively testing them distorts scientific evidence and reduces reliability (Baruch, 2023). To maintain credibility, hypothesis formulation and data analysis must remain separate.

Transparency in methods and preregistration is crucial for distinguishing between credible and biased studies. HARKing falsely implies that hypotheses preceded data collection, prioritizing significant findings over null results and reducing generalizability (Corneille et al., 2023). Combating this requires preregistering hypotheses and fully disclosing analytical processes. Reviewers and editors play a key role in enforcing transparency and preventing biased reporting (Blincoe & Buchert, 2020). These measures also address the replication crisis (Balafoutas et al., 2025).

Studies on misconduct have indicated the prevalence of QRPs. Up to 34% of researchers admitted engaging in at least one QRP (Fanelli, 2009), and surveys of U.S. psychologists have shown widespread HARKing and post-hoc data collection (John et al., 2012). Similarly, 51% of researchers reported unexpected findings as pre-planned hypotheses (Fraser et al., 2018). This practice inflates effect sizes, distorts the literature, and weakens theoretical development (Murphy & Aguinis, 2019). In management research, HARKing erodes credibility, hinders replication, and widens the gap between academia and practice (Baruch, 2023; Kepes et al., 2022).

The interplay between p-hacking and HARKing creates a pervasive web of QRPs that systematically undermines research credibility in management (Murphy & Aguinis, 2019). These practices distort findings and generate cascading effects: unreliable meta-analyses, hindered theory development, and a widening gap between academic research and practice (Kepes et al., 2022). Combined with publication pressure and the “publish or perish” culture (Dalen, 2021), these threats undermine the foundations of scientific inquiry. As Ioannidis (2005) warns, such accumulation of questionable findings may render most published research false. Addressing this critical juncture requires ethical commitment and systemic reform (Bruton et al., 2020).

Building on the previous discussion, the interplay between HARKing and p-hacking not only distorts scientific findings but also reveals deeper systemic flaws that extend beyond individual misconduct. Therefore, moving from diagnostic critique to actionable reforms becomes essential to restore scientific credibility and reinforce research integrity in the field of management.

ENHANCING INTEGRITY AND TRANSPARENCY IN RESEARCH

HARKing and p-hacking are often discussed within the hypothetico-deductive paradigm, where hypotheses precede data analysis. However, post hoc model modifications, which involve adjusting the model after analysis to fit the data, remain underexplored. While some modifications improve fit, transparency and justification are crucial for integrity. Structural factors influence QRPs, including a preference for novel results (neophilia), the file-drawer effect, and editorial pressure for significant findings (Kline, 2023). Misusing p-values and ignoring effect sizes contribute to these distortions. Integrating effect sizes, confidence intervals, and power analyses can enhance research reliability and mitigate such issues.

Addressing QRPs requires systemic reforms, such as the publication of null results, transparent analyses, and editorial standards that emphasize replication. Sarstedt and Adler (2023) proposed OS practices, such as registration, data sharing, and Bayesian methods, to reduce overreliance on p-values. They also highlighted the role of training, statistical tools (e.g., “statcheck” and the “GRIM test”), and ethical education in detecting and preventing data manipulation.
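To give a sense of how such checks work, the sketch below is a simplified version of the logic behind the GRIM test. It is not the published implementation. It assumes that scores are integers (for example, single Likert items), so their sum must be a whole number and only certain means are attainable for a given sample size. The function name and the example values are illustrative.

```python
import math


def grim_consistent(reported_mean, n, decimals=2):
    """Return True if some integer total of n integer scores yields a mean
    that rounds to reported_mean at the given number of decimals."""
    half_unit = 0.5 * 10 ** (-decimals)
    approx_total = reported_mean * n
    # If any integer total works, the nearest one does, so two candidates suffice.
    candidates = (math.floor(approx_total), math.ceil(approx_total))
    return any(
        abs(total / n - reported_mean) <= half_unit + 1e-9
        for total in candidates
    )


print(grim_consistent(5.18, 28))  # 145 / 28 = 5.1786, which rounds to 5.18
print(grim_consistent(5.19, 28))  # no integer total divided by 28 rounds to 5.19
```

A failed check does not prove manipulation; typos and unreported exclusions produce the same pattern. It does flag a reported value that deserves a closer look by authors, reviewers, or editors.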

To prevent overfitting and artificial improvements, post-hoc model modifications must be limited in confirmatory factor analysis. Excessive adjustments, such as the removal of measured variables, require theoretical justification and validation with new samples (Hair et al., 2014). Major modifications often indicate overfitting, producing sample-specific, non-generalizable models (Adler et al., 2023). Preregistration and OS practices help mitigate overfitting risks by ensuring transparency in modifications. Researchers must document changes, provide theoretical justification, and validate revised models using new data to ensure their accuracy and reliability. When unexpected findings require respecification, transparent reporting minimizes bias and enhances model validity (Page et al., 2018). Platforms such as the Open Science Framework (OSF) facilitate tracking modifications, distinguishing between planned and data-driven adjustments.

The replication crisis in psychology has exposed systemic issues in academic research (Open Science Collaboration, 2015). High-profile cases, such as failed replications of Daryl Bem’s extrasensory perception study, illustrate how inadequate practices distort scientific credibility (Galak et al., 2012). Books such as Science Fiction (Ritchie, 2020) and The Seven Deadly Sins of Psychology (Chambers, 2017) explore the broader structural factors that sustain these issues.

In scientific practice, many methodologically weak or irrelevant findings have gone unnoticed for years, whereas highly visible studies quickly become the focus of replication attempts and public scrutiny. This post-publication dynamic plays a vital role in scientific validation, as illustrated by the case of Daryl Bem’s extrasensory perception study (Galak et al., 2012), whose results were widely contested and failed to replicate. Addressing these challenges requires methodological rigor, transparency, and systemic reforms in research practice. Although peer reviewers act as a quality control filter, their role is not always sufficient to prevent the publication of studies with QRPs, especially when such studies align with dominant narratives or present 'interesting' findings.

Promoting integrity and transparency in research also requires a broader theoretical reflection on how scientific credibility is socially constructed and sustained. Scientific credibility is not just a technical fact, but is socially constructed through norms, justifications, and transparency practices, which require theoretical and community reflection (Banner et al., 2020; Depaoli & Schoot, 2017; Merkle et al., 2023). The peer review system, although essential, cannot be conceived as a neutral or infallible validation mechanism (Atherton et al., 2024). It does not operate neutrally, but within a web of socially constructed judgments, where factors such as institutional prestige, thematic familiarity, and evaluator diversity influence which studies gain prominence, are replicated, or remain marginal to scientific debate.

The scientific community plays a dual role: gatekeeping before publication and filtering what becomes accepted knowledge afterward. Correcting errors and exposing QRPs often relies more on the visibility of findings than on formal procedures. Thus, promoting responsible science requires not just technical fixes but also a systemic reconfiguration of how trust, accountability, and epistemic legitimacy are distributed. This dependence on visibility highlights a central paradox in the process of scientific validation. Studies with low visibility, even if marked by methodological weaknesses or the use of questionable practices, seldom attract scrutiny; on the other hand, studies with significant repercussions become the target of replication and criticism only after they have achieved widespread dissemination (Merkle et al., 2023). Research on transformational leadership, organizational behavior, and motivation strategies, for example, has been criticized with regard to its methodological soundness and the robustness of its findings (Hensel, 2021).

Scientific scrutiny often depends on perceived impact, not just methodological quality. Allied to this, the editorial preference for statistically significant results and innovative narratives reinforces a logic of visibility that can obscure rigorous evaluation, as Depaoli and Schoot (2017) and Banner et al. (2020) point out. Therefore, strengthening scientific integrity requires mechanisms that promote critical review and replication, regardless of the prestige or appeal of the findings.

REFLECTION, CRITICISM, AND RECOMMENDATIONS

QRPs significantly impact scientific progress by reducing research reproducibility and credibility (Ioannidis, 2005). HARKing and p-hacking undermine the reliability of the literature, mislead policymakers, and waste resources on false-positive findings (Nosek et al., 2015). These practices erode public trust, which, in turn, affects funding and the role of science as a reliable source of knowledge. Additionally, they compromise meta-analyses and systematic reviews by distorting aggregated results (Simmons et al., 2011).

Addressing QRPs such as p-hacking and HARKing requires systemic changes, including transparency, preregistration, and open data sharing. Adler et al. (2023) emphasized the importance of structured preregistration, which covers the study design, analysis methods, and reporting deviations. Such frameworks enhance replicability and accountability, particularly for PLS-SEM. However, academia's emphasis on publication quantity over quality fosters QRPs, showing the need for ethics education in graduate programs (Simmons et al., 2011). Integrating research integrity courses can instill responsible practices and mitigate methodological distortions (Honig et al., 2018).

Platforms such as OSF and AsPredicted support transparency by enabling preregistration and distinguishing exploratory from confirmatory analyses. Graduate curricula should incorporate hands-on workshops to demonstrate these tools and foster a culture of OS (Limongi & Marcolin, 2024). Transparent reporting, including justifications for model modifications, prevents overfitting and ensures theoretical consistency (Adler et al., 2023). The replication crisis highlights the need for such reforms (Open Science Collaboration, 2015).

Researchers often fail to recognize QRPs as problematic (Linder & Farahbakhsh, 2020). However, systemic oversight, e.g., audits and statistical checks, can deter malpractice (Bottesini et al., 2022; Bouter, 2023). Aligning research incentives with ethical practices is crucial for maintaining credibility and advancing knowledge (Sijtsma, 2016). Institutions should promote data transparency, methodological rigor, and accountability to improve research quality and its application in management decision-making (Harvey & Liu, 2021).

OS initiatives address QRPs by encouraging complete reporting of results and reducing publication bias (Neoh et al., 2023). Preregistering studies and sharing data improves transparency and strengthens scientific integrity (Akker et al., 2023). Business schools and academic institutions are increasingly adopting these reforms, fostering an environment that values research freedom alongside responsibility (Miller et al., 2024; Tsui, 2022).

Hu et al. (2023) advocated for greater transparency in model development and statistical analysis to counter inflated results. Statistical oversight, such as involving statisticians in research teams, reduces errors and improves the validity of the results (Sijtsma, 2016). Ensuring clarity in reviews through frameworks such as PRISMA enhances reproducibility and minimizes biases (Page et al., 2021). Editors and reviewers must also assess whether the hypotheses are robust or derived from QRPs to foster a more trustworthy academic environment.

Graduate education is vital for research integrity because it addresses p-hacking, HARKing, and concerns about reproducibility. Practical training, case studies, and transparency indices can help students recognize and avoid QRPs (Limongi, 2024). Teaching the value of null results is also essential, as research often overemphasizes significant p-values while neglecting the effect sizes (Rigdon, 2023). A shift toward comprehensive statistical reporting will improve the robustness and applicability of scientific findings.

Despite systemic vulnerabilities, peer review and community scrutiny uphold scientific integrity, but articles using QRPs may go unnoticed unless their findings are impactful, prompting post-publication scrutiny and replication. This filtering function, although imperfect, helps science self-correct and prioritize advances in knowledge (Bruns & Ioannidis, 2016; Nosek et al., 2015). The emblematic case of Bem’s extrasensory perception study (Galak et al., 2012) highlights the importance of post-publication scrutiny as a complement to traditional peer review, reflecting the self-correcting nature of science, which is grounded in organized skepticism. In management research, where findings often claim practical relevance, this collective filtering becomes essential to distinguish impactful contributions from misleading results.

Therefore, enhancing research integrity requires strengthening collective evaluation and promoting openness, which enables criticism, replication, and accountability. While QRPs remain prevalent, educational and institutional reforms can reduce their impact. The replication crisis presents an opportunity to refine methodology and enhance scientific credibility (Frias-Navarro et al., 2020). Critical engagement, transparent reporting, and rigorous methodological standards strengthen research reliability and ensure meaningful contributions to knowledge (Bühner et al., 2022).

The Peer Review Report is available at this link

Reviewers: Diogenes de Souza Bido (https://orcid.org/0000-0002-8525-5218), Universidade Presbiteriana Mackenzie, Centro de Ciências Sociais e Aplicadas, São Paulo, SP, Brazil. Leonardo Vils, Universidade Nove de Julho, Programa de Pós-Graduação em Administração, São Paulo, SP, Brazil. The third reviewer did not authorize disclosure of their identity.

Evaluated through a double-anonymized peer review. Ad hoc Associate Editor: Marcelo Luiz Dias da Silva Gabriel

FUNDING

This study was financed in part by the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior - Brasil (CAPES) - Finance Code 001. The authors thank the Goiás State Research Support Foundation (FAPEG) for the financial support provided for the development of this research.

ACKNOWLEDGEMENTS

The authors thank the Instituto Federal Goiano and the Research Group on Child and Adolescent Health (GPSaCA - https://www.gpsaca.com.br) for their support of this study.

The authors thank the Universidade Federal de Goiás and the Research Group on AJUS - UFG for supporting this study.

DATA AVAILABILITY

Not applicable. This manuscript is an essay and does not involve the generation or analysis of datasets.

REFERENCES

-

Adler, S. J., Sharma, P. N., & Radomir, L. (2023). Toward open science in PLS-SEM: Assessing the state of the art and future perspectives. Journal of Business Research, 169, 114291. https://doi.org/10.1016/j.jbusres.2023.114291

» https://doi.org/10.1016/j.jbusres.2023.114291 -

Agnoli, F., Wicherts, J. M., Veldkamp, C. L. S., Albiero, P., & Cubelli, R. (2017). Questionable research practices among italian research psychologists. PLOS ONE, 12(3), e0172792. https://doi.org/10.1371/journal.pone.0172792

» https://doi.org/10.1371/journal.pone.0172792 -

Aguinis, H., Banks, G. C., Rogelberg, S. G., & Cascio, W. F. (2020). Actionable recommendations for narrowing the science-practice gap in open science. Organizational Behavior and Human Decision Processes, 158, 27-35. https://doi.org/10.1016/j.obhdp.2020.02.007

» https://doi.org/10.1016/j.obhdp.2020.02.007 -

Akker, O. R. van der, Assen, M. A. L. M. van, Bakker, M., Elsherif, M., Wong, T. K., & Wicherts, J. M. (2023). Preregistration in practice: A comparison of preregistered and non-preregistered studies in psychology. Behavior Research Methods, 56, 5424-5433 https://doi.org/10.3758/s13428-023-02277-0

» https://doi.org/10.3758/s13428-023-02277-0 -

Amrhein, V., Greenland, S., & McShane, B. (2019). Scientists rise up against statistical significance. Nature, 567(7748), 305-307. https://doi.org/10.1038/d41586-019-00857-9

» https://doi.org/10.1038/d41586-019-00857-9 -

Atherton, O. E., Westberg, D. W., Perkins, V., Lawson, K. M., Jeftic, A., Jayawickreme, E., Zhang, S., Hu, Z., McLean, K. C., Bottesini, J. G., Syed, M., & Chung, J. M. (2024). Examining personality psychology to unpack the peer review system: Towards a more diverse, inclusive, and equitable psychological science. European Journal of Personality, 0(0) 1-27 https://doi.org/10.1177/08902070241301629

» https://doi.org/10.1177/08902070241301629 -

Balafoutas, L., Celse, J., Karakostas, A., & Umashev, N. (2025). Incentives and the replication crisis in social sciences: A critical review of open science practices. Journal of Behavioral and Experimental Economics, 114, 102327. https://doi.org/10.1016/j.socec.2024.102327

» https://doi.org/10.1016/j.socec.2024.102327 -

Banner, K. M., Irvine, K. M., & Rodhouse, T. J. (2020). The use of Bayesian priors in Ecology: The good, the bad and the not great. Methods in Ecology and Evolution, 11(8), 882-889. https://doi.org/10.1111/2041-210X.13407

» https://doi.org/10.1111/2041-210X.13407 -

Barth, N. L., & Lourenço, C. E. (2020). O P ainda tem valor? RAE-Revista de Administração de Empresas, 60(3), 235-241. https://doi.org/10.1590/s0034-759020200306

» https://doi.org/10.1590/s0034-759020200306 -

Baruch, Y. (2023). HARKing can be good for science: Why, when, and how c/should we hypothesizing after results are known or proposing research questions after results are known. Human Resource Management Journal, 34(4), 865-878 https://doi.org/10.1111/1748-8583.12534

» https://doi.org/10.1111/1748-8583.12534 -

Blincoe, S., & Buchert, S. (2020). Research preregistration as a teaching and learning tool in undergraduate psychology courses. Psychology Learning & Teaching, 19(1), 107-115. https://doi.org/10.1177/1475725719875844

» https://doi.org/10.1177/1475725719875844 -

Bottesini, J. G., Rhemtulla, M., & Vazire, S. (2022). What do participants think of our research practices? An examination of behavioural psychology participants’ preferences. Royal Society Open Science, 9(4), 1-24. https://doi.org/10.1098/rsos.200048

» https://doi.org/10.1098/rsos.200048 -

Bouter, L. (2023). Research misconduct and questionable research practices form a continuum. Accountability in Research, 31(8), 1255-1259. https://doi.org/10.1080/08989621.2023.2185141

» https://doi.org/10.1080/08989621.2023.2185141 -

Brodeur, A., Cook, N., & Neisser, C. (2024). P-Hacking, data type and data-sharing policy. The Economic Journal, 134(659), 985-1018. https://doi.org/10.1093/ej/uead104

» https://doi.org/10.1093/ej/uead104 -

Bruns, S. B., & Ioannidis, J. P. A. (2016). P-Curve and p-hacking in observational research. PLOS ONE, 11(2), e0149144. https://doi.org/10.1371/journal.pone.0149144

» https://doi.org/10.1371/journal.pone.0149144 -

Bruton, S. V., Medlin, M., Brown, M., & Sacco, D. F. (2020). Personal motivations and systemic incentives: Scientists on questionable research practices. Science and Engineering Ethics, 26(3), 1531-1547. https://doi.org/10.1007/s11948-020-00182-9

» https://doi.org/10.1007/s11948-020-00182-9 -

Bühner, M., Schubert, A.-L., Bermeitinger, C., Bölte, J., Fiebach, C., Renner, K.-H., & Schulz-Hardt, S. (2022). DGPs-Vorstand. Der Kulturwandel in unserer Forschung muss in der Ausbildung unserer Studierenden beginnen. Psychologische Rundschau, 73(1), 18-20. https://doi.org/10.1026/0033-3042/a000563

» https://doi.org/10.1026/0033-3042/a000563 - Chambers, C. (2017). The seven deadly sins of psychology: A manifesto for reforming the culture of scientific practice Princeton University Press.

-

Corneille, O., Havemann, J., Henderson, E. L., IJzerman, H., Hussey, I., Xivry, J.-J. O. de, Jussim, L., Holmes, N. P., Pilacinski, A., Beffara, B., Carroll, H., Outa, N. O., Lush, P., & Lotter, L. D. (2023). Beware ‘persuasive communication devices’ when writing and reading scientific articles. ELife, 12, 1-6 https://doi.org/10.7554/eLife.88654

» https://doi.org/10.7554/eLife.88654 -

Dalen, H. P. van. (2021). How the publish-or-perish principle divides a science: the case of economists. Scientometrics, 126(2), 1675-1694. https://doi.org/10.1007/s11192-020-03786-x

» https://doi.org/10.1007/s11192-020-03786-x -

Depaoli, S., & Schoot, R. van de. (2017). Supplemental material for improving transparency and replication in bayesian statistics: The WAMBS-Checklist. Psychological Methods, 22(2), 240-261 https://doi.org/10.1037/met0000065.supp

Elliott, G., Kudrin, N., & Wüthrich, K. (2022). Detecting p-hacking. Econometrica, 90(2), 887-906. https://doi.org/10.3982/ECTA18583

Fahim, A., Sadaf, A., Jafari, F. H., Siddique, K., & Sethi, A. (2024). Questionable research practices of medical and dental faculty in Pakistan: A confession. BMC Medical Ethics, 25(1), 11. https://doi.org/10.1186/s12910-024-01004-4

Fanelli, D. (2009). How many scientists fabricate and falsify research? A systematic review and meta-analysis of survey data. PLoS ONE, 4(5), e5738. https://doi.org/10.1371/journal.pone.0005738

Fraser, H., Parker, T., Nakagawa, S., Barnett, A., & Fidler, F. (2018). Questionable research practices in ecology and evolution. PLOS ONE, 13(7), e0200303. https://doi.org/10.1371/journal.pone.0200303

Frias-Navarro, D., Pascual-Llobell, J., Pascual-Soler, M., Perezgonzalez, J., & Berrios-Riquelme, J. (2020). Replication crisis or an opportunity to improve scientific production? European Journal of Education, 55(4), 618-631. https://doi.org/10.1111/ejed.12417

Galak, J., LeBoeuf, R. A., Nelson, L. D., & Simmons, J. P. (2012). Correcting the past: Failures to replicate psi. Journal of Personality and Social Psychology, 103(6), 933-948. https://doi.org/10.1037/a0029709

Guo, D., & Ma, Y. (2022). The “p-hacking-is-terrific” ocean: A cartoon for teaching statistics. Teaching Statistics, 44(2), 68-72. https://doi.org/10.1111/test.12305

Hair, J. F., Jr., Black, W. C., Babin, B. J., & Anderson, R. E. (2014). Multivariate data analysis (7th ed.). Prentice Hall.

Hankin, C. (2014). Bullseyes. Charlie Hankin.

Harlow, L. L., Mulaik, S. A., & Steiger, J. H. (Eds.). (1997). What if there were no significance tests? Psychology Press.

Harvey, C. R., & Liu, Y. (2021). Uncovering the iceberg from its tip: A model of publication bias and p-hacking. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.3865813

Hensel, P. G. (2021). Reproducibility and replicability crisis: How management compares to psychology and economics - A systematic review of literature. European Management Journal, 39(5), 577-594. https://doi.org/10.1016/j.emj.2021.01.002

Honig, B., Lampel, J., Baum, J. A. C., Glynn, M. A., Jing, R., Lounsbury, M., Schüßler, E., Sirmon, D. G., Tsui, A. S., Walsh, J. P., & Witteloostuijn, A. van. (2018). Reflections on scientific misconduct in management: Unfortunate incidents or a normative crisis? Academy of Management Perspectives, 32(4), 412-442. https://doi.org/10.5465/amp.2015.0167

Hu, H., Moody, G., & Galletta, D. (2023). HARKing and p-hacking: A call for more transparent reporting of studies in the information systems field. Communications of the Association for Information Systems, 52, 853-876. https://doi.org/10.17705/1CAIS.05241

Huang, J., & Wang, Y. (2023). Examining Chinese social sciences graduate students’ understanding of research ethics: Implications for their research ethics education. Humanities and Social Sciences Communications, 10(1), 487. https://doi.org/10.1057/s41599-023-02000-6

Hudson, R. (2024). Responding to incentives or gaming the system? How UK business academics respond to the Academic Journal Guide. Research Policy, 53(9), 105082. https://doi.org/10.1016/j.respol.2024.105082

Ioannidis, J. P. A. (2005). Why most published research findings are false. PLoS Medicine, 2(8), e124. https://doi.org/10.1371/journal.pmed.0020124

Ioannidis, J. P. A. (2022). Correction: Why most published research findings are false. PLOS Medicine, 19(8), e1004085. https://doi.org/10.1371/journal.pmed.1004085

John, L. K., Loewenstein, G., & Prelec, D. (2012). Measuring the prevalence of questionable research practices with incentives for truth telling. Psychological Science, 23(5), 524-532. https://doi.org/10.1177/0956797611430953

Kaiser, M., Drivdal, L., Hjellbrekke, J., Ingierd, H., & Rekdal, O. B. (2022). Questionable research practices and misconduct among Norwegian researchers. Science and Engineering Ethics, 28(1), 2. https://doi.org/10.1007/s11948-021-00351-4

Kelter, R. (2020). Analysis of Bayesian posterior significance and effect size indices for the two-sample t-test to support reproducible medical research. BMC Medical Research Methodology, 20(1), 88. https://doi.org/10.1186/s12874-020-00968-2

Kepes, S., Keener, S. K., McDaniel, M. A., & Hartman, N. S. (2022). Questionable research practices among researchers in the most research-productive management programs. Journal of Organizational Behavior, 43(7), 1190-1208. https://doi.org/10.1002/job.2623

Kerr, N. L. (1998). HARKing: Hypothesizing after the results are known. Personality and Social Psychology Review, 2(3), 196-217. https://doi.org/10.1207/s15327957pspr0203_4

Kline, R. B. (2023). Principles and practice of structural equation modeling (5th ed.). The Guilford Press.

Limongi, R. (2024). The use of artificial intelligence in scientific research with integrity and ethics. Future Studies Research Journal: Trends and Strategies, 16(1), e845. https://doi.org/10.24023/FutureJournal/2175-5825/2024.v16i1.845

Limongi, R., & Marcolin, C. B. (2024). AI literacy research: Frontier for high-impact research and ethics. BAR - Brazilian Administration Review, 21(3), 1-5. https://doi.org/10.1590/1807-7692bar2024240162

Linder, C., & Farahbakhsh, S. (2020). Unfolding the black box of questionable research practices: Where is the line between acceptable and unacceptable practices? Business Ethics Quarterly, 30(3), 335-360. https://doi.org/10.1017/beq.2019.52

Martins, H. C., & Mendes-da-Silva, W. (2024). Ciência aberta na RAE: Quais os próximos passos? RAE-Revista de Administração de Empresas, 64(4), 1-6. https://doi.org/10.1590/s0034-759020240407

Merkle, E. C., Ariyo, O., Winter, S. D., & Garnier-Villarreal, M. (2023). Opaque prior distributions in Bayesian latent variable models. Methodology, 19(3), 228-255. https://doi.org/10.5964/meth.11167

Miller, S. R., Moore, F., & Eden, L. (2024). Ethics and international business research: Considerations and best practices. International Business Review, 33(1), 102207. https://doi.org/10.1016/j.ibusrev.2023.102207

Moody, J. W., Keister, L. A., & Ramos, M. C. (2022). Reproducibility in the social sciences. Annual Review of Sociology, 48(1), 65-85. https://doi.org/10.1146/annurev-soc-090221-035954

Murphy, K. R., & Aguinis, H. (2019). HARKing: How badly can cherry-picking and question trolling produce bias in published results? Journal of Business and Psychology, 34(1), 1-17. https://doi.org/10.1007/s10869-017-9524-7

Nair, L. B., & Ascani, A. (2022). Addressing low-profile misconduct in management academia through theoretical triangulation and transformative ethics education. The International Journal of Management Education, 20(3), 100728. https://doi.org/10.1016/j.ijme.2022.100728

National Academies of Sciences, Engineering, and Medicine. (2017). Fostering integrity in research. The National Academies Press.

Neoh, M. J. Y., Carollo, A., Lee, A., & Esposito, G. (2023). Fifty years of research on questionable research practises in science: Quantitative analysis of co-citation patterns. Royal Society Open Science, 10(10), 1-14. https://doi.org/10.1098/rsos.230677

Ni, S., & Sun, D. (2003). Noninformative priors and frequentist risks of Bayesian estimators of vector-autoregressive models. Journal of Econometrics, 115(1), 159-197. https://doi.org/10.1016/S0304-4076(03)00099-X

Nosek, B. A., Alter, G., Banks, G. C., Borsboom, D., Bowman, S. D., Breckler, S. J., Buck, S., Chambers, C. D., Chin, G., Christensen, G., Contestabile, M., Dafoe, A., Eich, E., Freese, J., Glennerster, R., Goroff, D., Green, D. P., Hesse, B., Humphreys, M., … Yarkoni, T. (2015). Promoting an open research culture. Science, 348(6242), 1422-1425. https://doi.org/10.1126/science.aab2374

Open Science Collaboration. (2015). Estimating the reproducibility of psychological science. Science, 349(6251), 943-951. https://doi.org/10.1126/science.aac4716

Page, M. J., Moher, D., Bossuyt, P. M., Boutron, I., Hoffmann, T. C., Mulrow, C. D., Shamseer, L., Tetzlaff, J. M., Akl, E. A., Brennan, S. E., Chou, R., Glanville, J., Grimshaw, J. M., Hróbjartsson, A., Lalu, M. M., Li, T., Loder, E. W., Mayo-Wilson, E., McDonald, S., … McKenzie, J. E. (2021). PRISMA 2020 explanation and elaboration: Updated guidance and exemplars for reporting systematic reviews. BMJ, 372(160), 1-36. https://doi.org/10.1136/bmj.n160

Page, M. J., Shamseer, L., & Tricco, A. C. (2018). Registration of systematic reviews in PROSPERO: 30,000 records and counting. Systematic Reviews, 7(1), 32. https://doi.org/10.1186/s13643-018-0699-4

Pereira, C. A. B., & Stern, J. M. (2022). The e-value: A fully Bayesian significance measure for precise statistical hypotheses and its research program. São Paulo Journal of Mathematical Sciences, 16(1), 566-584. https://doi.org/10.1007/s40863-020-00171-7

Popper, K. (2017). Logik der Forschung (The Logic of Scientific Discovery). Verlag von Julius Springer.

Promoting reproducibility with registered reports. (2017). Nature Human Behaviour, 1(1), 34. https://doi.org/10.1038/s41562-016-0034

Rigdon, E. E. (2023). How improper dichotomization and the misrepresentation of uncertainty undermine social science research. Journal of Business Research, 165, 114086. https://doi.org/10.1016/j.jbusres.2023.114086

Ritchie, S. (2020). Science fictions: How fraud, bias, negligence, and hype undermine the search for truth. Metropolitan Books.

Röver, C., Bender, R., Dias, S., Schmid, C. H., Schmidli, H., Sturtz, S., Weber, S., & Friede, T. (2021). On weakly informative prior distributions for the heterogeneity parameter in Bayesian random-effects meta-analysis. Research Synthesis Methods, 12(4), 448-474. https://doi.org/10.1002/jrsm.1475

Sarstedt, M., & Adler, S. J. (2023). An advanced method to streamline p-hacking. Journal of Business Research, 163, 113942. https://doi.org/10.1016/j.jbusres.2023.113942

Schwab, A., & Starbuck, W. H. (2024). How Muriel’s Tea stained management research through statistical significance tests. Journal of Management Inquiry, 34(2), 226-230. https://doi.org/10.1177/10564926241257164

Sijtsma, K. (2016). Playing with data: Or how to discourage questionable research practices and stimulate researchers to do things right. Psychometrika, 81(1), 1-15. https://doi.org/10.1007/s11336-015-9446-0

Sijtsma, K., Veldkamp, C. L. S., & Wicherts, J. M. (2016). Improving the conduct and reporting of statistical analysis in psychology. Psychometrika, 81(1), 33-38. https://doi.org/10.1007/s11336-015-9444-2

Simmons, J. P., Nelson, L. D., & Simonsohn, U. (2011). False-positive psychology. Psychological Science, 22(11), 1359-1366. https://doi.org/10.1177/0956797611417632

Smid, S. C., & Winter, S. D. (2020). Dangers of the defaults: A tutorial on the impact of default priors when using Bayesian SEM with small samples. Frontiers in Psychology, 11, 1-11. https://doi.org/10.3389/fpsyg.2020.611963

Speicher, P. J. (2021). Commentary: Statistical adjustment disorder - The limits of propensity scores. The Journal of Thoracic and Cardiovascular Surgery, 162(4), 1255-1256. https://doi.org/10.1016/j.jtcvs.2020.10.104

Stefan, A. M., & Schönbrodt, F. D. (2023). Big little lies: A compendium and simulation of p-Hacking strategies. Royal Society Open Science, 10(2), 1-30. https://doi.org/10.1098/rsos.220346

Syrjänen, P. (2023). Novel prediction and the problem of low-quality accommodation. Synthese, 202(6), 182. https://doi.org/10.1007/s11229-023-04400-2

Tsui, A. S. (2022). From traditional research to responsible research: The necessity of scientific freedom and scientific responsibility for better societies. Annual Review of Organizational Psychology and Organizational Behavior, 9(1), 1-32. https://doi.org/10.1146/annurev-orgpsych-062021-021303

Wood, T., Souza, R., & Caldas, M. P. (2022). The relevance of management research debate: A historical view, 1876-2018. Journal of Management History, 28(3), 409-427. https://doi.org/10.1108/JMH-10-2021-0056

Zhao, R., Li, Z., & Huang, Y. (2021). Correlation research on the practice of school administrative ethics and teachers’ job morale and job involvement. Revista de Cercetare Si Interventie Sociala, 72, 44-55. https://doi.org/10.33788/rcis.72.3

Publication Dates

Publication in this collection: 28 Nov 2025

Date of issue: 2025

History

Received: 26 Aug 2024

Accepted: 14 Aug 2025

FROM ETHICAL CONDUCT TO RESPONSIBLE SCIENCE IN MANAGEMENT RESEARCH

Note: The results are obtained before the hypothesis is formulated, as if the researcher were ‘aiming after the shot.’ Source: